Is there a Cognos cleanup or migration looming in your future? Is management asking how much it will cost and how long it will take? Are you feeling a bit nervous about the hidden complexities that have built up over the last 10, 15 or even 20+ years?

Which data models need to be mothballed, which need consolidation, which need refactoring, and which are suitable the way they are? Will your target platform support your requirements? Which reports are mission critical, which are popular, which are duplicates and which are not being used? Is there embedded logic in your reports? What if one or more of your data sources needs to change as part of the process?

Chances are you’re feeling a bit frozen in the headlights and not quite sure where to start.

Let us be your guide through the countless questions and interrelated answers while we show you some possible paths to unwinding your Cognos investment.

In this on-demand webinar, we discuss foundational concepts you need to confidently assess, plan, budget and execute a Cognos cleanup or migration. We take you through our proven methodology for determining Cognos migration timelines and resources. See our secret weapon that automagically takes care of one of the biggest hurdles to assessing any Cognos migration or cleanup: getting the details behind the who, what and where of every report and model living in your content store.

Experience has shown that taking the time to plan out a migration saves countless dollars and shortens the project timeline. Let’s remove the overwhelm from your upcoming Cognos cleanup and migration and turn it into an opportunity for modernization success.

Presenters

Peter Jogopulos

Senior Consultant

Senturus, Inc.

Peter is a seasoned data architect who is passionate about using technology to design and implement solutions for clients.

Scott Felten

Managing Director

Senturus, Inc.

Scott is passionate about bringing the right people, process and technology together to solve complex technology problems for clients, ultimately delivering solutions that provide departmental and organizational value.

Machine transcript

Welcome to today’s Senturus webinar on How to Confidently Assess and Budget a Cognos Cleanup or Migration. Thanks for joining us today. A couple of quick housekeeping things before we get started.

0:17

In your GoToWebinar control panel you will see an option for a question section.

0:24

You can type questions into that control panel as we go along. If you go to the next slide, we can see an example of that. So on your GoToWebinar control panel, you can type questions in there in real time. We may answer some questions while the webinar is running. But if not, we’ll get to them at the end of the session. So go ahead and type your questions as you come up with them, and we will answer as many of those as we can as we go along here today.

0:55

And on the next slide: to obtain the presentation, a little bit later we will post the slide deck to our Senturus website, so you’ll be able to go to senturus.com/resources to download the slide deck, and we’ll post that in the chat as well once that’s available. So you can keep an eye out for that.

1:16

In terms of today’s agenda, we’ll do some quick introductions of today’s presenters. Talk a little bit about how we got here, and how BI has grown more and more complex, and how environments have a tendency to sprawl over time.

1:30

I’ll talk a little bit about what we’ve seen, heard and learned from all of you as far as what you’ve encountered with your own cleanups and migrations. We’ll do a little bit of focus on the important metrics to consider.

1:45

What we recommend, and a little overview of Senturus, as a company, finishing off with Q and A and again, we’ll answer as many of your questions as we can during the webinar, today.

1:56

If we don’t get to your question during today’s webinar, we will respond to those afterwards, and we’ll post the answers to the questions, also, on our website.

2:08

Quick introductions for today’s presenters, who will be Peter Jogopulos and Scott Felten. Peter is a seasoned data architect with over 20 years of experience working in the BI world. As long as I’ve known Peter, he has been part of the Cognos world.

2:22

In addition to Peter, we have Scott Felten, Managing Director here at Senturus. Scott’s been producing solutions that deliver value to our customers. So, we are thrilled to have Peter and Scott here today as presenters. I’m Steve Reed-Pitman, Director of Architecture and Engineering. I’m just here for the formalities, so we can skip me and go on to the next.

2:52

So we’re going to do a couple of quick polls, and then I’m going to turn it over to Scott. So the first poll is, what is your best guess of the total number of reports in your environment?

3:03

So, I’m going to go ahead and launch that, and everybody should see a window there. You can choose any one of the following options. We’re just curious to know how many reports you have, best guess.

3:15

Of course, it’s hard to know exactly in a lot of environments. What’s your best guess of the total number of reports in your environment?

3:24

I see some answers coming in here. So, far, it looks like about half of you are in the 1000 to 2500 reports range. That’s pretty common. Quite a few of you are, in smaller environments, less than 500.

3:41

We’ve got a few more coming in.

3:44

Looks like we’ve got, 62% of you have voted, so I’m going to go ahead and keep the poll open just for a little bit longer.

3:51

You get your answers in there. And then, again, it doesn’t have to be exact. I realize a lot of the time, it’s hard to know just how many reports you actually have out there, particularly because there often are a tremendous number of reports hiding in individual users’ My Folders in the Cognos world.

4:12

All right, so, I’m just going to give a few more seconds for everybody to get their answers in.

4:18

Go ahead and close out this poll.

4:22

Quick share of those results so that everybody can see what we’ve got. So again, a little over half of you are in that 1K to 2500 report range.

4:33

Quite a few are down in that smaller environment range, less than 500, and 10 or 11% of you have a pretty large collection of reports. And, of course, the more reports you have, the more challenging it is to analyze and figure out how to tackle that when you’re doing a migration.

4:53

Can we get those results? And we do have one more poll before we go on to the presentation.

5:02

So the second poll is: what percentage of your reports are highly complex? And I realize that also is a bit of a subjective judgement, but you probably know which reports in your environment are the big, hairy reports and which are pretty simple and straightforward.

5:22

Punch your answers into the poll there. Looks like about half of you have responded so far.

5:29

And what I’m seeing here so far, the lion’s share somewhere between six and 25% complex reports.

5:40

I’m just going to give this a few more seconds. It looks like most of you have already answered the poll.

5:47

So, I’m going to go ahead and close that out.

5:50

Share the results. So, yeah, about two thirds of you are in that 6 to 25% range, but a good quarter of you answered that more than 25% of your reports are complex.

6:06

So, that’s what we’re here to talk about today, is how to deal with that complexity. And really, just how to kind of start to eat the elephant of sorting through what’s in your current environment, versus what you actually need to keep going forward.

6:28

Scott, can you go ahead and take over.

6:33

Great, a little bit of discussion of complexity?

6:37

Awesome, Thanks, and welcome, everyone, and thanks, Steve, for getting us started.

6:44

The poll results are interesting to me, specifically.

6:50

Maybe I’ll talk a little bit more about that as we, move on through the conversation today.

7:07

My role in the, webinar today is to introduce the topic a bit and focus on one very specific topic, which is really related to BI complexity. And in a kind of a specific way that I’ll get to.

7:23

Peter is going to take over and talk a little bit more about some technical stuff.

7:59

BI complexity is a really big topic, so I’m going to try to be extremely brief and start at the beginning, so we can orient ourselves. How did we get here?

8:30

How did we get to business intelligence at all and, you know, how do we frame this discussion of BI complexity?

8:39

If we go back a couple of hundred years, you start to find, you know, the advent of the concept of business intelligence showing up in literature and some theory.

8:54

It’s kind of in the fifties where we get to the point where it starts showing up in our papers.

9:01

And then the idea really comes to fruition with, you know, kind of modern database technology in the sixties.

9:07

And then BI vendors started popping up in the seventies, this is a very IBM centric view of history sketched out here, primarily because we’re talking about Cognos today.

9:21

Obviously, there are different lenses through which you could look at this history. But the most important part here is that we get into the eighties through the two thousands with data warehousing, you know, starting with Inmon, and then eventually Kimball.

9:35

And then, the BI tool, expansion in the market that probably a fair number of people on this call likely date back into those, you know, two thousands era with, you know, the different BI vendors.

9:50

So, into the modern era and into the topic for today around Cognos, you know, obviously, in the two thousands, we have Cognos 8 and ReportNet.

10:02

Most of us are going to be relatively anchored in Cognos 10, you know, with the studios and Framework Manager. As we progress through the years, and you maybe take a step back and look at an overview of the feature set that Cognos has offered the community, its customers, over the years,

10:28

What you’ll really see is a high degree of consistency with the core pieces of Cognos, right?

10:34

The idea of Framework Manager and the report authoring tool. And then, of course, all the services that lie underneath that. Of course, the querying technology has changed. We’ve got in-memory databases.

10:47

We have support for new visualizations and data science now, with Jupyter Notebook.

10:54

So, it certainly spans the gamut.

10:58

But consistent through the entire thing is this core concept of, you know, a framework manager, this idea that, in order to build a report, it makes more sense to put something between the report itself and the data source, which is, of course, our models, right?

11:18

And, in a sense, the content management system that Cognos really is, the metadata content management system, is itself dealing with the core problem of complexity.

11:33

So, that’s its whole reason for existing. You know, back in the day,

11:38

You wanted to count things up. You had pen and paper.

11:41

You had a measuring stick.

11:42

You had to physically touch things, or have your eyes on them. As we progress through, you know, the years, we start putting sensors on things.

11:52

We start building databases. Manual entry turns into sensor-based entry. You know, financial information no longer comes from handwritten or hand-typed journal entries; it’s now based on e-commerce systems.

12:08

So, all of that volume needs to be managed and that volume gets managed through a content management system, as we know that’s Cognos. So, that’s great.

12:20

One of the reasons why we, at least, you know, ourselves as Cognos experts at Senturus, think about it this way is because we’ve been keeping our eye on it for 20 years.

12:36

So, as the different versions come out, you know, we see, you know, the new feature sets, but what we see consistently is this idea of the model and the report, which are the key components.

12:47

And they hold really, quite honestly, the elements of the report.

12:53

So, you know, how do we think about this, you know, this idea of, you know, complexity.

13:03

You know, we obviously have complexity growing over the years with all the different features in Cognos and we certainly are dealing with complexity in the amount of data and the types of data, and how we organize it.

13:15

And, you know, the platform itself.

13:19

But if we try to start to understand just basic Cognos, you know, in aggregate, what is it really trying to do?

13:27

It’s trying to get some data out of some data sources. We’re going to model it here.

13:33

You can see, you know, the simplified version of a kind of a Cognos platform or Dataflow type of a thing. You’ve got your physical layer and your business layer, your packages.

13:43

And there are, of course, your reports, and all of your, you know, your objects inside those.

13:50

So as we start to think about, you know, one report, and understand one report, and maybe use the concept of reverse engineering a report, yeah, it’s complex.

14:04

There are a lot of items, and you can see the relationships between the packages and the report objects, and certainly the business layer and the physical layer, and, of course, the data source and the model.

14:17

Then, with, you know, with this kind of rudimentary diagram, we can say, yeah, this is manageable. I can handle this, right? Like, I can, look at a report, and I can reverse engineer it.

14:29

And my goal here is to, you know, clean the data up or understand the report, or maybe move it from one place to another, or even, you know, migrate it to a whole new reporting platform.

14:41

If that’s our goal, it seems solved at this point, but then we run into a challenge. And the challenge is, we don’t have one report. We don’t have two reports. We don’t have 10 reports.

14:52

We, in a lot of cases, have thousands of reports, or tens of thousands of reports. We work with clients who have hundreds of thousands into the millions of reports, multiple models, etc.

15:04

And that simple picture, that simplified picture that I started with, starts to become blurry.

15:10

And it starts to become almost an insurmountable challenge.

15:15

And when we talk to customers quite a bit, what we hear is that they’re really struggling with trying to even understand how to get started.

15:25

How big is a project going to be? Things like that?

15:28

So, one of the things that we’ve learned in a recent webinar, what we did is we asked a question: What are your goals for Cognos?

15:37

And, in that webinar, you know, the focus was, you know, to try to understand migration and modernization.

15:46

The goals that our customers are telling us were, you know, what you might imagine for yourself as well, you’re changing the data source.

15:54

Maybe you’re moving from on prem to the cloud, or you’re doing a Cognos cleanup. Most of the attendees, in fact, were really focused on actually cleaning up their environments for one reason or another.

16:08

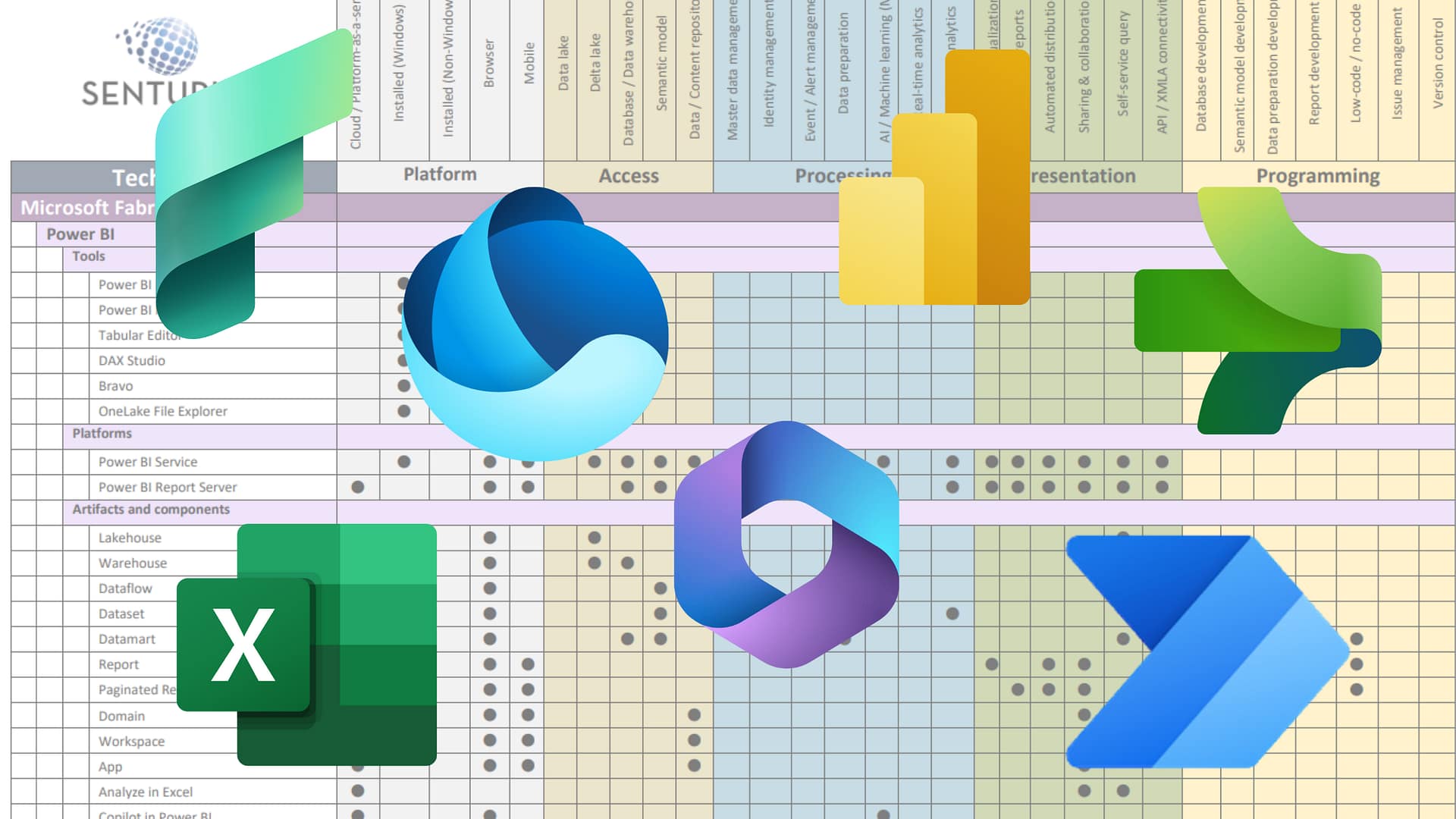

And, then, increasingly, we’re getting more calls and having more conversations related to moving some or all of the Cognos inventory, the reports themselves, to a second platform, possibly Tableau or Power BI or some other platform that the organization

16:29

or a business unit wants to adopt.

16:33

So what we’ve seen in the real world, when we’re out there talking with clients, is the volumes.

16:42

If you remember that picture of not just one report, not just one package, but, you know, in the real world, the volumes that we’re seeing are really quite large.

16:55

We’re seeing report inventories upwards of 5000 to 100,000 here.

17:01

I think in our poll, the most common was somewhere around 2,500. When we first have conversations with clients, those numbers are usually the numbers that IT knows about, right, as opposed to the ones that IT can’t see.

17:21

Report executions for a particular report can be anywhere from not at all to over a thousand a day.

17:28

We see multiple models and packages.

17:33

You know, sometimes into the thousands. Query subject and query item counts are off the charts. Report, query and query item counts are off the charts.

17:42

And so when you add all these numbers up and you consider the relationship between all of these objects, you start to realize that there’s a lot of combinations, right?

17:53

It’s not just the relationship between the data source and the report through the model, or maybe not through the model, for one report. That’s for thousands of reports across thousands of objects, both in the model as well as in the report itself.

18:13

And then, adding to that and, you know, kind of harkening back to the topic of this webinar. Which is how do you build a budget?

18:23

How do you get a level of effort estimate on what it would take to do a cleanup, or what it would take to do a partial or full migration, or even to validate your Cognos environment after a data source change,

18:38

or a large ETL change, or maybe the addition of a new data source into the mix?

18:47

We see somewhere in the range of two up to 80 hours for a single report to be looked at and validated.

18:57

Now, when we look at that range from 2 to 80 hours, we really span that across a variety of complexity.

19:04

So, very simple, low complexity report, one to two hours, no problem. Take a look at it.

19:11

Double check it, right? Maybe even recreating it in another system is not

19:16

a big challenge. Medium complexity, a bit more. High complexity, a bit more. Very high complexity,

19:24

Uh, certainly. People have highly complex reports, and then we even have a concept of kind of an outlier report. And that’s, when we get up into that 40 to 80 hour range for a single report.

19:36

We see that a lot of organizations have maybe 1 to 10 at most of those outlier-type reports.
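
As a rough illustration of how those per-report ranges could roll up into a budget, here is a minimal Python sketch. Only the low (1 to 2 hours) and outlier (40 to 80 hours) ranges come from the discussion above; the medium, high and very high ranges, the tier names and the sample inventory are hypothetical placeholders, not Senturus figures.

```python
# Hours per report by complexity tier, as (minimum, maximum) bounds.
# "low" and "outlier" follow the ranges mentioned in the talk;
# "medium", "high" and "very_high" are hypothetical placeholders.
HOURS_PER_REPORT = {
    "low": (1, 2),
    "medium": (2, 8),
    "high": (8, 20),
    "very_high": (20, 40),
    "outlier": (40, 80),
}


def estimate_hours(report_counts):
    """Return (min_hours, max_hours) for a dict of tier -> report count."""
    min_total = 0
    max_total = 0
    for tier, count in report_counts.items():
        low, high = HOURS_PER_REPORT[tier]
        min_total += low * count
        max_total += high * count
    return min_total, max_total


# Hypothetical in-scope inventory after unused reports have been removed.
inventory = {"low": 300, "medium": 120, "high": 50, "very_high": 20, "outlier": 5}
min_hours, max_hours = estimate_hours(inventory)
print(f"Estimated effort: {min_hours} to {max_hours} hours")
```

Keeping the ranges in one table makes it easy to rerun the estimate once the real tier counts and your own per-tier hours are known.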

19:47

So with all of that information about kind of what we’ve seen out there in the market and this idea of the complexity that’s inherent in the Cognos environment.

20:03

What we’ve learned is that at the core of doing any sort of a migration or a cleanup is really trying to understand this concept of combinatorial complexity.

20:15

It’s really at the heart.

20:17

It’s what drives all of the cost and all of the effort in any kind of a cleanup. Really understanding that combinatorial complexity is the challenge. It’s not necessarily trying to understand Cognos better.

20:32

It’s not really trying to understand, you know, a particular data source connection or a particular type of report or an element.

20:41

It’s how do you wrap your arms around the entire report inventory, the entire environment itself, and start to address the challenge of combinatorial complexity.

20:52

The numbers are just so large that it really is impossible to reverse engineer

21:00

a Cognos environment that’s more than five years old, so you have to find a different way of doing it.

21:08

Now, you know, one of the first things you’re going to learn is that there are variable usage patterns.

21:16

So, most of the time when we talk to clients, their intuition, I think, is spot on.

21:23

Which is, we have a lot of reports, but we’re pretty sure people aren’t using a whole bunch of them.

21:28

So, we look at these variable usage patterns and say: if our business users haven’t touched this report in six months, or in the last three months, or even the last year, or something like that,

21:41

some scoping business requirement like that can take 50 to 95% of the entire report inventory completely out of the picture.

21:51

So, scoping out a large amount of those reports

21:57

also scopes out a large amount of the complexity.

22:00

This is one of the main ways to deal with that combinatorial

22:04

complexity problem: just reducing the complexity by taking sheer numbers of reports out of the mix.
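
A scoping rule like "not run in the last six months" can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the `name` and `last_run` fields and the sample reports are hypothetical, and in practice the last execution date would come from whatever usage or audit data your environment records.

```python
from datetime import date, timedelta


def in_scope(reports, today, max_idle_days=180):
    """Keep reports that have run within the last max_idle_days days.

    Reports that have never run (last_run is None) are scoped out.
    """
    cutoff = today - timedelta(days=max_idle_days)
    return [
        r for r in reports
        if r["last_run"] is not None and r["last_run"] >= cutoff
    ]


# Hypothetical inventory rows: report name and last execution date.
reports = [
    {"name": "Daily Sales", "last_run": date(2024, 5, 30)},
    {"name": "Old Audit", "last_run": date(2022, 1, 15)},
    {"name": "Never Run", "last_run": None},
]

kept = in_scope(reports, today=date(2024, 6, 1))
print([r["name"] for r in kept])
```

Changing `max_idle_days` to 90 or 365 gives the three-month and one-year variants of the same business rule.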

22:14

The second most important one, and I think the area where we tend to focus the most, is on this idea of grouping

22:24

reports, looking at things that are similar.

22:26

So once we’ve gotten rid of the reports that aren’t being used, or aren’t mission critical,

22:32

We now start to take what’s leftover and put them in logical groups.

22:37

So one of the logical groups is, Is it duplicated?

22:41

One of the other logical groups would be, are these reports highly similar?

22:49

Um, is.

22:51

What’s the level of complexity in the report, and how do we, think about that?

22:55

And of all of the things I’m talking about, what’s really key, what’s really important, is that we’re not doing this by hand, one at a time. Look at a report, fill out a spreadsheet.

23:07

Look at another report, fill out another spreadsheet, right?

23:11

You can spend so much time in that analysis that you never actually get to the place where you do your cleanup.

23:21

What we’ve done when we work with our clients is try to automate as much of that process as possible. We’ve built some solutions that help us get our work done. Some of those solutions are available, you know, for others to use as well. If you’re interested, let us know.

23:37

And, so, this is.

23:39

I’m going to introduce Peter, and Peter is going to talk a little bit about how we use some of those solutions to, you know, address some of these issues of wrapping our arms around the inventory.

23:52

Understanding which bits of content are being used and not used.

23:58

What groups they fall in, how we kind of slice and dice all of that.

24:02

And then, and then a little later, I’ll talk about process itself.

24:07

So with that, I will turn it over to Peter.

24:11

Thank you, Scott.

24:13

So if you want to go to the next slide.

24:14

So part of this process that Scott’s been talking about is, you know, going back to the earlier slide where we have a single report that’s multiplied out by, you know, the content that we have. And so, how do we bring it into focus? Part of the process was, you know, following our own methodology that we’ve used for other clients, as if we were looking at a finance, HR, supply chain or any other organizational process where we were trying to look at the metrics.

24:44

So, we, in turn, started to focus on the Cognos metadata that exists within the environment.

24:51

And, lo and behold, when we go through that process and bring out the content, you know, we end up with a series of complexity outcomes.

25:02

And the first one here is really, you know, giving some insights into the complexity of the reports that are out there, as well as how many reports are out there in that Cognos environment. What is the makeup of the report? Where does a report live?

25:18

And one of the instant things that we can easily apply is, you know, the ability to see how many reports we have out there that are sitting in,

25:27

you know, My Folders.

25:29

Or, in cases where you have directory structures within your, you know, main Team Content listing, you can figure out how many reports are there that might be maintained by end users,

25:41

if you’re not deploying a My Folders strategy, by looking at the report path, you know, and creating some additional filters. But at least in this particular perspective, we can get, you know, insights into the reports.

25:55

You know, how many times they have been executed, and what the complexity of those reports themselves is.

26:04

And at the same time, we can also take a look at how many FM models are published in terms of packages that are out there in the Cognos content Store, and along the same manner, we can look at the complexity of those.

26:19

Being able to look at how many database tables are utilized as part of this makeup, how many query subjects were ultimately created, how many data sources.

26:30

And you know also then the referencing point back to the reports themselves. How many reports are utilizing this particular package?

26:38

And what’s the execution count of those reports?

26:41

And, you know, how many users are actually executing the reports themselves?

26:49

And, so, that, you know, when we talk about users, if we go to the next slide, we have the ability, at this point, to see the users that are actually accessing the Cognos environment.

27:01

And you can also see, you know, how many reports they’re executing, versus how many folks have access to the Cognos environment

27:11

but, essentially, haven’t accessed a report within a given timeframe. And so this allows you to do a little bit of a license true-up, if you will, if you’re looking to see how many users are still using the system. At the same time, you know, you’re able to see who your true power users are in terms of their execution of reports over a given time period.

27:44

And by taking a look at the report complexity that we talked about, you know, we’re able to break it down.

27:49

Like Scott said, into a low, medium, high, very high and, you know, outlier grouping. So, it starts to give us a little more focus with the reports.

28:00

You know, we’re able to break down each report by looking at how many pages are contained within the report, how many lists, how many crosstabs, how many visualizations are there, and, you know, how many queries are supporting each of those outputs.

28:17

You know, do we have join queries, union queries? All of that information that is contained within that report is utilized, you know, for a scoring mechanism that can be adjusted

28:30

individually based upon, you know, the maturity of the Cognos environment and/or the maturity of the developers that are maintaining that environment.
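
A scoring mechanism of that kind could look roughly like the Python sketch below. It is an illustration, not the scoring Senturus uses: the weights, the tier thresholds and the field names are all hypothetical, and the point is only that counts of report elements feed an adjustable weighted sum that maps to a complexity tier.

```python
# Hypothetical weights per report element; adjust to suit the maturity
# of the environment and of the developers maintaining it.
WEIGHTS = {
    "pages": 1,
    "lists": 1,
    "crosstabs": 2,
    "visualizations": 2,
    "queries": 2,
    "join_queries": 4,
    "union_queries": 4,
}

# Hypothetical upper score bounds for each tier, checked in order.
TIERS = [(10, "low"), (25, "medium"), (50, "high"), (100, "very_high")]


def complexity_score(report, weights=WEIGHTS):
    """Weighted sum of element counts; missing elements count as zero."""
    return sum(weight * report.get(key, 0) for key, weight in weights.items())


def complexity_tier(score, tiers=TIERS):
    """Map a score to a tier name; anything above the last bound is an outlier."""
    for upper_bound, name in tiers:
        if score <= upper_bound:
            return name
    return "outlier"


# Hypothetical element counts for two reports.
simple = {"pages": 1, "lists": 1, "queries": 1}
hairy = {"pages": 6, "lists": 4, "crosstabs": 5, "visualizations": 3,
         "queries": 12, "join_queries": 6, "union_queries": 2}

for report in (simple, hairy):
    score = complexity_score(report)
    print(score, complexity_tier(score))
```

Passing a different `weights` or `tiers` argument is how the scoring would be tuned per environment without changing the functions themselves.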

28:44

Along those same lines, you know, we can also provide some metrics as it relates to individual report executions.

28:50

You know, which reports are highly utilized, and how many times are they being executed over a given timeframe, to be able to see which reports are standing out.

29:05

And, at the same time, you know, for folks that maybe have created a report view against an existing report, we’re able to capture how many times a report view has been executed, as you can see in the slide based on the green bar content. But we can also associate it back to the original report that it comes from, just by going through and associating that report view back to the main report that it references.

29:34

You know, so we don’t lose track of the actual content and report that it’s based off of.
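
Rolling report view executions up to the report they reference can be sketched as follows. The record layout here (`type`, `path`, `base_report`, `executions`) is a hypothetical simplification for illustration, not the actual content store schema.

```python
from collections import Counter


def rollup_executions(objects):
    """Total executions per base report, counting report views
    toward the report they reference."""
    totals = Counter()
    for obj in objects:
        if obj["type"] == "reportView":
            target = obj["base_report"]  # credit the referenced report
        else:
            target = obj["path"]
        totals[target] += obj["executions"]
    return dict(totals)


# Hypothetical inventory: two reports and one view of the first report.
objects = [
    {"type": "report", "path": "/Team/Sales", "executions": 40},
    {"type": "reportView", "path": "/Users/amy/Sales view",
     "base_report": "/Team/Sales", "executions": 25},
    {"type": "report", "path": "/Team/HR", "executions": 3},
]

print(rollup_executions(objects))
```

With the view counted toward its base report, a report that looks lightly used on its own can turn out to be popular once its views are included.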

29:41

Yeah, Peter, and one of the things, I’ll just, I’ll jump in and add here, is that you know, this report consumption idea?

29:50

The idea that the popularity of a report drives complexity, um, doesn’t really make sense in and of itself until you combine it with the complexity of the reports that they’re looking at.

30:07

So the intersection between report complexity and report popularity, we found, is a really critical thing to being able to understand the overall project of doing a cleanup or the overall project of doing a migration.

30:26

There’s just so much content there, you know, if we, you know, go back a bit here and take a look at this, you can imagine if that pie chart were a bit different, right, and we had a lot more mediums and highs and a lot less lows.

30:45

You can see how the nature of our project would not only be more labor intensive, but could also be more complex in general, right? Because you’re really getting, you’re stepping up every time when you get to those complexity numbers.

31:01

If you have a lot of highly complex reports built on, you know, packages that are highly popular, it increases your risk when you’re starting to touch those things.

31:15

So while we look at just the popularity in and of itself,

31:19

and while it’s interesting and useful, especially for scoping,

31:23

I think the real value comes in understanding the intersection between the two of them.

31:37

Definitely, that leads also in, into, you know, when we’re looking at the complexity, in this particular case.

31:44

This slide is sort of depicting a view from the model’s perspective, looking at the model and how popular that model is in terms of the reports that it services, and the complexity of that model. To give you a sort of visualization in this particular case, the top left gives us a highly popular model that’s being utilized, but with a relatively low complexity in terms of being able to build out that model.

32:15

And so, again, the idea is taking a look at the models, and again, if, especially if you’re talking about a conversion effort, you’ve got a model that’s very popular in terms of the reports that are being utilized and a relatively low complex model, in terms of migrating over to, say, a Power BI or Tableau environment. Just gives you some focus as to what would be a good starting point for that, those migration efforts.

32:47

Now and again, then it allows you to then move onto your high popular, high complex, you know.

32:53

And so on, you know, to be able to sort of plan out what level of effort, you know, for the migration efforts that you might want to undertake.

33:03

And, at the same time, this main focus has been on just outcomes that are only in the public domain,

33:10

really ignoring, you know, My Folders content. But again, that content can also be included with a simple click to eliminate the filter and look at all reports, to see where that scale might sit.

33:25

So, again, just gives you some great insights into the model, the popularity, and the complexity. And, hopefully, it starts to set forth a plan of how you can tackle a huge migration effort when you’re looking at all of the content you have within your current Cognos environment.

33:45

Yeah, and I would agree, and we’re about to transition back to me here, Peter.

33:49

Um, I think one of the big learnings from, you know, these last slides,

33:58

and I’ll talk a little bit more about our process next, is that these are really table-stakes questions that we should be able to answer quickly.

34:07

But we find that it’s difficult to answer them without the aid of some sort of an understanding of the entire inventory and a methodology.

34:18

for looking at the data, like a methodology for understanding popularity and complexity at the model level and complexity at the report level.

34:27

And to start to break that out, into areas where we can say, where do we start a project, does it make sense for us to start at the high popularity or high complexity models?

34:39

Well, it might, because there might be a business driver, and maybe we really need to, you know, start with, for instance, the Data Warehouse Query Model or something along those lines.

34:49

But, generally speaking, we want to look at an IT project from, through the lens of lowering risk as much as possible on the upfront before we start writing code or changing anything.

35:01

So when we’re paper and pencil writing a plan, we want high impact, which means high popularity, but low complexity, so we can drive risk out.

35:11

So imagine a four phase project.

35:13

We would certainly, you know, at least out of the gate, recommend

35:18

starting off with that high-popularity, low-complexity model first, right?

35:23

Gives you a big bang for your buck.

35:25

You can get your model out there. You can get some reports cleaned up or redeveloped, or whatever

35:31

the project happens to be. And now you’re going to have some learnings and some success.

35:37

Then you can move on to, you know, the next item in your list, which possibly might be low popularity, low complexity, or maybe right on to the high popularity, high complexity.

35:49

Being able to click a button and get this information is the real game changer when you’re trying to, you know, plan a 3-, 6- or 12-month project.
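The quadrant-based phasing described above can be sketched in a few lines of Python. This is only an illustration: the `Model` fields, the two cutoff values and the order of phases two through four are assumptions made for the example, not something stated in the webinar. The speaker only commits to starting with high popularity and low complexity.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    popularity: int   # assumed measure, e.g. report executions in the audit window
    complexity: int   # assumed measure, e.g. a 0-100 complexity score


# Hypothetical cutoffs; in practice they would come from your own inventory.
POPULARITY_CUTOFF = 1000
COMPLEXITY_CUTOFF = 50

# Phase 1 follows the recommendation in the talk (high popularity, low complexity).
# The ordering of the remaining quadrants is a judgment call and is assumed here.
PHASE_ORDER = {
    ("high", "low"): 1,
    ("low", "low"): 2,
    ("high", "high"): 3,
    ("low", "high"): 4,
}


def quadrant(model: Model) -> tuple[str, str]:
    """Return (popularity band, complexity band) for a model."""
    pop = "high" if model.popularity >= POPULARITY_CUTOFF else "low"
    cx = "high" if model.complexity >= COMPLEXITY_CUTOFF else "low"
    return pop, cx


def plan_phases(models: list[Model]) -> list[tuple[int, Model]]:
    """Order models by phase, most popular first within each phase."""
    ranked = sorted(models, key=lambda m: (PHASE_ORDER[quadrant(m)], -m.popularity))
    return [(PHASE_ORDER[quadrant(m)], m) for m in ranked]


if __name__ == "__main__":
    inventory = [
        Model("Data Warehouse Query Model", popularity=9000, complexity=85),
        Model("Sales Summary", popularity=4000, complexity=20),
        Model("Legacy Finance", popularity=50, complexity=70),
        Model("HR Headcount", popularity=200, complexity=15),
    ]
    for phase, model in plan_phases(inventory):
        print(phase, model.name, quadrant(model))
```

If a business driver forces a high-complexity model to go first, as the speaker allows, changing the numbers in `PHASE_ORDER` is the only edit needed.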

36:05

OK, so the next part of this presentation is, what do we recommend? How do we do it right? A lot of you are probably on this call because you’re looking to confidently assess and budget some sort of Cognos cleanup. Obviously, we see the numbers are probably largely skewed towards folks who want to clean up the report inventory for one reason or another.

36:33

Maybe a merger or acquisition, maybe the addition of a new data source.

36:38

There are so many reasons why, possibly including a migration of content from Cognos to some other platform.

36:46

So, we do enough of this work that not only have we built software, kind of repeatable solutions for it, but we also have a process.

36:55

And, you know, we’ll jump into marketing here just a bit, because what I want to describe is that being methodical and intentional when you’re working with all of this complexity is really key.

37:13

These are the things that really drive the confidence.

37:16

What do we do first, what do we do next?

37:20

Being able to answer those questions confidently really reduces the risk in your project.

37:26

Yeah, especially if you can trust the data that you’re getting.

37:30

So, we recommend doing some sort of an initial inventory assessment on your Cognos inventory, whether you do that by hand.

37:40

Some clients do that by hand, where they literally open each report and, you know, an analyst or an architect goes through and fills out a spreadsheet with check marks

37:53

to say, you know, how many reports hit this package, how many reports hit that package, and how many reports,

38:00

you know, have direct SQL queries back to the data source, and which data sources are they hitting?

38:07

We can certainly build that inventory by hand, you know, kind of clipboard-and-hardhat style.

38:13

We recommend trying to find ways to automate some or all of that.

38:17

The second part of the process is, you know, fundamentally important, because I haven’t met an organization yet that doesn’t need to pay attention to the budget.

38:30

So scoping is really your key.

38:33

to driving cost, effort and complexity, as in the combinatorial complexity conversation we had earlier in the presentation.

38:43

Scope is really the key there.

38:46

You have to assess your inventory to understand what you can scope out.

38:51

So, make sure that you know what is business critical but, more importantly, what is not.

38:57

Make sure you know what is popular but, more importantly, what is not.

39:02

Because, as it turns out, the cream rises to the top as far as your Cognos inventory is concerned.

39:10

There are a few reports in your inventory that are really popular and really important.

39:15

So, you want to identify those, feel comfortable that you’ve got the full list of them, and then feel comfortable scoping out the rest: putting them on the back burner, moving them off to a later phase of the project, or even ignoring them altogether and letting them, you know, age out of the system. Scoping those out of a migration project is going to save you 90-plus percent of the cost.

39:42

If I scoped a project right now for manually converting the average, you know, roughly 5,000-report inventory,

39:56

just looking at the reports and doing all the work and all the engineering, if we did it by hand with no aid of automation and no process in place,

40:06

you know, we’re in the multiple millions, five-plus million dollars.

40:12

If you start to apply some of these processes, where you’re really trying to automate a lot of this inventory analysis, and then being what I’ll call, you know, almost ruthless or aggressive with your scoping to drive that cost out,

40:30

you’re going to reduce the costs by a factor of 10, at least.

40:36

So, we’ll see those projects drop into the, you know, low hundreds of thousands of dollars, which is not unusual for a large migration project, and possibly even sub-$100,000 for a very low report inventory.
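As a rough back-of-the-envelope check on those numbers, here is a small Python sketch. It takes the round figures from the talk (about 5,000 reports and about five million dollars for a fully manual conversion) and assumes, purely for illustration, that cost scales linearly with the number of reports kept in scope. The speaker attributes the savings to both automation and scoping, so the 10 percent in-scope figure below is an assumption chosen to match the "factor of 10" remark, not a measured result.

```python
def cost_per_report(total_cost: int, report_count: int) -> int:
    """Average manual conversion cost per report, in whole dollars."""
    return total_cost // report_count


def scoped_cost(report_count: int, per_report: int, in_scope_percent: int) -> int:
    """Estimated cost when only a percentage of the inventory stays in scope.

    Assumes cost is linear in the number of in-scope reports, which is a
    simplification: real projects also carry fixed costs.
    """
    in_scope_reports = report_count * in_scope_percent // 100
    return in_scope_reports * per_report


if __name__ == "__main__":
    reports = 5_000            # round number from the talk
    manual_total = 5_000_000   # "five-plus million dollars", taken as 5M

    per_report = cost_per_report(manual_total, reports)
    estimate = scoped_cost(reports, per_report, in_scope_percent=10)

    print(f"per report: ${per_report:,}")      # $1,000
    print(f"scoped estimate: ${estimate:,}")   # $500,000
```

Under these assumptions the estimate lands at $500,000, which is in the same low-hundreds-of-thousands range the speaker describes.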

40:52

So, scoping is really key.

40:55

Then, of course, the next part of the process is the migration. Quite honestly, it really helps to have done them before, because you kind of know how to do them quickly.

41:05

So, you know, looking for people in your organization, or, folks that you want to work with, who’ve done it before, is a big boost there.

41:13

You know, the benefit of doing a migration with the aid of tools, like the analytic tool that we use, is that it allows you to understand that there are 32 reports here that are all highly similar. They all have the same package.

41:31

They all have roughly the same elements in them, and so now you get some advantage. You can maybe consolidate a few of the reports.

41:39

You can certainly move through them much more quickly.

41:42

Now, as you’re redeveloping or validating, you’re doing similar work and it speeds things up. And every time you can shave 10 minutes off an hour or 20 minutes off an hour, it’s a good thing in these projects.

41:55

Finally, a really key part that we find oftentimes gets neglected in these projects, you know, if we’re not involved in them, is the validation step. Once you’ve done all this work, right, you’ve looked at the inventory,

42:13

you’ve scoped out what you’re not going to,

42:16

you know, have in this particular project during this phase, and you’ve done your migration work,

42:22

it’s really key to have a methodology for validation.

42:25

And that report inventory really serves as kind of a guide or a milepost, for you to be able to do that.

42:32

And I’ve built this kind of graphic here to represent that you don’t do this just once. What you want to do,

42:39

again, like that quadrant suggests, is choose some of your inventory, assess, scope, migrate and validate, then move through it again.

42:48

Then you’ll notice here that we have our standard project methodology modules down here. In every one of these projects, it’s critically important to consider the business requirements and understand the priorities of not only the group doing the technical work itself but also, of course, your consumers and folks in the business unit.

43:13

It’s fundamentally important to understand your data models.

43:18

While doing this, building a working prototype is never a bad idea, but at the very least, make sure that you’re not just jumping right into the high-complexity modeling right away. And then the rest, of course.

43:34

So, what does it look like in the real-world?

43:37

So, I’m going to show you some examples of stuff that we do with our clients.

43:41

Um, this is an example.

43:45

A final report for, you know, the GO Sales company, right?

43:51

So, this would be one of the slides you would get in an assessment.

43:56

We have, you know, three or four offerings that we work through with clients. One of them is kind of an initial consultation, and then we have a standard assessment.

44:08

The assessment uses our software to look at the inventory, and then we can automatically summarize that data and present it back.

44:16

So this is a slide, kind of a mock-up slide, from a final report.

44:21

And you can see here that what we’re doing is really trying to take advantage of this relationship between complexity and popularity, and understand, you know, which areas are similar and where they live in the quadrants.

44:35

And to start to, you know, build the confidence level that we’re making the right decisions around planning our project itself.

44:46

Here’s another, a little bit deeper, part of the analysis.

44:50

So we’re looking at, you know, tables in relationship to their packages, and trying to understand, across the inventory of packages, what kind of DNA these packages have. Is there a lot of duplication? For instance, you know, we can start to see some duplication in these two gray bars here. I like to think of this as the DNA chart.

45:14

It kind of looks like a PCR test result, but you can see that those green bars are very similar, right?

45:22

These packages are hitting the same tables all the way kind of up and down the line there. And then you can also see kind of a well-distributed

45:31

set of packages here, where, you know, most of the packages are on the first bar there. Most of them are the same.

45:43

So that’s probably the parent package, and then it was probably copied and, you know, there’s one version with one set of tables and another version with another set of tables.

45:52

So that’s more of an evenly distributed set of packages, and trying to understand the character or the profile of

45:57

these packages really helps before you dive into, you know, just reverse engineering them at the code level. Of course, we’re going to do standard analysis of reports. So, you know, in our assessment, one of the things that we’re really trying to do is take inventory for you, so to speak, and, you know, kind of give you a report of that inventory at a summary level.
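The package comparison described a moment ago, where packages are lined up by the tables they hit to spot copies and near-copies, can be approximated with a simple set-overlap measure. The sketch below uses Jaccard similarity (shared tables divided by all tables across the two packages). That measure, the 0.8 threshold and the sample package and table names are all assumptions for illustration; the webinar does not say how the tool computes similarity.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tables two packages have in common (1.0 means identical sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def similar_packages(
    package_tables: dict[str, set[str]], threshold: float = 0.8
) -> list[tuple[str, str, float]]:
    """Return package pairs whose table overlap meets the threshold, best first."""
    pairs = []
    for left, right in combinations(sorted(package_tables), 2):
        score = jaccard(package_tables[left], package_tables[right])
        if score >= threshold:
            pairs.append((left, right, score))
    return sorted(pairs, key=lambda pair: -pair[2])


if __name__ == "__main__":
    # Hypothetical inventory: package name -> tables referenced by that package.
    packages = {
        "Sales": {"ORDERS", "CUSTOMER", "PRODUCT", "REGION"},
        "Sales (copy)": {"ORDERS", "CUSTOMER", "PRODUCT", "REGION"},
        "Sales EMEA": {"ORDERS", "CUSTOMER", "PRODUCT", "REGION", "CURRENCY"},
        "HR": {"EMPLOYEE", "POSITION"},
    }
    for left, right, score in similar_packages(packages):
        print(f"{left} ~ {right}: {score:.2f}")
```

Pairs scoring 1.0 are candidates for the parent-and-copy pattern the speaker describes; pairs just under 1.0 are the variants with one extra or one missing table.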

46:22

So you can see here many of the things that Peter already talked about, and you can get a sense of the total numbers, right?

46:30

So we have, you know, total report executions in this particular group over this timeframe was, you know, roughly about 40,000.

46:41

Then finally, report complexity, which is, quite honestly, where a lot of people focus.

46:46

Because the idea of report complexity is, I guess, where the rubber meets the road. If a report has to go from one place to the next, you need to understand how much work it’s going to take. Is it going to be one hour or 40 hours, is it going to be one or 80 hours?

47:02

And, understanding who’s going to be working on that?

47:05

And, you know, what skills are needed, how much time is needed? How many people are needed?

47:13

And so, building those complexity scores is really fundamental, again, leading back to the project planning exercise. You know, this will be used quite a bit in the scoping part of the project.
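To make the idea of a complexity score concrete, here is one possible shape for it in Python: count a few structural elements of a report, weight them, and bucket the total. The elements chosen (queries, filters, visualizations, hand-written SQL), every weight and both band boundaries are hypothetical values picked for this example. The webinar does not disclose how the actual scores are calculated.

```python
from dataclasses import dataclass


@dataclass
class ReportProfile:
    name: str
    queries: int            # number of queries behind the report
    filters: int            # number of filters applied
    visualizations: int     # lists, crosstabs, charts and similar objects
    uses_native_sql: bool   # report contains hand-written SQL


# Hypothetical weights: tune these against reports you have already migrated.
WEIGHTS = {"queries": 3, "filters": 1, "visualizations": 2, "native_sql": 10}

# Hypothetical band boundaries for the total score.
LOW_BELOW = 15
MEDIUM_BELOW = 40


def complexity_score(report: ReportProfile) -> int:
    """Weighted sum of the structural elements in a report."""
    score = (
        WEIGHTS["queries"] * report.queries
        + WEIGHTS["filters"] * report.filters
        + WEIGHTS["visualizations"] * report.visualizations
    )
    if report.uses_native_sql:
        score += WEIGHTS["native_sql"]
    return score


def complexity_band(score: int) -> str:
    """Bucket a score into low, medium or high."""
    if score < LOW_BELOW:
        return "low"
    if score < MEDIUM_BELOW:
        return "medium"
    return "high"


if __name__ == "__main__":
    reports = [
        ReportProfile("Simple list", queries=1, filters=2, visualizations=1, uses_native_sql=False),
        ReportProfile("Regional crosstab", queries=3, filters=5, visualizations=3, uses_native_sql=False),
        ReportProfile("Executive dashboard", queries=8, filters=10, visualizations=6, uses_native_sql=True),
    ]
    for report in reports:
        score = complexity_score(report)
        print(report.name, score, complexity_band(score))
```

A band like this is what would feed the one-hour versus 40-hour question: once a handful of reports in each band have been converted, the observed effort per band can replace guesswork in the scoping step.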

47:30

So, this is just a kind of a sample of one of our assessments.

47:35

And then, of course, at the end of the assessment, we’ll evaluate and make some recommendations.

47:42

So, I’ll pop back up, and this might be a good place for us to pause, I think, and maybe invite Steve and Peter back. I’m not sure if we’ve gotten a number of questions.

48:00

Any questions in the panel or what do we have here?

48:04

I can take a quick look. Yes, so, we’ve got just a couple of questions in the panel. So, I think what I’ll do is have us just do a quick overview here and give everyone a chance to type in any additional questions, and then at the tail end, we can do a quick Q&A. And there was a specific question here from Jennifer that I wanted to address with the group.

48:29

So let’s just go through a quick overview, if you could get to the next slide.

48:35

Just so everybody’s aware, you can find additional resources on our website at Senturus.com.

48:41

We’ve been committed to sharing our BI expertise for over two decades now. And you can find archives of past webinars, blog posts, product reviews, really just a wealth of information on our website. So check that out.

48:57

Also, wanted to let you know about an upcoming webinar later this month. We’ll be doing a webinar on good data models leading to great BI.

49:09

If you could jump to the next slide, we’ve got a link there, and so that webinar will be coming up here at the end of June. We hope you’ll join us for that. If you’ve been in the BI world for any length of time, you know, excuse me, what a difference a good data model makes, and how having a bad data model can impact usability, can impact performance and ultimately can restrict end-user adoption. So, good data models lead to great BI, and we’d love to have you join us for that webinar on the 23rd.

49:44

Beyond that, a little background on Senturus: we concentrate on BI modernizations and migrations across the entire BI stack. We work a great deal, of course, in hybrid environments nowadays, and we’d be happy to chat with you and help out with any problems or questions you may have about your own BI modernizations or migrations.

50:07

We’ve been in business for over 21 years now, thousands of clients, thousands of projects. Many repeat clients.

50:19

We have a number of clients who’ve been with us for pretty much as long as the company has been in business and we’d love to help you out as well.

50:30

Before we go into the Q&A, one last thing: we are looking for experienced professionals. So, if you have an interest in working in the consulting world, we’re currently looking for a Microsoft BI consultant and also a senior data warehouse and Cognos consultant. If those happen to fit with your skill set, please reach out to us at jobs@senturus.com.

50:54

You can also visit our website and check out the Careers page.

50:59

And with that, let’s go ahead and jump back into the Q&A. The one thing I wanted to address was that Jennifer asked a question earlier about whether our tool works with the C10 content store, and it does. We support both Cognos 10 and Cognos 11 content stores.

51:16

Even though Cognos 10 has been sunsetted for a number of years now, we realize that a lot of organizations do still have legacy Cognos 10. So we do support those. And it looks like there’s one more question that came in from Jennifer. I haven’t read it through yet. When assessing complexity, do you factor in render variables? This is a detailed technical question, so I don’t know the answer to that offhand. Peter, Scott, I don’t know if you guys know; we might have to go back to our technical team and get back to you later.

51:54

I know that the second part of the question asks about the number of pages rendered, and I know that we can certainly talk about the pages.

52:07

Peter, maybe you could describe a little bit about the elements that we track.

52:13

So, we definitely would have to get back to the technical team as it relates to the actual render variables. In terms of the content that’s stored, the main focus has been on the page itself. Most of the analysis has been on looking at, you know, like you said, the charts, the crosstabs, the list reports or the various visualizations that are out there,

52:42

as well as, you know, the makeup of how that final output was created: how many queries were created to support that final output, as well as what filters were used and, you know, where the data came from at the source.

52:59

I know we grab a lot of content from the content store in the reports themselves.

53:03

So we’ll have to double-check with the development team as to the render variables that are in that content store as well.

53:13

Yeah, one of the things I can share as well.

53:16

Jennifer, is, you know, without going too far afield here,

53:23

Let me grab my share really quick.

53:28

No going rogue.

53:32

Make everybody nervous.

53:36

What was that?

53:38

So, on our Senturus website, if you go to our products, I mentioned that we have a number of solutions.

53:46

Um, Migration Assistant is primarily the solution that we’re using to wrap our arms around the inventory, collect all this data, and then produce a final report.

53:59

You can learn about it here. You can watch a demo.

54:01

But the reason I’m on the website specifically, is, we have a little bit of a mock up here.

54:07

So, you can actually see how it works.

54:10

Now, one of the things I’ll tell you, without doing a full, you know, product sales demo here, is that while we’ll create all of these reports on the fly, we actually have an assessment that does a summarized version of these reports. That takes about a week. So, if you engage us,

54:30

We can come in, wrap our arms around your Cognos data, as we said, and give you a report back.

54:37

You can also purchase a license for this product, and once it’s installed, which takes, you know, less than a day, you have access to not only the overview content and the data sources you’re looking at, you can also get down into the report detail level.

55:01

So, you know, this particular report might be, maybe, a medium complexity because of the number of queries encountered, etc.

55:10

Um, you can look at the executions right here. Of course, you get all the variability in them.

55:15

Really, probably, the relationships are one of the biggest areas of time saving when you’re trying to do a big migration, being able to deal with this. One of the things I’ll say about this report, I apologize here.

55:35

That was behind my control panel. Sorry, that took a second. One of the things about our Migration Assistant is we designed it very specifically to be two modules. It’s got a core data access engine

55:47

and it’s got an analysis function, and we intend, when we engage with clients, to create reports that are specific to you inside of, in this case, the Power BI package.

56:03

So, if you don’t see a report here, and you are curious about whether or not it can be created: do we have access to the data in the data model?

56:11

Is it something that we can produce? Please feel free to reach out. That’s, you know, work.

56:17

We’re data nerds and anytime we get to create a new report on call data, we’re happy to do so. So hopefully that helps a bit,

56:24

and explains a little bit more about where all that data winds up coming from.

56:35

So, we’ve got another question that came in here from Kristen a moment ago asking, does the Migration Assistant analyze the usage prior to installation of the product, or only from the installation forward? And I think I can go ahead and take that one. So, Kristen,

56:53

actually, even though we’ve only addressed the content store in the previous questions, the Migration Assistant does draw on both the content store and the Cognos audit database.

57:04

And so, as long as you have auditing enabled in your environment, Migration Assistant can access and analyze all of the usage data to the extent that it exists in your audit database. So, if you’ve had auditing running for the last two years, we can analyze your usage for the last two years right out of the box with Migration Assistant.
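As an illustration of what analyzing historical usage from audit data can look like, the Python sketch below counts executions per report inside a look-back window and lists reports that never ran. It works on an in-memory list of `(report_path, run_time)` pairs, which is an assumed, simplified shape for rows exported from an audit database. It does not reflect the real audit schema or how Migration Assistant reads it.

```python
from collections import Counter
from datetime import datetime, timedelta


def execution_counts(
    audit_rows: list[tuple[str, datetime]],
    as_of: datetime,
    window_days: int = 730,
) -> Counter:
    """Count report executions that fall inside the look-back window."""
    cutoff = as_of - timedelta(days=window_days)
    return Counter(path for path, run_time in audit_rows if cutoff <= run_time <= as_of)


def unused_reports(inventory: list[str], counts: Counter) -> list[str]:
    """Reports in the content inventory with no executions in the window."""
    return sorted(path for path in inventory if counts[path] == 0)


if __name__ == "__main__":
    as_of = datetime(2022, 6, 1)
    # Hypothetical audit export: one row per report execution.
    rows = [
        ("/Public Folders/Sales/Daily Sales", datetime(2022, 5, 30)),
        ("/Public Folders/Sales/Daily Sales", datetime(2022, 5, 31)),
        ("/Public Folders/Finance/Month End", datetime(2021, 12, 31)),
        ("/Public Folders/Finance/Old Ledger", datetime(2018, 1, 15)),  # outside window
    ]
    inventory = [
        "/Public Folders/Sales/Daily Sales",
        "/Public Folders/Finance/Month End",
        "/Public Folders/Finance/Old Ledger",
        "/Public Folders/HR/Headcount",
    ]
    counts = execution_counts(rows, as_of)
    print(counts.most_common())
    print(unused_reports(inventory, counts))
```

The window can only reach back as far as auditing has been enabled, which is the constraint the speaker points out: no audit history means no popularity data for that period.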

57:27

I think, Jennifer, you also suggested you thought there might be a combination of content store and audit data

57:33

in there, and you are correct.

57:40

So, with that, everyone, we’re coming up to the top of the hour here, so I think we are in a good spot to go ahead and wrap up.

57:55

Scott, if you’d go to the final slide, just some contact information. So for anybody who would like to get additional information, or if you have questions or need help with your own migrations and modernizations, you can always reach us on the web, by phone or by e-mail. We’re always happy to hear from you, always happy to chat. Scott also put a note into the webinar chat that goes straight to his calendar scheduling page. So, you can get some time with Scott very quickly and easily, and he is always happy to have a chat with you. So, again, thank you everybody for joining us today, and have a great rest of the day. I hope to see you on a future Senturus webinar.