Cognos content stores are famously stuffed with clutter. Valuable content is mixed in with decades of duplicative or unused items. When looking to migrate Cognos reports or streamline system performance, de-cluttering that pileup is key.

Determining what should be kept, tossed or simplified requires an in-depth understanding of the content. But native Cognos tools simply do not provide a sufficiently thorough evaluation of reports and models. Nor do they reveal the underlying complexities that help to map content to the new platform or data source.

In this on-demand webinar learn how you can get a comprehensive, automatically generated inventory of Cognos content. We demo the Migration Assistant for Cognos and show how it quickly cuts through and catalogues content so you can

- Sort out valuable content from unnecessary bloat

- Dramatically reduce the content that needs to be migrated (by 97% for one client)

- Identify content impacted by data source changes

- Streamline the re-creation of content in a new platform

- Provide the insights needed to accurately budget

Presenters

Todd Schuman

Practice Lead, Installations, Upgrades and Optimization

Senturus, Inc.

Todd is a dyed-in-the-wool data nerd with 20+ years of analytics experience across multiple industries. BI tool multi-lingual, Todd is fluent in Cognos, Tableau and Power BI.

Peter Jogopulos

Senior Consultant

Senturus, Inc.

Peter is a seasoned data architect who is passionate about utilizing technology to design and implement solutions for clients.

Machine transcript

Thank you for joining us and welcome to the Senturus webinar series.

0:14

Today’s topic is, Automate a Cognos Content Inventory to Speed up Cleanups and Migrations.

0:27

Please feel free to use the GoToWebinar control panel to make this session interactive.

0:33

We’re usually able to respond to your questions while the webinar is in progress.

0:37

If we don’t reply immediately, we’ll try and cover it either in the Q&A session at the end of the presentation or via a written response document that we’ll post on Senturus.com.

0:51

The first question we usually get is, “Can I get a copy of the presentation?” The answer is absolutely. It’s available on Senturus.com.

0:58

If you go to the Resources tab and then the Knowledge Center, you should find today’s presentation along with dozens of other presentations.

1:08

Or you can also just click on the link that was posted to the GoToWebinar Control panel and pull it down from there.

1:15

Today’s agenda, we’ll cover some introductions.

1:18

Why are we here today? Discuss some challenges.

1:22

Review how to create an inventory of your Cognos content. We’ll do a live demo.

1:28

We’ll wrap up with a quick Senturus overview and discuss some additional resources, and then we’ll open it up to a live Q and A session.

1:37

Joining me today is my colleague, Senior Consultant Peter Jogopulos. Peter is a seasoned data architect who is very passionate about utilizing technology to design and implement solutions for clients.

1:51

I’m your host today, Todd Schuman, I run the install, upgrade and optimization practice at Senturus.

1:56

You may recognize me from box office smash hits such as Doctor Cognos and the Metadata of Madness and The Tableau Chainsaw Massacre.

2:04

Jokes aside, let’s get into it. We have a few polls to get a pulse on the audience today.

2:10

Poll number one. What are your goals for Cognos?

2:13

You need to change data sources, move to the cloud, migrate to a new platform.

2:18

You’re trying to clean up your Cognos environment, or something else not listed here.

2:24

Take a second, and go ahead and just do a quick vote.

2:40

OK, we got about 80% in.

2:57

Poll results.

3:00

I’m not seeing them.

3:02

OK, we’ve got about 85% wanting to clean up the Cognos environment, followed by a tie between changing data sources and migrating to a new BI platform at 35%. Moving to the cloud is at 25%, and then there’s other, which would be interesting if you want to put in the chat what other is.

3:22

Great. Thank you.

3:25

OK, got one more poll: How many Cognos reports does your organization have? 0 to 500, 500 to 1,000, 1,000 to 10,000, over 10,000, or maybe you don’t know, which is part of the reason why you’re here today?

3:47

Give it a couple more seconds. And, about 75% in.

3:56

OK, looks like majority of you, about 38% have, somewhere between 500 to 1000.

4:02

Right behind that.

4:03

is 1,000 to 10,000, and then, a little bit below that, we’ve got 0 to 500.

4:10

So, good amount of content out there in your Cognos environments.

4:16

Thank you for the feedback.

4:18

Let’s go get into today’s topic. The main question is, why are we here?

4:24

Migrations. Based on some of the feedback, most of you are involved in preparing for at least one of these types.

4:32

One of the most common ones is data source changes. You know, are you switching from one vendor to another: SQL to Oracle, Oracle to Snowflake?

4:40

The amount of databases available today is really something else based on what it looked like a couple of years ago. Maybe you’re moving from on prem SQL server to a cloud-based instance.

4:50

So, lots of different challenges in migrations from the database aspect of it. We’ve also got platform migrations.

5:01

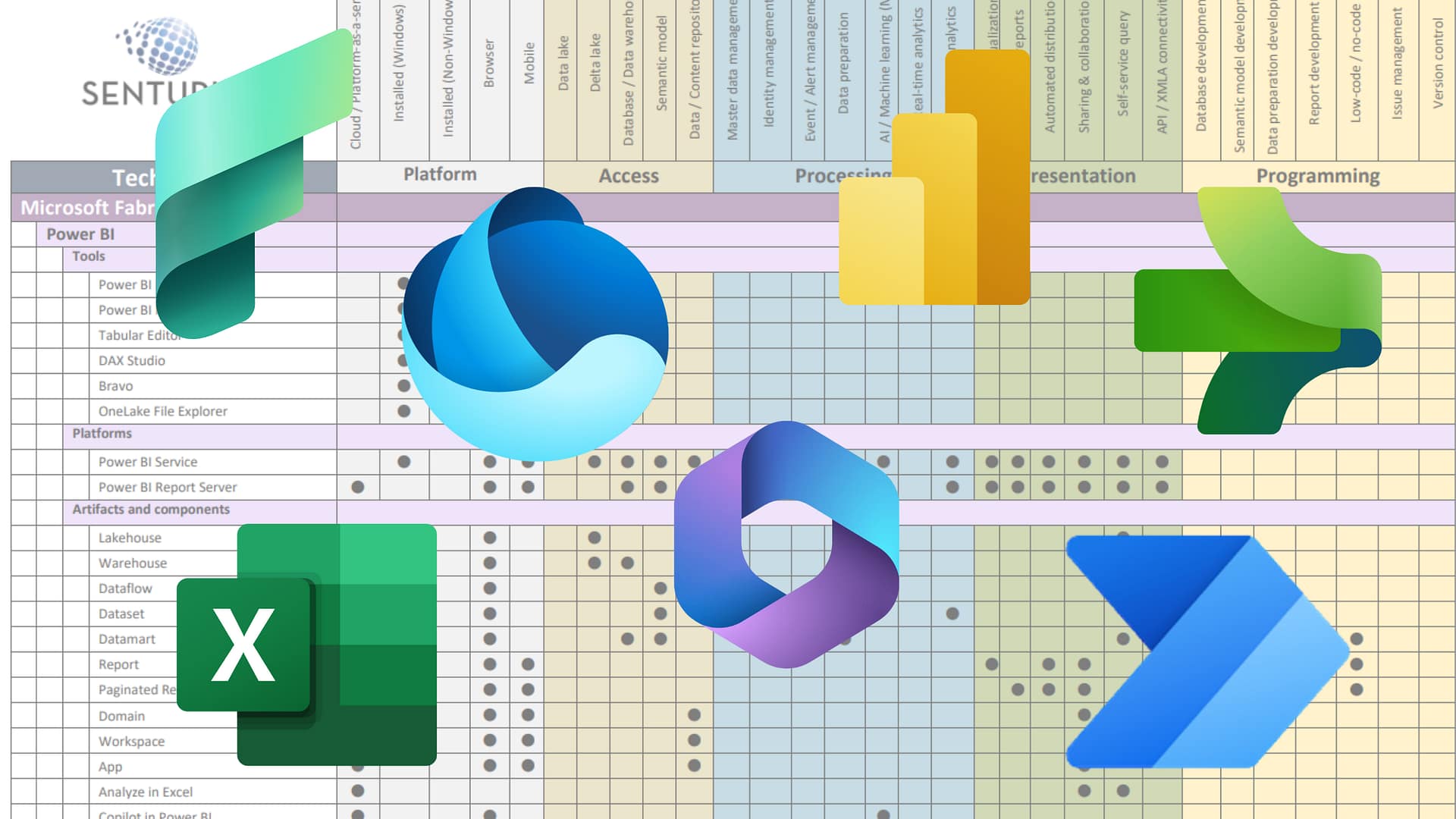

Maybe you’re moving from Cognos to Tableau or Power BI or, something else, or maybe you’re moving from one of those platforms to Cognos? Lots of tools available today, as well, each, with their own strengths and weaknesses.

5:13

Whenever you’re moving, you’re going to need to recreate models, reports, and make sure you capture that business logic that’s embedded in those old reports.

5:22

And then, finally, it looks like, a good amount of you today aren’t so much doing a migration, but more of an internal assessment cleanup.

5:29

If you’ve been using Cognos for a long time, I know we’ve got customers who have, you know, 10-plus, 15, 20 years of Cognos usage.

5:37

Over time, it’s difficult to get a grasp on what’s there. How many reports do I have? Are people using the reports? Are there multiple versions or duplicates floating around? How can I get this information out of Cognos and try to reduce my cost of ownership?

5:53

So, what are the challenges? No surprise here, migrations are inherently challenging.

5:58

The grass is always greener.

6:00

A new tool can offer the promise of better performance and new functionality that will hopefully enable analytics and drive better business outcomes. So, what is preventing us from just making those migrations?

6:13

When properly done, a good BI environment is going to shield the end user from complexity and inaccuracies of addressing the data directly from the database.

6:25

Things like well-defined business logic and terminology that’s presented through the metadata, that’s easy to use, well organized, and, you know, breaks out different subject areas.

6:37

You have a high level of trust in that data.

6:40

So, more often than not, end users are not really seeing the whole picture.

6:45

More of just the tip of the iceberg as shown here, and what’s actually going on behind the scenes is much more difficult and complex.

6:53

We’ve got cryptic data source systems, data warehouses and tables, multiple transformations and business logic that’s being built out, data models, and complex reports and dashboards as well on top of all that.

7:06

So, there’s a lot of hidden complexity that you may or may not be aware of that’s sort of driving the presentation layer of your BI.

7:16

Reporting and dashboarding.

7:21

So, while, you know, it’s complex in your own mind, you can see here various elements of a Cognos environment and how they add up.

7:29

So, this is a pretty common view you might encounter.

7:31

We’re mapping your data sources to your FM models to your reports.

7:35

Here, we’ve got multiple data sources in the different databases. We’ve got those that tie to the different

7:42

layers in your FM models: the physical layer, the business layer. We’ve got packages coming out of that. Now, we’ve got reports, which have their own objects, as well.

7:50

We’ve got queries, objects that are driven from the queries, and lots of different pages, so it’s a lot of moving pieces, as you can see.

8:00

And when you start putting all that together, looking at the visual reports to the business logic, it gets really messy very quickly. As you can see through some of these animations, trying to tie

8:11

where a database table maps to, which goes to this field, it gets pretty ugly pretty quickly.

8:20

So, the real question is, how can we unravel this Cognos content?

8:24

To scope and complete migrations, you need to basically figure out: What models do I have? What are the reports I have? Tie that together with usage.

8:34

As you can imagine, it’s extremely time consuming to go through this report by report manually. You have to kind of go in and break it down.

8:43

How many pages do I have, What’s on each page? What queries are driving those objects, What are the calculations and fields? Where did those map to? What filters do I have?

8:51

There’s just a lot of information in a single report, and again, based on the polls we saw, most of you have at least around 500, some of you are going over a thousand, and some are getting close to 10,000.

9:02

To manually go in and try to map all that information out, is going to be extremely difficult and time consuming.

9:07

To make matters even worse, there’s My Content, if you’ve got users who are storing stuff in their My Folders or My Content area.

9:13

It’s very difficult to access those.

9:16

You have to, you know, navigate through the namespace, whether that’s Active Directory or LDAP, and go into individual users’ folders and copy them out somewhere, and edit them.

9:27

It’s just a real pain and difficult to work with.

9:31

Then, on top of that, the Cognos audit data, hopefully you have that turned on. It does help things a lot. But even that is an incomplete picture of the data. It’s sort of an on-demand database, so say I’ve got a hundred reports, and only 20 of them have ever been run.

9:45

Those other 80 reports aren’t going to show up in that audit data, because they haven’t ever been touched, or an entry hasn’t ever been entered into that audit database.

9:52

So I’m not going to know which reports haven’t been run, because of the fact that they’re missing from my audit database.

9:58

So, again, there’s a lot of extra work and extra process and time invested in trying to unravel that Cognos content.
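The audit gap described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how the Migration Assistant works internally: the function name, the `report_path` field, and the sample paths are all hypothetical. It assumes you already have a full list of report paths from the content store and a list of audit rows, and it returns the reports that never appear in the audit data.

```python
def never_run_reports(inventory_paths, audit_rows):
    """Return inventoried report paths that have no row in the audit data.

    inventory_paths: every report path found in the content store (hypothetical input).
    audit_rows: dicts with a 'report_path' key, one per logged execution (hypothetical shape).
    """
    # The audit data only contains reports that were executed at least once.
    executed = {row["report_path"] for row in audit_rows}
    # Anything in the inventory but not in the audit data has never been run.
    return sorted(set(inventory_paths) - executed)


if __name__ == "__main__":
    inventory = ["/Team/Sales", "/Team/Inventory", "/Team/Margin"]
    audit = [{"report_path": "/Team/Sales"}, {"report_path": "/Team/Sales"}]
    print(never_run_reports(inventory, audit))
```

The point of the sketch is that the audit data alone cannot answer the question; it has to be compared against a complete inventory.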

10:10

Which, again, leads to these questions, you know, What do I need to clean up? How many reports do I have in total, you know, both within the team content and the My content? Do I need all of them?

10:19

Are any of these duplicates or very similar? You know, the sales report and the sales report that has, you know, underscore someone’s name on it and a date: is that the same report, or is it different? How can I clean these up? You know, does each report contain different elements: different queries, calculations, joins, prompts? There are lots of different things that each report can contain.

10:42

Also, what are the source databases? Do you know exactly which reports are referencing which tables? If you’ve got multiple databases, you know, how can you easily figure out which ones are mapping to

10:53

which databases, which vendors, tables, views?

10:55

So, it’s just an overwhelming process, and very difficult to kind of wrap your head around if you’re just getting started.

11:02

So, let’s talk about the process. Using a proven methodology, we can help greatly reduce the risk, cost, time, and effort.

11:14

So we see four major steps here. We’ve got the assessment stage, which we always recommend starts with an inventory.

11:23

You need to index all your reports, packages, jobs, schedules, etc.

11:28

Need to capture the lineage of all the objects.

11:31

Tie it back to the database tables and the fields.

11:34

We need to also capture the usage and the reports with none.

11:37

Once you’ve got that, you can kind of begin to build out your roadmap.

11:41

You know, do we want to target specific business units? Are we doing a database migration where we’re moving from Oracle to Teradata?

11:49

Let’s identify all the reports that are hitting these Oracle databases. Can you figure out which ones those are?

11:57

Do we want to focus on usage? These are the top 10 most run reports, we’re going to move these 10 first.

12:03

Or is it by users? Let’s address the sales team or the executive team and target those users and the reports they’re running. Let’s build those and move those over.

12:13

So, there are different ways to kind of target and filter down that inventory once you’ve built it.

12:19

Once you’ve got that list, again, you want to go ahead and optimize.

12:22

Can we get rid of some of these reports? What can we delete? What can be left behind?

12:27

Do I have a lot of duplicates? How similar are these other reports that have, you know, different naming conventions, but look like they’re the exact same report?

12:36

And just leverage a lot of common models, subject areas.

12:39

If I want to move over a bunch of reports, do I have to create 20 different models, or are they all kind of leveraging the same sort of tables? And can I just build one master model to leverage that, and address all those reports?

12:52

And then, finally, put it all together, execute the plan.

12:55

Build out your targets, apply the optimizations, leverage that inventory to get the specs, and the model, tables, relationships, all the logic and the models, and then finally, create those old reports in the new tool.

13:09

So, it’s a lot of work, it’s a long process.

13:13

And, as I mentioned, we want to start with an inventory.

13:16

The better upfront effort to assess and do this, the more success we’re going to have.

13:22

So, obviously, we want to create an inventory.

13:25

So how do we do that?

13:28

We’ve got two options. The manual option, which I’ve done in the past, is, again, very time consuming, as I mentioned.

13:34

For those of you who have fewer than 100 to 500 reports, it might be manageable. It’s going to be time consuming, as well.

13:40

But if you’re looking at it in the thousands or tens of thousands, it’s going to be extremely difficult to touch every single Cognos report and basically map that information back to the packages, to the models, and to the database tables. So, you’re looking at going into each report and package, and then also trying to leverage that audit data and sync it up. You’ve got report names, and how often they’re run, how many times each was run.

14:02

And it’s basically brought together in some sort of, you know, Excel workbook, or, you know, maybe you build it into your own database, something like that.

14:09

The other option is to automate that. And that’s what we’re going to talk about next. We’ve got a tool that we want to show you called the Migration Assistant.

14:16

You can run this tool. It’s going to go ahead and capture all that information from your Cognos content store database.

14:21

And it allows you to run some predefined reports that give you visibility into all aspects of your content.

14:31

So, what it does is, it’s going to programmatically decode and inventory your Cognos reports and packages and build that into a new database. You’re going to have a new set of tables in a database that all this information is going to get stored in.

14:44

It’s going to track all the details, including the data sources, the lineage, relationships, usage, all that.

14:50

All that stuff that can be difficult to kind of track down outside of a report and put it all together.

14:55

This is going to eliminate hundreds of manual clicks that are basically required to do this manually, to discover all these data fields, filters, calculations, etc.

15:06

How it helps: one, it’s going to help you eliminate unused and duplicate items.

15:09

You can instantly see all the reports that have never been run.

15:13

You’ll also have the ability to kind of see a confidence level of how similar certain reports are.

15:21

Like I’ve mentioned, you know, I’ve often seen people have 25 versions of the sales report. They’re almost always exactly the same, except someone has gone in and hard-coded a filter, or they have, you know, just tweaked something very minor, but underneath it all, it’s the same report. You only need to migrate that one time.

15:36

You don’t need to do it 25 times, so it’s going to help you identify those. It’s also going to allow you to plan and prioritize the high-priority items.
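To make the idea of a similarity score between reports concrete, here is a minimal sketch. It is an assumption for illustration only and is not the Migration Assistant’s actual method: it treats each report as a set of query item names and uses Jaccard similarity (shared items divided by all items) as a stand-in confidence level. The report names, item names, and the 0.8 threshold are hypothetical.

```python
from itertools import combinations


def jaccard(items_a, items_b):
    """Jaccard similarity of two collections of query item names (0.0 to 1.0)."""
    a, b = set(items_a), set(items_b)
    if not a and not b:
        return 1.0  # two empty reports are treated as identical
    return len(a & b) / len(a | b)


def similar_pairs(reports, threshold=0.8):
    """Return (name_1, name_2, score) for report pairs at or above the threshold.

    reports: dict mapping report name -> list of query item names (hypothetical shape).
    threshold: hypothetical cutoff for calling two reports likely duplicates.
    """
    pairs = []
    for (name_1, items_1), (name_2, items_2) in combinations(sorted(reports.items()), 2):
        score = jaccard(items_1, items_2)
        if score >= threshold:
            pairs.append((name_1, name_2, round(score, 2)))
    return pairs


if __name__ == "__main__":
    reports = {
        "Sales": ["Product", "Time", "Revenue"],
        "Sales_Bob_2021": ["Product", "Time", "Revenue"],
        "Inventory": ["Warehouse", "Stock"],
    }
    print(similar_pairs(reports))
```

A real comparison would likely also look at filters, calculations, and layout, since a hard-coded filter is exactly the kind of minor tweak that makes two reports differ only slightly.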

15:44

And you can target multiple ways, as I mentioned, usage by different user groups, different subject areas, tables. We can kind of group and organize that inventory in different ways.

15:55

We want to simplify and consolidate similar items and, most importantly, automate the manual work, reducing a lot of the errors that occur when you’re manually doing this.

16:05

You know, if you’re copying and pasting, you know, the strings from calculations in Framework Manager or in reports.

16:13

It’s very easy, you know, especially when you’re heads down, multiple hours a day, you know, to, miss something or copy and paste something incorrectly.

16:21

It’s going to systematically go ahead and grab that information for you. There’s a very low chance of the errors that just happen with manual work.

16:29

And then finally we have a bunch of dashboards and reports that are already prebuilt off this new target database that gets created that allow you to kind of review and get that information out that you need.

16:42

We call them recipes. One can kind of show you all the relationships and lineage. It also can kind of give you a report recipe. So if you don’t know anything about your Cognos report, it’s going to tell you everything you need to know, such as: you have, you know, a pie chart on page one.

16:57

And that pie chart is coming from this query, and it’s got product and time, and, you know, sales. Here’s what those three fields are in the database and the tables they’re in.

17:08

Here’s the relationships between the tables. You need to filter and calculate this.

17:11

It’s going to have all the information very easy to consume in a single click.

17:15

It also helps reduce what we call the need for unicorns, which are skill sets that are difficult to find and rare, because it’s someone who, you know, knows Cognos well enough to kind of figure out all the old information.

17:26

They also know a new tool, like Power BI, Tableau, what have you. People who have those skill sets, who are experts in both of those tools, are very rare, in our opinion.

17:36

So, this is going to help you reduce the need to kind of find people who have those skill sets. You know, they can focus on just the target tool, and they don’t have to really know much about the Cognos reports.

17:46

It’s all going to be presented to them in the Migration Assistant.

17:51

Another nice thing we’ve seen in our other examples of working with customers on this is compression factors of 90 to 95% of content. Again, for those of you who have thousands of reports, you’re going to probably find that a lot of them are going to be something that you don’t need, or are very common, or can be condensed into a single report. It’s very eye opening.

18:14

Once you kind of see some of the content in your environment, especially as a whole, across the whole thing, the team content and My Content and what’s out there, being able to get that view is valuable. That’s just difficult with the way Cognos is currently built, and the cryptic nature of the content store database.

18:32

So, that said, I’m going to turn it over to Peter who’s going to give you a live demo of the tool. You can get a little bit more insight as to how it all looks.

18:40

And then I’ll wrap things up a little bit and get to your questions.

18:48

Thank you, Todd.

18:59

Right. So, as Todd mentioned, you know, we extract the data from the content store and the audit database.

19:06

And once that information gets processed, one of the summary pages that we produce is this complexity analysis. And we immediately get to see metrics about our Cognos infrastructure.

19:21

Now, we have the number of reports, how many pages the reports generate, and the number of queries, query items, and filters that exist in all of these reports that are in our environment. The nice thing about this is, if you have an environment that has multiple content stores, you can easily see the content within each of those content stores. You can look at them holistically or individually.

19:48

You can also view the stats of the content that’s stored in, you know, My Folders.

19:55

With a simple click, yes or no, on the checkbox, it will go through and, now, I’m given a view of my content that is just in my team content store.

20:07

And the other thing that you can also take a look at is if you have other folder structures.

20:13

You can potentially create some simple Power BI functions to create, you know, other drop-downs, if you’re looking for, maybe, a QA folder, or you have another folder that you have put things in that are no longer being executed.

20:28

So, you can, you know, modify the output, you know, based upon the information that you have within your content store.

20:41

When we take a look at our report complexity groups, again, for the reports themselves, we go through a complexity scoring, you know, trying to take a look at the level of effort it’s going to take to recreate this report, especially if you’re migrating to another platform.

21:02

We can also see how many times each of these reports in the previous slide were executed.

21:07

So, you also get some visibility into that, those metrics as well.

21:13

In this report, complexity group, we organize these groups by their complexity, you know, in the high, medium, low, or complex bucket.

21:24

And the idea is to be able to give you an idea as to the level of effort it’s going to take to recreate all of this content.

21:33

And again, right now, this is just purely looking at the content, as it exists in the team content structure without any other subsequent passes through the data, to try and, you know, figure out what other content might be there. Again, this is just purely at what’s out there.

21:51

But we could, you know, start to layer in the reports that have been there, the reports that have been executed, and the reports that have been executed in the last six months, nine months, to try and get a cleaner focus as to what content is out there that is actively being utilized.

22:16

The next one that we’ve got is our Report Execution.

22:19

And again, this is more of a graphical view of the same content that was in the first Report Complexity tab.

22:28

The difference here is, you can see, we’ve also included this Report View execution count as part of the metrics.

22:37

And what this allows us to do is this: when a report is executed, if a report view is being executed, behind the scenes Cognos and the audit are just tracking that report view’s execution. Through our process, we go through and make the association back to that original report. So we know that, you know, all of the different report views that might exist are all surfacing from the same report.

23:06

So it gives you some insights into not having to recreate, you know, two reports or five reports, but just looking at that same one base report view that is being utilized as part of the execution.
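The roll-up of report view executions to their base report can be sketched as follows. This is a minimal illustration under assumptions, not the tool’s implementation: the `path` field, the `view_to_base` mapping, and the sample paths are hypothetical, and it assumes the view-to-report association has already been extracted from the content store.

```python
from collections import Counter


def rollup_executions(audit_rows, view_to_base):
    """Count executions per base report, crediting report views to their source report.

    audit_rows: dicts with a 'path' key, one per logged execution (hypothetical shape).
    view_to_base: dict mapping a report view path -> its base report path (hypothetical).
    """
    totals = Counter()
    for row in audit_rows:
        path = row["path"]
        # A plain report maps to itself; a report view maps to its base report.
        totals[view_to_base.get(path, path)] += 1
    return dict(totals)


if __name__ == "__main__":
    views = {"/My/Sales view East": "/Team/Sales", "/My/Sales view West": "/Team/Sales"}
    audit = [
        {"path": "/Team/Sales"},
        {"path": "/My/Sales view East"},
        {"path": "/My/Sales view West"},
        {"path": "/Team/Inventory"},
    ]
    print(rollup_executions(audit, views))
```

With the roll-up in place, three audit entries that look like three different objects are counted as three executions of one report that needs to be recreated once.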

23:24

So, the same thing that we did for our reports, we also do for our models.

23:31

And this one now allows us to focus on all of our FM packages that we have deployed and the components that were necessary to create each individual FM package.

23:43

We also, again, compute a complexity score on the models.

23:48

And this one, again, is very similar to the reports. You know, we’re looking at how many tables are contained within each of these FM packages, how many joins, how many relationships, how many filters, how many query subjects, trying to analyze the footprint of that model to come up with a complexity score.
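One simple way to picture a complexity score built from those counts is a weighted sum. The sketch below is purely illustrative: the weights and field names are hypothetical and are not the scoring that the Migration Assistant uses, which is not described in this session.

```python
# Hypothetical weights: joins and filters are assumed to cost more effort than tables.
HYPOTHETICAL_WEIGHTS = {
    "tables": 1.0,
    "joins": 2.0,
    "filters": 1.5,
    "query_subjects": 1.0,
}


def complexity_score(counts, weights=HYPOTHETICAL_WEIGHTS):
    """Weighted sum of model component counts; missing components count as zero."""
    return sum(weight * counts.get(name, 0) for name, weight in weights.items())


if __name__ == "__main__":
    package = {"tables": 4, "joins": 3, "filters": 2, "query_subjects": 5}
    print(complexity_score(package))
```

Whatever the actual formula, the value of a score like this is that it lets packages be ranked against each other rather than judged one at a time.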

24:09

And again, you can also see, you know, the number of data sources that are being utilized by the content, how many reports are being referenced, whether it’s a query or a report, and how many times those reports have been executed.

24:23

And how many users of those reports or that package exist. So, in this, you know, looking at that Go sell simple, again, simple data summary: two users have been executing reports 56 times.

24:38

So, it’ll give you some ideas as to, you know, the usability, you know, of packages that exist within, you know, within your infrastructure.

24:50

So, the next piece we do is, we take a look at this, this quadrant, and this, again, uses the complexity analysis. But this time, it’s looking at more of the, how complex are the models and how popular each of those models are based upon the number of report executions.

25:07

You know, ideally, when converting to a new platform, you want to start off with, you know, any package that has a low complexity, but has a high usage.

25:17

And this particular graph allows you to see very easily which packages fit that notion.

25:26

You know, and so, and this allows us, you know, if we’re doing a project, now we can start with this low complexity, high usage.

25:35

You know, and that’s to give us, you know, an easier development objective, but at the same time, giving us a high impact, you know, to our end user community, for being able to convert over content.
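The low complexity, high usage quadrant can be expressed as a simple filter. This is a sketch under assumptions: the field names, the sample packages, and both cutoff values are hypothetical, and where to draw the quadrant lines is a judgment call for each environment.

```python
def quick_wins(packages, max_complexity, min_executions):
    """Return names of packages in the low-complexity, high-usage quadrant.

    packages: list of dicts with 'name', 'complexity', 'executions' (hypothetical shape).
    max_complexity / min_executions: hypothetical cutoffs that define the quadrant.
    Results are ordered by execution count, most used first.
    """
    wins = [
        p for p in packages
        if p["complexity"] <= max_complexity and p["executions"] >= min_executions
    ]
    wins.sort(key=lambda p: p["executions"], reverse=True)
    return [p["name"] for p in wins]


if __name__ == "__main__":
    packages = [
        {"name": "Sales", "complexity": 12, "executions": 900},
        {"name": "Finance", "complexity": 85, "executions": 1200},
        {"name": "HR", "complexity": 10, "executions": 40},
        {"name": "Orders", "complexity": 20, "executions": 1500},
    ]
    print(quick_wins(packages, max_complexity=25, min_executions=500))
```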

25:49

And then the last piece that’s available through the initial summary book is just really our user summary.

25:55

And this is going to give you some insight into the user base that you have, and how frequently they are utilizing the content that has been deployed within your content store.

26:06

You know, and, again, you have the content stores, you can have multiple, you can select them. You have a date range, you know, just a simple slider to drag across to figure out, you know,

26:18

any given timeline of how far back you want to look to see who’s been executing. And this is really good for, you know, any license trimming, especially if you’re moving over to another platform and you’re looking to see how many licenses to buy.

26:33

You know, rather than just do a simple, you know, swap of, I have you know 100 Cognos licenses, I need, you know, 100 new licenses.

26:41

This can actually let you see how many are actively using it and be able to, you know, just, say, save some funds as you move to the new platform.
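The license point above boils down to counting distinct users who actually ran something within a chosen date range. Here is a minimal sketch, assuming audit rows with hypothetical `user` and `run_date` fields; the sample names and dates are made up for illustration.

```python
from datetime import date


def active_users(audit_rows, start, end):
    """Return the set of users with at least one execution between start and end, inclusive.

    audit_rows: dicts with 'user' and 'run_date' (a datetime.date) keys (hypothetical shape).
    """
    return {row["user"] for row in audit_rows if start <= row["run_date"] <= end}


if __name__ == "__main__":
    audit = [
        {"user": "asmith", "run_date": date(2022, 9, 1)},
        {"user": "asmith", "run_date": date(2022, 9, 15)},
        {"user": "bjones", "run_date": date(2021, 1, 10)},
    ]
    recent = active_users(audit, date(2022, 4, 1), date(2022, 10, 1))
    print(len(recent), sorted(recent))
```

Comparing the size of that set with the number of licenses currently owned gives a starting point for how many seats are really needed on the new platform.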

26:55

The next workbook that we talk about is getting down into more details, you know, for each of the migrations. And, you know, the first one was looking at reports and packages sort of independently with some execution counts, but now we start to get into the details. And this is where, in this particular overview, we can simply select any given package, and it’s going to tell us, you know, how many reports are out there, the different data sources that exist, and the tables that the information is coming from.

27:31

And what type of data source we’re using.

27:34

Whether it’s SQL Server, Oracle, ODBC, Whatever type of connection we’re using, as well as within the FM package itself, you know, what namespace is this information being pulled from, you know, the query subjects that are being used and the tables that are being used as part of this particular package.

27:57

When we shift over to the Reports tab, you know, we start to get a deeper dive into each of the reports that exist.

28:06

And so in this particular one, I can see, for just one particular report, we’ve got 11 queries that are out there. We have 11 pages of data.

28:16

We have 33 query items, some filters.

28:21

And the nice part about this is this is all interactive.

28:24

I can simply click on a particular container that’s out there.

28:28

And it’s going to tell me what pages it’s located on, what queries are needed in order to produce the output, and what query items are also being utilized as part of that output. So, this gives you that recipe, as Todd was saying, for being able to recreate the report. So, you have all of the metadata that’s coming from the package and where you can find it within the package.

28:57

Any calculations that are being done on the report itself come up in a clear, concise view, as well as being able to see any of the filters that are being applied, you know, to any of the given queries.

29:13

And, again, the tables that are being utilized as part of this particular reporting output.

29:23

And the next tab, again, is still focusing on executions.

29:29

But now, it’s the intersection that we can see for each particular package, we can see the number of reports that are being executed.

29:37

When were they last executed, how many executions and how many users are using them?

29:42

And this allows us to, you know, start that refinement of what is our true content that needs to be converted.

29:50

Now, with the reports that haven’t been executed, you can see the content that’s there and how many are in that particular category.

29:59

And again, using the date range, you can start to navigate, to make it, you know, six months ago, nine months ago, a year ago, to be able to see what is actively being executed.

30:11

Then, the final piece that we have, as part of the base, is really dealing with the relationships that exist within the actual package itself.

30:23

And being able to take a look at the individual database tables that are part of the FM model, being able to see and click in on any given table, and being able to see all of the relationships that exist.

30:39

What tables does it join to, or what tables are joined from this one particular table?

30:44

So it gives you that insight to be able to recreate an FM package without physically having to have that package up and go through it line by line, and trace it back and forth.

30:57

This gives you a much cleaner approach to getting information about your package, about the tables that are being used, and about the relationships that exist.

31:12

And, with that, I’ll turn it back over to you, Todd.

31:25

OK, thanks, Peter let me grab this screen here.

31:38

OK, so, what’s next?

31:42

If you are interested in what you saw today, you can find more information about the Migration Assistant on our website, including the sample dashboards that you just saw, some video demos, and some case studies.

31:53

We’d also be happy to speak with you about your unique situation. If you have any questions, please reach out.

32:00

Also, additional resources from Senturus. We’ve got hundreds of free resources on our website, in our knowledge center.

32:08

We’ve been committed to sharing our BI expertise for over a decade now.

32:12

Just go to senturus.com/resources.

32:17

We’ve also got upcoming webinars.

32:20

Thursday, October 27th, we’ve got Dataprep with Power BI versus Cognos and Tableau and on November third, we have an Agile Analytics for Cloud Cost Management.

32:31

So make sure you register for those if you’re interested.

32:35

A little background on Senturus. We concentrate on BI modernizations and migrations across the entire BI stack.

32:42

We provide a full spectrum of BI services, training in Power BI, Cognos, Tableau, Python and Azure, and proprietary software to accelerate bimodal BI and migrations.

32:54

We particularly shine in hybrid BI environments.

32:58

We’ve been focused exclusively on business analytics for over 20 years.

33:02

Our team is large enough to meet all of your business analytic needs, yet small enough to provide personal attention.

33:11

We are also hiring.

33:12

If you’re interested in joining us, we’re looking to fill the following positions: Senior Microsoft BI Consultant and Managing Consultant.

33:20

Please e-mail us at jobs@senturus.com if you’re interested and check out the job descriptions on our website.

33:27

On the URL posted there.

33:31

And then finally, some Q and A, if you have any questions, go ahead and post them into the Q&A section of the GoToWebinar panel and we can go ahead and take a look at this.

33:43

As I said before, if we don’t get to your question today, we can always post the answers, along with the deck, on our website.

33:55

I don’t see any questions in the panel, so quiet crowd today.

34:02

Here’s a question: we’re using PowerPlay. Does the inventory work for PowerPlay?

34:08

That is a good question. I don’t know offhand. I don’t know if anyone on the line knows for sure.

34:17

If not, I will find out and post a response to that question just for future reference.

34:23

I know that tool is still around, but it’s being deprecated at some point. But let me check and see. I know we do get other legacy content, like Query Studio, in there.

34:33

So let me see if that’s also captured. I don’t personally use it in our sandbox environment, but I will find out.

34:47

Anyone else have any questions?

34:55

Just one quick point of clarification on that PowerPlay question, for Tina. I’m not sure, offhand, if the tool pulls PowerPlay Studio reports, but it definitely doesn’t handle the older standalone version. So if you’re using the old PowerPlay fat client, I would say the answer is no.

35:14

PowerPlay Studio, maybe, and as Todd said, we’ll have to check that out for you.

35:19

Yeah, and that kind of feeds into a question that just came in: are you using the content store database to build these metrics? And the answer is yes. So if the data is in the content store database, there’s a good chance we can get it out.

35:32

And if you’ve ever looked at those tables, essentially, you know, every single report, and the FM model itself, is XML code.

35:40

So basically we just kind of decode that.

35:43

So if you have it and it’s saved in your Cognos content store database, it’s going to be in there somewhere.

35:48

It might just be a little bit different logic or a different way the XML is structured, if it’s, you know, outside of the sort of standard reports and models and things that we typically work with.
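
To give a sense of what "decoding" an XML specification can look like, here is a minimal sketch. It assumes a heavily simplified, namespace-free report specification; real Cognos specifications are namespaced and far larger, and the `SPEC` sample, the `REF` pattern and the `referenced_items` helper are hypothetical illustrations rather than the tool's actual logic.

```python
import re
import xml.etree.ElementTree as ET

# Simplified, hypothetical report specification used only for illustration.
SPEC = """
<report>
  <queries>
    <query name="Query1">
      <selection>
        <dataItem name="Customer"><expression>[Sales].[CUSTOMER].[NAME]</expression></dataItem>
        <dataItem name="Revenue"><expression>total([Sales].[ORDER_DETAIL].[AMOUNT])</expression></dataItem>
      </selection>
    </query>
  </queries>
</report>
"""

# Matches three-part references such as [namespace].[query subject].[query item].
REF = re.compile(r"\[([^\]]+)\]\.\[([^\]]+)\]\.\[([^\]]+)\]")

def referenced_items(spec_xml):
    """Collect (query, data item, namespace, query subject, query item) rows from a spec."""
    root = ET.fromstring(spec_xml.strip())
    rows = []
    for query in root.iter("query"):
        for item in query.iter("dataItem"):
            expression = item.findtext("expression", default="")
            for namespace, subject, column in REF.findall(expression):
                rows.append((query.get("name"), item.get("name"), namespace, subject, column))
    return rows

rows = referenced_items(SPEC)
for row in rows:
    print(row)
```

Rows like these, one per referenced query item, are the kind of flat inventory that can then be loaded into a table and filtered by package, table or column.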

36:01

But, I will find out one way or the other.

36:04

Does this work for IBM Cloud? Unfortunately, no, because IBM Cognos Cloud is completely locked down. You don’t have access to the server or the database; they don’t give you access to that.

36:19

Scott, any additional insight on that?

36:24

Hey, with IBM Cloud there are a couple of ways to work around it. There is some export functionality where we can export and do an import.

36:36

It does require a little bit of customization on our end, but we can get some or most of the same metadata.

36:47

Another question: does this tool need to be on the Cognos server, or can it be on any desktop? It doesn’t have to be on the Cognos server, it can be anywhere.

36:53

It basically just needs to be in a place where it can set up a local SQL Server instance and write to it, and it needs to be able to read against your content store database and just run some scripts. So it’s nothing too substantial, just as long as it can read from your Cognos content store with, you know, some kind of database connection string, and then be able to write to a new SQL Server database.

37:23

Those are really the only main requirements, and we can get you more specs. If you’re interested, just shoot us a note offline. But yeah, it’s a pretty low-key setup as far as what you need to do to get this, you know, loaded.

37:44

One addition to that is, especially for the assessment and the summary reports, we can run those in our cloud as well, with backups of the content store and the audit database.

38:00

So we don’t actually need to put the Migration Assistant in the client environment in order to generate the reports. And then when the report comes back to the client, it comes back in the form of a Power BI Desktop package, so you don’t need an extra license for that. And all the data is contained in the report package itself, so the assessment is fairly portable on the way back.

38:32

And maybe this is a good time to just call out that, when we engage with clients, we have a kind of a number of different ways to engage.

38:39

But one of the ways that we engage is by doing a 1 to 3 week assessment.

38:45

And during that assessment, the first part of it is to ingest the metadata.

38:51

And then we analyze it. Peter is one of those folks who does a lot of analyzing the data, once it’s in the reports themselves, and then we present back to our clients what we found, and then deliver the Power BI package back to them.

39:07

So you do have the option, for portability, of basically having us generate the inventory for you and then deliver it back to you.

39:20

Thanks Scott.

39:21

Another question here about what happens when a Cognos upgrade is done. Do you have to upgrade the tool? The answer is no. Again, with an upgrade, typically, it’s going to upgrade the content store and some of the objects in there.

39:35

You can go ahead and just rerun the inventory process.

39:38

It’ll repopulate the data and you can get fresher results, but there’s nothing in the tool itself that needs to be upgraded. It’s more of just refreshing your content.

39:51

Question: in the case of duplicates of packages and objects, does this tool have the ability to delete a duplicate? So this tool isn’t going to make any changes to your content. It is strictly informational. We’re not touching anything in that Cognos content store database. It’s just to give you the information to decide on what you want to keep, delete, you know, decide to leave behind on a migration, etc.

40:16

But we definitely do not touch anything. In fact, it gets a read-only connection to your content store database, just to get information out.

40:23

We then create and populate our own tables, which drive the workbook that Peter was demoing a little while ago.

40:34

One of the other things I’ll add in here is that in very large organizations that have thousands or tens of thousands of reports, we may employ other automation tools in order to manage content.

40:49

So, if you want to break up the work into the discovery and design of your project, whether it’s a cleanup project or a migration project, you first need to run your inventory, collect all the information about all the reports and packages, then you design what it is that you intend to do.

41:08

Then you get to choose: are you going to do that by hand, are you going to do it in small phases, or would you like either to build some custom automation to

41:21

save yourself some time, basically, from clicking thousands and thousands of buttons? Or there are some tools on the market that will help you manage your Cognos content in bulk. Really, the design phase

41:34

we find to be the most critical. In the secondary phase you really have options, and that’s largely a function of: how many changes do you really need to make? How long would it take a person to do it by hand? And is it cost effective to possibly, you know, buy a small content automation tool that will help you make those changes automatically, if they can be?

41:56

So you get options at that second stage for automation as well.

42:03

We’ve done projects both ways.

42:07

One more question.

42:08

Would this product work for Tableau analysis, or is it Cognos specific? So, right now, it is Cognos specific.

42:15

We are looking into other ways to possibly build upon this.

42:19

But today it’s just the Cognos content itself that would be stripped out and presented to you in the workbooks that we demoed a little while ago.

42:30

Good question.

42:41

I noticed that Arjun asked some questions about licensing costs. There are a lot of options there. I put a link to my calendar in the chat.

42:52

Feel free to reach out to me if you’d like to talk about the different options. We’re pretty flexible with how we engage on this. You could certainly license it from us directly, but we think the real value comes in us collaborating on the use of the product. So we have an assessment that we can run, and those assessments start at about $10,995. That is our base price for the assessment, and with that, it will give you all the things that I described earlier. So you can get, basically, all the reports plus more, a database to query, and a platform to build custom reports into, for $10,000.

43:35

That’s kind of the starting place, and that content will be yours at the end of that assessment, as well as our analysis and recommendations.

43:58

All right, I don’t think there are any other questions coming in. I will leave it open for just a couple more minutes.

44:03

If you do have a question, go ahead and just put it in there, and we can follow up offline. But I wanted to thank everyone for joining us today.