Power BI datamarts promise to remove a bottleneck that has long put business users and IT at odds: the struggle by business users to get data from different sources into one place. Add to that the reliance on siloed data, the overuse of Excel and other flat files, and the long wait for IT to approve and schedule project requests. It all adds up to wasted effort, lost time and mistakes.

Power BI datamarts streamline this process and provide a host of benefits. Business users can explore data and create dashboards and reports without knowing a lot of code or involving IT. The tedium of data prep largely goes away, since much of the ETL work is automated. And because they are built on Azure SQL Database, Power BI datamarts provide flexibility and better performance. All this means analysts spend more time analyzing data.

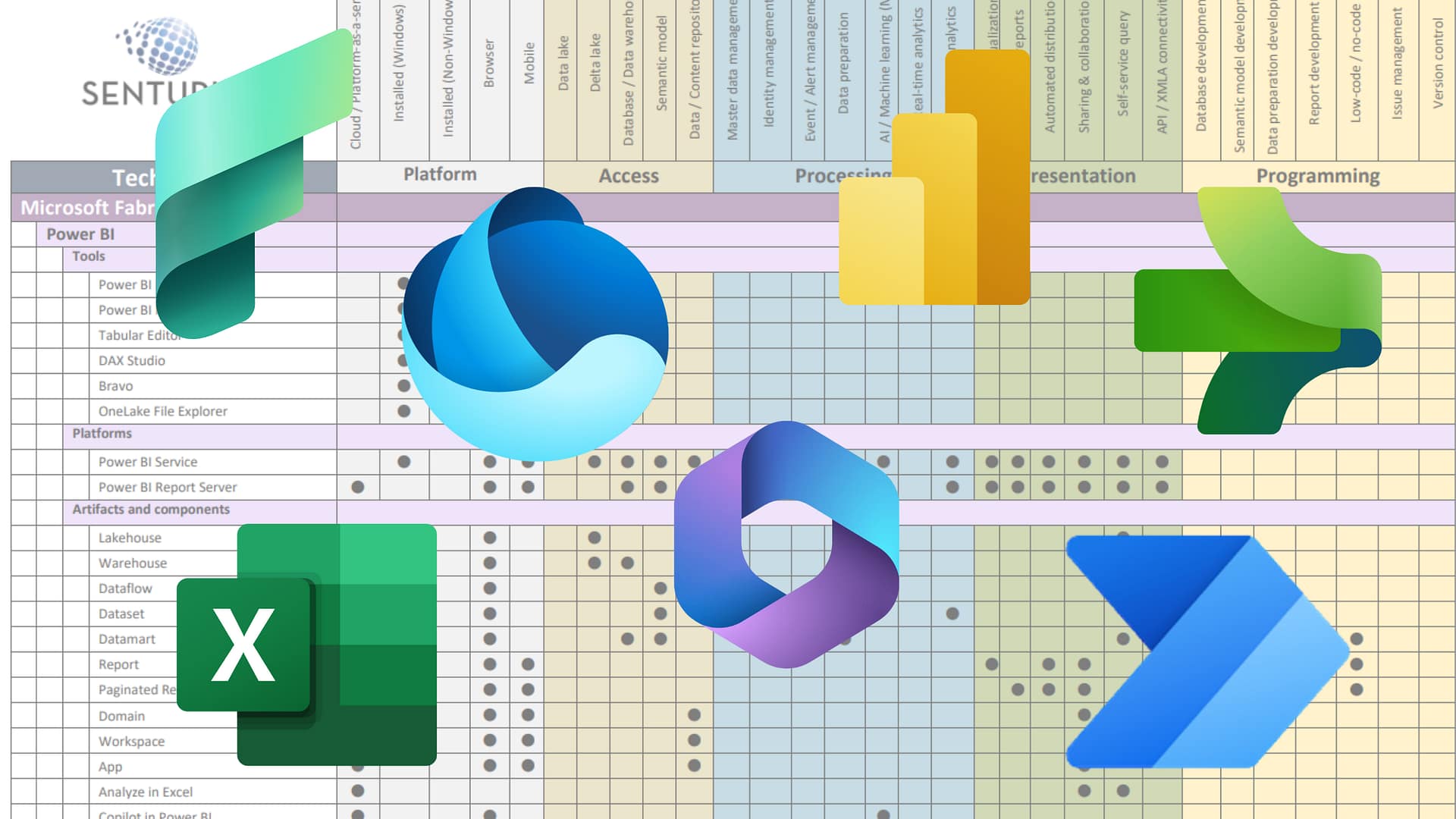

Watch our on-demand webinar for a deep exploration of this harmonious world. In exploring the benefits of Power BI datamarts, we cover the following:

- Introduction to Power BI datamarts

- Learning when to use a datamart

- Use cases for datamarts

- Comparing dataflows to datamarts

- Integrating datamarts and dataflows

- Analyzing datamarts (demo)

- Creating reports with datamarts (demo)

- Administering datamarts

Presenter

Pat Powers

BI Trainer and Consultant

Senturus, Inc.

Pat is one of our most popular presenters, regularly receiving high marks from participants for their subject matter knowledge, clarity of communication and ability to infuse. Pat has over 20 years of experience in data science, business intelligence and data analytics and is fluent across multiple BI platforms. They are a Tableau Certified Associate and well versed in Power BI. An expert in Cognos, Pat has product experience going back to version 6. Pat also has extensive experience in Actuate, Hyperion and Business Objects, and holds certifications in Java, Python, C++ and Microsoft SQL.

Machine transcript

0:11

Hello, and good morning, good afternoon or good evening, whatever time zone you are in. Welcome to this session of Senturus webinars. Today we are going to be talking about Power BI datamarts. Here’s today’s agenda.

0:28

We’re going to start out by telling you who the heck I am, for those of you who don’t know me. Then I’m going to give you an introduction to datamarts. We’re going to talk about when and how to use them. We’re going to talk about datamarts versus dataflows versus datasets, because that seems to be a question folks have: What the heck is the difference? Why do I need another thing that starts with “data”?

0:58

Then I’m going to demo this. I’m going to create a datamart and show you how to use it, where you find it and where different types of things are. We’ll take a look at how you handle administration on a dataset, on a datamart, excuse me, a datamart. And then we’ll wrap up with our Senturus overview, additional resources, Q&A, etc. So let’s get to that first topic: who is this person? That’s me.

1:27

Pat Powers. This is apparently who I am. I’m a BI consultant and trainer at Senturus, Inc. I’ve been doing this quite a long time: 27 years of business intelligence, data analytics and data warehousing. I teach our classes on Power BI, Tableau and Cognos, and I have multiple certifications in all those products. I actually just re-upped my Cognos one earlier this year on a couple things.

1:55

I also have certifications in multiple programming languages: Java, C++, databases. I’m old. I’m tired. And I should warn you all that at the end of this webinar, I am officially on vacation for two weeks. So you get me in a great mood today, all right? You get me right in that mood. Aaron, thank you. You know, I’m grateful that you’re here.

2:24

Aaron, up there in the question pane, is saying they’re happy I’m here. I’m happy I’m here. Every day I get out of bed, I’m happy I am. Thank you all. I am taking a two-week cross-country trip through multiple states: photography, taking pictures across the country, visiting family, going to Reno, Vegas, Disneyland, going all the way from one end of the country to the other.

2:53

5,000 miles round trip. It’s going to be a beautiful time. All right, let’s get into the real stuff here. One other thing: since we’re doing introductions, you may notice that there are some other folks from Senturus in the chat window. Most specifically, I want you to meet Scott Felton, my sidekick. If there are things in this webinar that you would like more information on or want to talk to us about, you can have a

3:23

meeting with Scott, a 15-minute meeting. Scott, if you’d go ahead and paste that in there for everybody, I’d appreciate that. Scott’s going to paste the little chat-after-this-webinar link in the chat. Look, this is a big topic. This is an exciting topic, and if you want to talk to us about how you can integrate this into your world, please click on that link and follow up with Scott. Thank you, Scott, my wonderful sidekick.

3:53

Before we go on, let’s do a poll. Today we’re talking about how you store data and how you get your datasets. How do you feed your datasets? So we would like to know from you: how are you currently feeding your datasets? I’m going to launch this poll as soon as I figure out where the heck my mouse is. There it is. And so far, the one that I thought would come out on top is coming out on top.

4:20

My guesstimate is that we’re going to see a big chunk of you using on-premises database connections and files, right? That’s my prediction. And so far my prediction is holding. I’m going to let this run for a minute. Basically, what we’re seeing is 83% of you are using on-premises databases. All right, we got it: 85% responded.

4:50

So as you can see (hopefully that’s showing the way I want it to), 84% of you are using on-premises database connections and 65% of you are using files, and that is one of the big things we’re going to talk about today: 65% of you are using files. To the 15% who are already using datamarts: why are you here?

5:18

Do you just like the sound of my voice? Do you just like coming and talking to me? I’m not complaining, thank you. But you probably already know all this; you probably know more than me. The cloud-based and the on-prem folks, you’re in the same ballpark. You guys are together. It’s the file-based folks who are going to get a lot out of this. All right.

5:43

Thank you all for taking the time to respond to that. So what the heck are datamarts? Let’s do a quick introduction here. One of the hurdles that we see a lot, and frankly probably 65% of you have as well, is: how do we move towards self-service? We hear this all the time. Look, self-service:

6:12

It’s the buzzword of the ’20s, right? It was the buzzword of the ’10s, but it’s becoming even more so in the ’20s. You know, these roaring ’20s: self-service. 65% of you are using files to load your datasets. That works great for business users. That makes business users very happy, because they can take

6:37

That file, or that Access database Phil created in 1993 that’s been sitting under Phil’s desk, and go right to work. That’s awesome. Unless you’re in IT. Because if you’re in IT, you’re like, whoa, wait a minute, who vetted this data? Who’s managing this data? Who’s backing it up?

7:06

How do we know that this data is any good? I’m not just going to let you go out there and build reports on data that I’ve never seen before and that isn’t formally approved, right? Does that sound like something most of you have experienced, or at least an issue that’s come up once? Yeah, see, this is where you say things in the chat window, or, you know, you chit-chat with me. It’s okay. It’s a pretty common thing. Business users want access.

7:37

IT wants control. Well, datamarts help us bridge that gap. Datamarts give us a way of making both groups... I’m not going to say happy, because, you know, you’re at work. Work is a four-letter word. Nobody’s ever really happy. But it’s a way to meet the needs

8:07

Of both of those groups. Now, datamarts have been in preview for quite some time. Last year, in June I believe, was the first formal, published introduction of them. So they’ve been around for a while, and they are going to be in general release soon. Yay! They’ve worked out a lot of things with them. They’ve listened to the feedback.

8:34

Soon it’ll be in the general product. As a matter of fact, Microsoft’s site updated some of the articles as recently as yesterday, so they are on top of this. They are right there with it. Datamarts basically allow you to take all those self-service data sources, web-based files, Phil’s Access database...

9:04

Load them up into Power BI. Then what happens is the data gets pushed into a fully managed database that IT can have control over. You know, I’d better double-check real quick. Good, okay. There’s nobody named Phil on. I can blame everything on Phil, gang. There are no Phils connected to this meeting today. So everything’s Phil’s fault, right?

9:33

So this data, this local data, gets pushed into a datamart, where it can now be managed and handled. Yes, Andrew felt like the groundhog. It can be managed by IT. That means it can have governance, it can have security, it can have control.

10:01

It can have incremental refreshes. You know, does it ever bother any of you that we still base our weather on a groundhog? I’m just saying. So we can do all this, and everybody wins.

10:30

Hey, let’s hear it for Minnesota. I’ve got nothing but props for Minnesota these days. Okay. The biggest benefit is that datamarts give business users self-service in a way that still allows for data governance and manageability. And here is one of the best parts: you don’t need to be a rocket scientist.

10:58

I mean, if you are, that helps. It’s not going to hurt. But the data we’re putting into this datamart can actually be used in other places. We can now use this data for ad hoc analysis, and we’re going to see today that I can either write straight SQL queries

11:26

or use the built-in editor to do visual queries. Tigi, I don’t necessarily want to... Don’t hold me to this, because again, this is still a preview feature. Right now there’s no cost for using datamarts in terms of whether you have a Pro or Premium license, but that can change once this goes to release, okay? So I don’t want to...

11:51

So the question was whether you need a Premium license for incremental refreshes. Talking about datamarts in general, right now there are no licensing requirements. But until this moves into actual release, I don’t want to say anything wrong. OK, Alwin, your question about deploying reports and dashboards to different environments is actually something we’re going to touch on. The short answer to that is:

12:20

Deployment pipelines. You want to start looking at and using deployment pipelines for that. It’s built in, it’s there, it’s a very easy way, and it does integrate with datamarts and the things we’re talking about today. Aaron, we’re going to get into the difference a little more in depth, but a datamart is not a dataset, okay?

12:48

This is a way for you to get to some of that data you wouldn’t otherwise be able to reach. We don’t know where this is. This literally might be an Access database sitting under Phil’s desk. Okay. But hold on to that, Aaron; I’m going to get to that in a second. And as you can see in the chat window, Scott just

13:15

posted that the product manager, at Microsoft I’m assuming, refused to answer the pricing question. So Tigi, I’m sorry, I can’t confirm or deny what the licensing requirements are going to be. OK, everybody good? Everybody got their questions answered so far? That is true, Sandeep: deployment pipelines are a Premium feature.

13:46

Oh, all right. So, the benefits. And yes, it is included with Premium capacities and PPU (Premium Per User), but I don’t know what’s going to change with release. OK. The nice thing about this, and Aaron, this is where it can differ from what you’re potentially thinking of:

14:09

Because this becomes a managed database, performance tuning is automated. It’s built in. When we do incremental refreshes, there’s this thing known as proactive caching, which runs every certain number of minutes. When it detects changes, it takes care of a lot of this stuff.

14:30

Right. It’s all web-based. We don’t need any other tools. You know, next month, June, not May, because May is out for me, we’re going to be talking about something that requires Tabular Editor. So that’s a little different. Here we don’t need any other tools. We don’t need anything else. It’s all here. Okay. You’re quite welcome, Alan. I’ve told you guys, those of you who’ve been to these things before, like Cherry. Hi, Cherry! I haven’t seen you in a while. I’m just looking through the list, saying hi to people here.

15:00

I do try to answer these things as close to real time as I can, gang. So please, if I do miss something, ask it again. I try my darndest. And that’s right, it’s a G-rated presentation: “darndest.” When do you use these? There is a difference between when to use a datamart, a dataflow and a dataset. When do we use datamarts? Datamarts

15:28

are really for the self-service scenario. You’re in accounting, you’re in finance. You want to build your own data model. You want to build your own data collection. Gregory, that is an excellent question. Gregory just asked: do you have to have a data warehouse in place as a prerequisite? No. That’s one of the beauties of this.

15:54

When I do the demo here in a minute, I’m going to show you the different sources you can literally connect to. So with a datamart, maybe you don’t need to build reports. Maybe you need to do some ad hoc querying of data; maybe you need to write some SQL.

16:23

Maybe you’ve already written SQL and you want to be able to use it, share it and do more with it. This is what a datamart gives you: a self-service, fully managed SQL database. You designate a single store, so you can use it from Excel or Power BI. It reduces our infrastructure needs. You can connect to it with SSMS.
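To make the ad hoc SQL idea concrete, here is a minimal Python sketch. It uses an in-memory SQLite database as a stand-in for the datamart’s SQL endpoint (an assumption for illustration; a real datamart is queried with T-SQL through that endpoint), and the `product` and `sales` tables and their rows are hypothetical, loosely modeled on Adventure Works. The query itself is the kind of plain aggregate SQL you might type into SSMS.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory stand-in for a datamart with two hypothetical tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE product (product_key INTEGER PRIMARY KEY, category TEXT);
        CREATE TABLE sales (order_id INTEGER, product_key INTEGER, amount REAL);
        INSERT INTO product VALUES (1, 'Bikes'), (2, 'Accessories');
        INSERT INTO sales VALUES (100, 1, 1200.0), (101, 2, 35.5), (102, 1, 800.0);
        """
    )
    return conn


def sales_by_category(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Ad hoc query: total sales amount per product category, largest first."""
    query = """
        SELECT p.category, SUM(s.amount) AS total_sales
        FROM sales AS s
        JOIN product AS p ON p.product_key = s.product_key
        GROUP BY p.category
        ORDER BY total_sales DESC
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    conn = build_demo_db()
    for category, total in sales_by_category(conn):
        print(category, total)
    conn.close()
```

The SELECT sticks to standard joins and aggregation, so the same text should also be valid T-SQL against a real SQL endpoint, assuming tables with these (hypothetical) names exist there.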

16:52

You can connect to it with all sorts of things. Oh, I think we lost Andrea. We’ve got full end-to-end modeling on this. We can do modeling; we can do data transformations on this. So we’ve got a lot of things we can do here. Here’s part of the list, not even all of it.

17:21

But here is part of the list of all the different source types you can connect to. All right, look at these data sources, gang. So Gregory, back to your question: do you have to have a data warehouse in place? No. Look at this: Google Analytics, OData, web pages,

17:51

SharePoint folders: all of these can be sources for a datamart. Now I do want to pause for a second; I’m bringing this up based on Gregory’s question. I think there are probably a lot of you out there who think in database terms: data warehouse, data mart, like that. This is different from a database data mart, sort of.

18:21

Basically, a data mart in the database world is something that’s sliced by line of business and/or time. These are datamarts in the Power BI sense, and they are fed from these many different sources. James just asked if you can use Cognos Framework Manager.

18:42

Not built in, that I’m aware of, but I’m going to throw that to my folks to check. We do have a connector product that connects these different tools, so that may be something it could connect to in the future. And you’re absolutely welcome, Gregory. Pretty sure Snowflake’s on that list if I scroll down.

19:11

Yep, Snowflake’s right there: third row, fifth or sixth column, you’ll see Snowflake is there and available as a connection. And James, what Scott just put in the window is the link to our connector. So you may want to take a look at our connector, see what we can do with it and take it from there to use your Cognos Framework Manager packages inside Power BI and Tableau, connecting all these products together.

19:48

Mark, same thing. Take a look at that connector and talk with Scott. We are constantly adding features to the connector at people’s request, so feel free.

20:06

Robert, thank you. That would be one way to do it. Robert put a comment in there that you can convert the XML from FM (Framework Manager) into JSON and bring the JSON in. Fair enough. A couple extra steps, but definitely one way to do it. All right. Alex, you know what? I don’t know. I would have to actually look to see what the difference is between dataflow and dataflow legacy.
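Robert’s workaround (export the Framework Manager model as XML, convert it to JSON, then bring the JSON in) can be sketched in a few lines of Python. The XML below is a deliberately simplified, hypothetical shape with `querySubject` and `queryItem` elements; a real FM model file is considerably more complex and uses XML namespaces, so treat every element and attribute name here as a placeholder to adapt.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified stand-in for a Framework Manager model export.
SAMPLE_XML = """
<project name="Sales">
  <querySubject name="Orders">
    <queryItem name="OrderID" datatype="int"/>
    <queryItem name="Amount" datatype="decimal"/>
  </querySubject>
</project>
"""


def fm_xml_to_json(xml_text: str) -> str:
    """Flatten query subjects and their query items into a JSON document."""
    root = ET.fromstring(xml_text.strip())
    model = {
        "project": root.get("name"),
        "querySubjects": [
            {
                "name": subject.get("name"),
                "columns": [
                    {"name": item.get("name"), "dataType": item.get("datatype")}
                    for item in subject.findall("queryItem")
                ],
            }
            for subject in root.findall("querySubject")  # direct children only
        ],
    }
    return json.dumps(model, indent=2)


if __name__ == "__main__":
    print(fm_xml_to_json(SAMPLE_XML))
```

The resulting JSON file is something Power BI can ingest as a source; how much of the original model (joins, security, calculations) survives depends entirely on what you choose to map.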

20:34

I’m not going to lie. I don’t lie, right? I don’t have an answer for that one. But as I said, we will do a written version of these questions and answers, and Alex, if I can find a suitable answer, I’ll make sure it goes in the written version when I get back from vacation. All right, one of the things that does come up is: where do you model?

21:04

You can model either upstream or in the tool, from the Model view. This gives you the exact same interface you would have if you were doing this in Power BI Desktop: you do a Get Data, go to the Model view tab and do all your modeling there. If you have a solid data warehouse or something like it in place, you’re not going to do a whole lot.

21:32

You won’t have to do a whole lot of modeling, but you can model inside the datamart once the data has been retrieved. So, datamarts and dataflows. OK, first off, let’s understand what these two things are. And let’s take this off the table: a dataset is what we would use.

22:01

To build our reports, build our dashboards, build this, build that. Datamarts produce datasets. The really interesting comparison is between a dataflow and a datamart. Dataflows give us ETL code. Dataflows give us a way of exposing our data into our data lake.

22:28

Dataflows are used by Power BI to put data into a datamart; datamarts are storage repositories. They hold the data from all the different sources I just showed you. Thank you, Andrew. I wish I could see you in Idaho, but I’m going to be too far away.

22:54

Through the SQL endpoint, we can also get to datamarts and use them with raw SQL. This way, even if you don’t have access to Power BI, you can still get to this data, because it becomes a connectable database. You can connect to it with SSMS. You can connect to it with any kind of SQL editing tool.
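Because the datamart is exposed as a SQL endpoint, a client that can talk to SQL Server should be able to connect. As an illustrative sketch only, the Python below assembles an ODBC connection string of the kind you might pass to `pyodbc.connect`. The server and database values are hypothetical placeholders (use the connection details shown for your own datamart), and the driver name and `ActiveDirectoryInteractive` authentication mode are assumptions that depend on which Microsoft ODBC driver you have installed.

```python
def build_odbc_connection_string(
    server: str,
    database: str,
    driver: str = "ODBC Driver 18 for SQL Server",
    authentication: str = "ActiveDirectoryInteractive",
) -> str:
    """Assemble a semicolon-delimited ODBC connection string for a SQL endpoint."""
    settings = {
        "Driver": "{" + driver + "}",  # driver names are conventionally wrapped in braces
        "Server": server,
        "Database": database,
        "Authentication": authentication,  # interactive Azure AD sign-in (assumed mode)
        "Encrypt": "yes",
    }
    return "".join(f"{key}={value};" for key, value in settings.items())


if __name__ == "__main__":
    # Hypothetical placeholders: substitute the values shown for your datamart.
    conn_str = build_odbc_connection_string(
        server="your-datamart-endpoint.example.net",
        database="YourDatamart",
    )
    print(conn_str)
    # With pyodbc installed, you could then try: pyodbc.connect(conn_str)
```

Keeping the settings in a dictionary makes it easy to swap the authentication mode or driver version without touching the string assembly.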

23:20

It’s a repository. It’s taking data that has been floating around your organization for 20 years, that IT had no idea existed, and putting it into a managed repository. OK, if you still need to do any kind of cleansing or transformation, a dataflow would be the first step, feeding the datamart.

23:47

The output from a datamart in Power BI is a dataset, okay? With a datamart, you can put in RLS, row-level security; you can do ad hoc queries; you can build reports. It’s a repository. In the presentation, here is a little more detail

24:13

on what each of these does and what each is used for. But remember, at the end of the day in Power BI, the end result from either of these is a dataset. The dataset can come from multiple sources. The dataset is going to be a composite model. It’s what our users use to build things. All right, lastly:

24:44

If you have existing dataflows, you can push those into a datamart, which now gives us a way of doing SQL queries. We can use the visual editor, with no extra management. If you have an existing datamart, you can add a dataflow to do some of that modeling and ETL work. You can use your own data lake and feed it in there, so everything goes hand in hand. And since I like pretty pictures...

25:15

Here’s a pretty picture. Jennifer asks if there’s a way to reuse security defined in other tools. I am not aware of any way to take, say, an FM set of security, whether it be parameter maps or object-level security, and pass that through.

25:39

Rasha, as for security and protected data: any security that’s at the data source will, for the most part, be recognized and respected, per what Microsoft says. Okay. But Jennifer, to your question: unless it’s at the data source, if it’s done in another tool, I don’t...

26:03

I can’t see a way to push that from one tool to another. The security would need to be in the data source, as Rasha is asking about. So if the data source has security on it, that’s going to pass through. OK, Benjamin. Well, a dataset... Look at where datasets are in the flow here, Benjamin. OK.

26:34

The dataset is the output of a datamart; it’s what we could use. I’m going to go back to this for a second. You can use a dataflow to feed your datamart, but a dataset is the output. Now, in theory, if you took a dataset, exported it into Excel and then turned around and used that to feed a datamart, that would be one way to do that also.

27:02

I want to stress again: this is technically still in preview. Everything I’m saying today could be absolutely useless tomorrow. We’re all used to that, right? We all know how that goes. All right? You guys have all been asking a lot of great questions. I appreciate it.

27:31

Scott, thank you for that reply to Jennifer. So it will pass the FM security through. Great. This is why we have the Connector, gang. So Scott, put your little chat meeting link back in there again. So Jennifer, it sounds like you want to connect with Scott and talk about that Connector. How do you refresh this? You can do incremental refreshes, all right?

28:01

We can set up a scheduled refresh. Basically, partitioning gets automated, so it knows what’s fresh and what isn’t. You set up a policy, and that policy partitions the table, which helps reduce how much data has to be refreshed. We can do incremental refreshes, which lets us get to near-real-time data.
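The partitioning idea behind an incremental refresh policy can be illustrated with a small Python sketch. It assumes a hypothetical policy of daily partitions: keep the last `store_days` days, always reload the most recent `refresh_days`, load any day in the window that was never loaded, and drop anything older than the window. This is a conceptual model of the behavior described above, not Power BI’s actual refresh engine, and the function and parameter names are invented for illustration.

```python
from datetime import date, timedelta


def plan_incremental_refresh(
    today: date, store_days: int, refresh_days: int, existing: set[date]
) -> dict[str, list[date]]:
    """Classify daily partitions under a hypothetical rolling-window policy."""
    if not 0 < refresh_days <= store_days:
        raise ValueError("need 0 < refresh_days <= store_days")
    window_start = today - timedelta(days=store_days - 1)  # oldest day we keep
    refresh_start = today - timedelta(days=refresh_days - 1)  # oldest day we reload
    plan: dict[str, list[date]] = {"refresh": [], "keep": [], "drop": []}
    for offset in range(store_days):
        day = window_start + timedelta(days=offset)
        if day >= refresh_start or day not in existing:
            plan["refresh"].append(day)  # recent, or never loaded: (re)load it
        else:
            plan["keep"].append(day)  # historical and already loaded: leave it alone
    plan["drop"] = sorted(d for d in existing if d < window_start)  # aged out
    return plan


if __name__ == "__main__":
    loaded = {date(2023, 5, d) for d in range(4, 10)}
    print(plan_incremental_refresh(date(2023, 5, 10), 5, 2, loaded))
```

Only the "refresh" list touches the source; the "keep" partitions are left as they are, which is where the savings in refresh time and resource usage come from.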

28:30

We can reduce our resource usage. We can create larger and larger... man, you guys are all hitting me at once here. Okay, I’m going to hit these. I’m going to pause here, because this is important stuff. Those of you who know me know that I don’t give a gosh darn heck about slides on a page. I want you all to get your questions answered. That’s more important to me. So let’s see what we’ve got here. Wes:

28:54

No, a dataset is any kind of data that has been published, whether it comes from a datamart, from Power BI Desktop or from somebody directly uploading something. So I don’t need a datamart to create a dataset. A datamart doesn’t have to have a dataset output either, because once the datamart’s been created, if somebody wants to connect to that data using an ad hoc query,

29:22

they could connect to it using SSMS. And for those who don’t know SSMS, I’m talking SQL Server Management Studio. So technically, while it’s going to automatically create a dataset, if you did not want to use it in Power BI, you could get to that datamart through an external tool. Okay.

29:45

Dara, yes, I know you guys don’t see the questions, so I am trying to repeat them. Wes’s question was: do you need a datamart for a dataset? Can a datamart be used without a dataset? Paul’s question is: can the output from a datamart be ingested into the automatic scheduler? That I don’t know; that one’s going to have to go on hold for a minute. All right, Dara, to your question about the delay between the datamart refresh and the auto-generated dataset refresh: it’s 10 minutes as of right now.
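The 10-minute behavior described here (wait for a quiet period, then rebuild while the current copy stays available) can be sketched as a debounce plus a side-by-side swap. The Python below is a hypothetical model for intuition only: `ProactiveCache`, `build_model` and the dictionary standing in for a dataset are invented names, and this is not Microsoft’s implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Callable, Optional

QUIET_PERIOD = timedelta(minutes=10)  # delay quoted in the webinar; may change


@dataclass
class ProactiveCache:
    build_model: Callable[[], dict]  # hypothetical: builds a fresh copy of the dataset
    active_model: dict = field(default_factory=dict)  # the copy users query
    last_change: Optional[datetime] = None
    refreshed_at: Optional[datetime] = None

    def record_change(self, when: datetime) -> None:
        """Note that the datamart's data changed at `when`."""
        self.last_change = when

    def tick(self, now: datetime) -> bool:
        """Rebuild only if changes are pending and nothing changed for QUIET_PERIOD."""
        if self.last_change is None:
            return False  # nothing has changed yet
        if self.refreshed_at is not None and self.refreshed_at >= self.last_change:
            return False  # already refreshed since the last change
        if now - self.last_change < QUIET_PERIOD:
            return False  # activity too recent; keep waiting
        new_model = self.build_model()  # built side by side; active_model still serves reads
        self.active_model = new_model  # switch readers to the new copy in one assignment
        self.refreshed_at = now
        return True


if __name__ == "__main__":
    start = datetime(2023, 5, 10, 14, 0)
    cache = ProactiveCache(build_model=lambda: {"version": 1})
    cache.record_change(start)
    print(cache.tick(start + timedelta(minutes=5)), cache.tick(start + timedelta(minutes=10)))
```

A scheduler would call `tick` periodically. The design point being illustrated is that the rebuild never modifies `active_model` in place, so queries keep hitting the old copy until the new one is complete.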

30:15

So it’s looking for 10 minutes of no activity. And what it does (that’s an excellent question, by the way, Dara, and it’s on this next slide) is use a thing called proactive caching, which basically builds a side-by-side model. That way there’s no downtime: the data is still available while that’s going on. Carollin, yes.

30:45

A datamart, it’s not more for a business user, but the point of all this is to make sure business users have access to the data they need for self-service and ad hoc analysis while still putting a managed, governed data source in your organization. This is going to help minimize and

31:11

handle some of those skunkworks projects and skunkworks databases that might be around your organization. Look, I want a second poll here, just because I want a second poll. I want to ask y’all: how many of you have that random Access database that’s under Phil’s desk? How many of you have Donna’s HR files, those Excel files people are trying to report off of, right? I think we’ve all been in that situation at least once.

31:41

And that’s what this is for: to finally take that data and say, yes, we’ve approved this data. We’ve modeled it; we put it through a dataflow, so ETL has been done on it. It’s cleansed. We put our stamp of approval on it. Okay, I have not found a size limitation, Elizabeth.

32:06

And as a matter of fact, they do say that, through the proactive caching, you can build large datamarts. I don’t have a number for “large,” because, you know, large is relative, right? I haven’t hit a limit yet. There’s always going to be a limitation on things, but hopefully it’s so large that it won’t impact most of us. All right.

32:33

We all cool? Everybody good so far? Did I miss anybody? Give me a thumbs up or a smiley face so I know you’re all doing good. This may be the first webinar that I need 2 hours for. All right. Ooh, Carollin, I like that question. Carollin asked if this could be a prototype for an enterprise data mart.

33:02

You know, that would be a heck of a way to use this. I like that question. My gut says yes, this would be a great way to test some data, test the data source, see how well it works and see how well your business users do with it. And then, using a deployment pipeline, you could push it out if it worked well, right?

33:32

Alwin, I don’t see any reason why you would have a problem modeling data from both the datamart and Snowflake. You should be able to model that without issue. Paul, I’d be glad to go back a slide. What specifically would you like me to hit on this?

33:53

You know, one of the beauties of this today, by the way, gang, is that almost all of this came directly from Microsoft. So for anything that’s on here, I can just say it’s Microsoft’s fault. No? OK, you’re quite welcome. And remember, Paul, you can download this and read it. The only reason I’m going a little quicker now, gang, is that you’ve had a lot of questions and I want to make sure I have enough time to actually show this.

34:23

David, yes, the recording and the slide deck are available. You know, if somebody who’s still around happens to have the URL for the slide deck and can throw it in there real quick for me, I’d appreciate that. This is why, even though I could do these all by myself, I don’t. So David, we will have somebody... There it is. Thank you. There we go.

34:53

Patrick, I appreciate that. You can only send to hosts and panelists, but thank you; A for effort. Just for you, Patrick, have a cookie. All right, let’s keep this going. It’s my Friday, so let’s keep this going. Going back to Carollin’s question, and to some of the other questions that have come up:

35:21

This can be put into a deployment pipeline. So yes, this is a good way to do some prototyping. You could go ahead and prototype this out, put it into a pipeline and make sure that everybody’s using the import model you’ve tweaked. I really like that usage. Carollin, you too: have some pizza. Okay. There we go. I’m making sure you’re all paying attention. All right.

35:51

Right, Jeff, I never thought about pipelining it either until I started reading through this and was like, oh yeah, I could pipeline it with a deployment pipeline. Okay, it’s 2:42; let’s do a demo. Hickory dickory dock, 2:42 o’clock. So here I am in Power BI, in my app.

36:19

Dara’s question is about recommendations for avoiding version errors in datamarts. I have to think about that one. I think with proactive caching, we’re not going to have as much of an issue with version errors.

36:48

Because the dataset would be updated. The only time there might be a version error is if somebody’s using something literally while it’s updating, and that’s going to depend on how fast and how often your data is actually refreshing. So that might be a bigger question for offline, so we can understand how often your stuff is actually updating and what kind of impact that might have. All right, I’m going to go to my workspace, a Premium workspace.

37:18

Look at my shiny little diamond up there. Notice that I already have a couple prebuilt, because, you know, I don’t like to wait. Neither should you. New datamart. Rasha’s question is which performs better, DirectQuery or import mode? It’s going to depend on the size of the data, the structure of the data,

37:40

how much you’re doing, how much it changes and how much transformation you need to do. Remember, there are certain transformations I can’t do in DirectQuery mode, so that’s going to be case by case. OK, and now comes the fun part, gang: doing everything live. This is not recorded; this is not edited. I always felt bad for folks on SNL.

38:17

Well, there’s nothing more exciting than doing something live, is there? Yeah, exactly, Jeff: what could go wrong? I made sure to light 14 candles. I took care of everything. While this is building, what’s happening is space is being allocated.

38:45

Space is being set up. It’s going on our side. Thank you, Scott, for making a small sacrifice to the Azure gods. And Dara, let me see what you just wrote there while I’m waiting for this. Okay. So Dara said they see this happen with connection information loss: you exit, come back in, and the version error disappears.

39:17

Retest the connection to see if this practice can avoid it. That’s fair. You know, I’m going to say, Dara, to be honest, there are little things I’ve seen where I’ve had to go out and come back in, especially when something’s in preview like this one. It kept giving me an error on one of the tables, but then if I went back out and came back in, the error went away, and I don’t know where or who’s to blame, if there’s any blame for that.

39:46

It just happens. So while this is loading, I want to show you some of the things on the screen here. Oh, there we go. Hey, look at that. I stalled long enough. OK, so I now have a blank repository available. Think of this as if I were in SSMS and had just created a new database. I’ve allocated space; all my basic tables have been built. Everything’s going on. I’m going to get data.

40:15

Here is the full list of everything I can do. And this is where you all say “ooh, ah.” Come on, give me an “ooh, ah.” Olga, yes, it’s an Azure back end that’s being created for the whole tenant. It is for the whole tenant, and they’re going out to that lake in their own separate repository.

40:42

Right. And again, that’s what makes this nice: now it’s managed. And thank you, Kayla, for that “ooh, ah.” All right, so look at all this, gang. I’ve got this huge list of things I can pull this data from. But you all already know that, even with all of this, I’m going to use Excel. Come on, why wouldn’t I?

41:11

It’s supposed to already be in that directory. See, Jeff, nothing can go wrong. It’s absolutely perfect. Everything can go right. So I’m going to use one of the same Excel files that we use in one of our classes. This is the exact same Excel file. It’s basically Adventure Works, right? Aaron, this is not the same thing as the Dataverse. I could answer that for you later. You and I can talk about that later.

41:40

It’s part of the Dataverse. You know, Stephen Strange is going to come along at some point. It’ll be part of the multiverse. So this is Adventure Works data, gang. It’s a small file, 5 MB. Now that I’ve uploaded it, I don’t need to set up a gateway, and I don’t need to create a new connection, because it’s coming from an Excel file. I’m essentially taking an XLS, and there it is.

42:11

There are all my tables, so now I can model this. I can do my transformations. This is going to open up Power Query Editor online, so I can do any kind of data transformation, because, Rasha, going back to your question, I am technically in import mode right now, OK?

42:37

So I can do the same types of things that we would see in Power Query Editor with import mode. I can transform, I can extract, I can split, I can concatenate, etc. So here I still have all the same functionality I would normally have, and when I save this...

43:09

There it is. There’s my data. Look at that. I can model it. See, this is what I was talking about, Dara: this little error here. For some reason it shows this, but when I go out and come back in, it’s gone.

43:41

That’s just one example, you know. I don’t always like showing what’s behind the scenes, because sometimes that isn’t the greatest thing to show. But I think it’s important that you all see this little warning on here. Once I go out and come back in, that warning goes away. I don’t know if it’s doing something on the back end, or what it’s trying to do that’s different. But here I can change my things.

44:10

My columns, too: I’ve got my column tools. I can update my data set, I can do my modeling. But this is the cool part: I can come here to Query. OK, and this is what I was talking about. Let’s say that you don’t want to build a report. Let’s just say that you want to access this data.

44:43

Look at that. For those of you out there who like writing your own SQL, and I know there are a lot of you, you’re in my classes all the time, and you always ask me, can I just write SQL? Yes, you can. And the cool thing is that I could take this from here, write my own custom SQL, put in my joins, put in whatever weirdness I want to put in my SQL.

45:11

And now I could build a report from that if I wanted to. So I just wrote SQL. OK, I just wrote raw SQL, got my result set, and I could visualize these results. I can build a report off of it, or I could open it up in Excel. Look at that. It just created an Excel file from that query.
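To try the "write your own SQL" idea outside Power BI, here is a minimal sketch in Python. It assumes Python's built-in sqlite3 as a local stand-in for the datamart's SQL endpoint (the datamart itself uses T-SQL, so the dialects differ), and the tables FactInternetSales and DimProduct, with their columns and rows, are hypothetical Adventure Works-style samples rather than the demo file's actual contents.

```python
import sqlite3


def build_sample_db() -> sqlite3.Connection:
    """Create a hypothetical, in-memory miniature of Adventure Works-style tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE DimProduct (ProductKey INTEGER PRIMARY KEY, Category TEXT);
        CREATE TABLE FactInternetSales (ProductKey INTEGER, SalesAmount REAL);
        INSERT INTO DimProduct VALUES (1, 'Bikes'), (2, 'Accessories');
        INSERT INTO FactInternetSales VALUES
            (1, 1200.0), (1, 800.0), (2, 25.5), (2, 4.5);
        """
    )
    return conn


def sales_by_category(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Join the fact table to a dimension and aggregate: the kind of
    hand-written query an analyst might type into a SQL query editor."""
    query = """
        SELECT p.Category, SUM(s.SalesAmount) AS TotalSales
        FROM FactInternetSales AS s
        JOIN DimProduct AS p ON p.ProductKey = s.ProductKey
        GROUP BY p.Category
        ORDER BY TotalSales DESC
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    for category, total in sales_by_category(build_sample_db()):
        print(category, total)
```

The result set is a plain list of rows, which is the same shape you would then visualize or hand off to Excel.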

45:45

Yeah, OK, fine. Here you go. Let me connect. All right, fine. Assuming this would work, I would use my query, open it up here in Excel, and have a pivot table in there. Or I can use the Visual Query Editor.

46:17

The Visual Query Editor lets me do very similar things. Oh, look at that. I can bring that over. I can join these together. I can create a join off of them. I can create a relationship. I can append queries together. I can change the tables. I can do the same kinds of transformations that I just saw.

46:44

Just like in Power Query Editor, I could do a multi-column join if I needed to. I can combine that, view the SQL behind it, and even edit that script if I wanted to. So I can use both of these in tandem: drag things over, have a visual look at it, see what’s going on, remove columns, join columns.

47:11

I can see the SQL that’s created on the back end, so this is more than just publishing something to allow for reporting. This truly allows us to do ad hoc analysis. Come on, somebody tell me they think this is really neat and cool. Anybody? Thank you. So now that I’ve got all this...

47:41

If I go out to my data, out to that workspace, there’s my data set. This is the data set that it created. So I could put this data set into a pipeline and put it out wherever I wanted.

48:09

And oh, look, now my users (doo-bee doo-bee doo-bah, Perry the Platypus) can go ahead and build a report from it. I can build a report. I’ve got access to this data. I’ve got access to everything going on. It just becomes another data set. And so I could turn around and embed this.

48:42

Out somewhere. Hey, I can save this. I can do my explorations, because now I’ve got another data set. Don’t worry, Steve, I will clean all of these data sets up so that they’re not taking up space in our environment. I’ve got to be aware of that for administration.

49:09

So, Kayla’s question is: what if something isn’t ready for prime time? You would control who has access to this workspace. Maybe this is a development workspace; as you see here, ours is the development workspace. Not everybody in Senturus can see what I do out here playing around with these things. When I’m ready to make it prime time, I would use the deployment pipeline and put it out into a public workspace.

49:38

So this is how we integrate all these things together. All right, a couple of other questions. How do you add these datamart tables to an existing model or another report? Alwin, I would take this as a source for another datamart and combine those sources together, but I’d have to play with that.

50:07

Does the data get refreshed? When I open up a new report or a new data set, I would get the refreshed data. So again, these can be set up on a scheduled or automated refresh. So yes, if you set it up for an incremental refresh, it’ll look for changes. If it doesn’t see any changes after 10 minutes, it will go ahead and do that. So it can be set up to refresh dynamically, yes.
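Incremental refresh only comes up briefly here. As general background that was not shown in the demo: Power BI's incremental refresh filters a date column with RangeStart and RangeEnd parameters, one bound inclusive and the other exclusive, so that only recent partitions are reloaded. The Python sketch below models just that windowing idea. The function names and the "refresh the last N days" policy are hypothetical, and it does not model the service's "detect data changes" option or anything the datamart does internally.

```python
from datetime import date, timedelta


def refresh_window(today: date, refresh_days: int) -> tuple[date, date]:
    """Return (range_start, range_end) for a hypothetical 'reload the last N days' policy.

    range_end is exclusive and set to tomorrow, so rows dated today are included.
    """
    if refresh_days < 1:
        raise ValueError("refresh_days must be at least 1")
    range_end = today + timedelta(days=1)
    range_start = range_end - timedelta(days=refresh_days)
    return range_start, range_end


def rows_to_reload(rows: list[dict], range_start: date, range_end: date) -> list[dict]:
    """Half-open filter (start inclusive, end exclusive): a row on a boundary
    falls into exactly one window, so it is never loaded twice."""
    return [r for r in rows if range_start <= r["order_date"] < range_end]


if __name__ == "__main__":
    rows = [{"order_date": date(2023, 4, d), "amount": d} for d in (17, 18, 20, 21)]
    start, end = refresh_window(date(2023, 4, 20), refresh_days=3)
    print(start, end, [r["order_date"].isoformat() for r in rows_to_reload(rows, start, end)])
```

Older rows (here, April 17) stay in their already-loaded partitions; only rows inside the window are re-queried.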

50:31

Using proactive caching and the dynamic, incremental refresh. All right, I’m down to 4 minutes, so let’s talk about administration. I told you I might have needed 90 minutes for this one. For administration, we can control it through our admin portal. We can see what’s in a workspace. We can see who’s using what in a workspace. I can see.

51:03

Where the heck are you? My premium workspace. So I can see what’s in here. I can get my details. I can see I’ve got my data sets. Training Premium. Where’s my Training Premium? Oh, of course, it’s sorted by this.

51:29

There we go. Got to the right one. There are my datamarts. There are my data sets. Nelson, again, that would be done using a pipeline to publish this downstream. So for right now, you can create them. Creating datamarts requires a Premium Per User (PPU) license.

51:52

And that is actually a great suggestion, Scott. How many of you would be interested? We have our Chat with Pat sessions, where we focus on a specific topic. How many of you would be interested in having this as a follow-up session, maybe in June or July? We can go deeper into this, because it’s already 3 o’clock and you guys are still asking me questions, which is awesome. Don’t get me wrong, this is awesome. I just don’t have enough time to answer everything today, and this is great, OK?

52:24

Andrea, there’s my July Chat with Pat. If I want to see audit logs on these things, I would go out to my Microsoft 365 admin center. That’s where I would do audit logging, because, remember, this is tied into my entire organization. So I would use this for my audit logging, my compliance management, things along those lines, OK?

52:50

Back here I would take care of my other stuff. I can keep track of them. I can see my activity events. There are also APIs. I want to wrap this up, but there are APIs that can be used. You can use the REST APIs; there’s a link in the presentation on how to do it. Everything else comes into the portal. So, in summary.
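The session only points to the REST APIs. As one hedged example, Power BI's admin Get Activity Events endpoint (GET https://api.powerbi.com/v1.0/myorg/admin/activityevents) takes startDateTime and endDateTime as single-quoted ISO 8601 UTC values that must fall within the same UTC day. The sketch below only builds and validates that request URL. The helper names are hypothetical, it does not authenticate or send anything, and this may not be the API the presentation's link describes.

```python
from datetime import datetime, timezone

ACTIVITY_EVENTS_URL = "https://api.powerbi.com/v1.0/myorg/admin/activityevents"


def _iso_millis(d: datetime) -> str:
    """Format a UTC datetime as ISO 8601 with milliseconds and a trailing Z."""
    return d.strftime("%Y-%m-%dT%H:%M:%S") + f".{d.microsecond // 1000:03d}Z"


def activity_events_url(start: datetime, end: datetime) -> str:
    """Build a Get Activity Events request URL for a window inside one UTC day."""
    if start.tzinfo is None or end.tzinfo is None:
        raise ValueError("use timezone-aware datetimes")
    start = start.astimezone(timezone.utc)
    end = end.astimezone(timezone.utc)
    # The API rejects windows that span more than one UTC day.
    if start.date() != end.date() or start > end:
        raise ValueError("start and end must fall in the same UTC day, with start <= end")
    return (
        f"{ACTIVITY_EVENTS_URL}"
        f"?startDateTime='{_iso_millis(start)}'"
        f"&endDateTime='{_iso_millis(end)}'"
    )


if __name__ == "__main__":
    print(activity_events_url(
        datetime(2023, 4, 19, tzinfo=timezone.utc),
        datetime(2023, 4, 19, 23, 59, 59, tzinfo=timezone.utc),
    ))
```

Actually calling the endpoint typically requires a Power BI administrator's Azure AD access token sent as a bearer token, and results come back in pages, so a real client would keep following the continuation link in each response until the last page.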

53:15

It’s a big topic. I’m glad you all have loved this. I appreciate all of the feedback. I think it’s wonderful. Thank you all for the interaction. This tool allows us to bridge the gap. It gives us self-service while giving us governance. It’s an Azure-backed database, which we can incrementally refresh and which we can access through other tools.

53:38

We administer it through Microsoft 365, and it works with everything we already have. It works with our dataflows. It works with everything. Thank you, Aaron. Thank you, Richard. I’m going to enjoy my vacation. But before we go, look: Senturus provides hundreds of these things. We’ve got product demos. We’ve got it all. We are committed to sharing our knowledge. Please go to Senturus.com for resources to see more.

54:07

Here are some upcoming events. We’re doing a Cognos Analytics performance tuning session. That won’t be me, because I’ll be gone on the 17th, right when I’m back from vacation. We’re going to talk about Tableau. Then on May 18th, we’re going to talk about semantic layers in the cloud. Then on June 21st, Cognos. Register, come. Hey, don’t forget to subscribe and like. All right, wait, no, that’s the other thing.

54:31

We’ve got lots of stuff going on. Hey, at Senturus we concentrate on BI modernization across the entire stack. We’ve got you covered. We put things in a green arrow, they come out a blue arrow, and life is awesome. We really shine in hybrid environments. We can do Cognos, Tableau, Power BI. We’ve talked a lot about the connector today. The connector is just one example of how we can help you with all those types of connections. Please reach out to Scott.

54:58

Come to my other sessions and we can talk more about that. We’ve been doing this for 22 years: 1,400 clients, thousands of projects, lots of people from companies that are probably on here. You’re all wonderful. Thank you all very much. If you’re looking to be part of this crazy team, we are hiring. We’re looking for a managing consultant and a senior Microsoft BI consultant. The job descriptions are at Senturus.com. You can also send your resume. OK.

55:28

We’re also looking for a FinOps consultant, who will be part of our cloud cost management practice, so you can be on the cutting edge of some good stuff. Well, ChatGPT can only solve so many problems, but you know what? ChatGPT does write some really great DAX. Just saying, and I have Rich to thank for that. Rich, if you’re still on, see, I gave you credit where credit is due.

55:55

We found ChatGPT worked well for DAX writing. Again, I think I got everybody’s questions. If I missed a question, we will have it in the Q&A session. We will put this up. Give us a call, give us an e-mail. Come on, talk to us. You hear? I like talking. It’s what I do. Thank you all very much for coming today. You’ve been wonderful. Get the heck out of here. Enjoy the rest of your week. I will talk to you all in May.

56:24

Thank you all. I’m going to be shutting this down in like 1 minute. Have a wonderful, wonderful time. Thank you all very much.