Thirty years ago, Ralph Kimball popularized the concept of a star schema for storing data. In the technology world, something 30 years old is seen as a relic, outdated and well past its prime. But in this case, is that true? Have all the newfangled technologies really displaced the star schema? Data lakes. Lakehouses. Optimize this! Be more efficient with that! It feels like everyone has a newer, faster, better alternative to the star schema.

In this on-demand webinar, we discuss the star schema and whether it’s still relevant in the modern data environment. We talk about:

- The history of the star schema

- How the star schema fits into today’s data storage world

- Why the star schema has proven to stay relevant…or not

- What the future holds for data storage

- Where the star schema fits into a cloud cost management strategy

Presenter

Pat Powers

BI Trainer and Consultant

Senturus, Inc.

Pat is one of our most popular presenters, regularly receiving high marks from participants for their subject matter knowledge, clarity of communication and ability to infuse. Pat has over 20 years of experience in data science, business intelligence and data analytics and is fluent across multiple BI platforms. They are a Tableau Certified Associate and well versed in Power BI. An expert in Cognos, their product experience goes back to version 6. Pat has extensive experience in Actuate, Hyperion and Business Objects and certifications in Java, Python, C++, Microsoft SQL.

Machine transcript

Welcome to the webinar: Is the star schema dead?

2:51

This is seriously probably the most contentious webinar I’ve ever done.

2:56

You don’t know whether I’m saying yes or no yet, so just hold on. Don’t get ahead of yourselves.

3:12

We’re going to talk about the star schema, and we’re going to talk about whether or not it’s dead.

3:38

We’ve got the Q&A panel; please use it.

3:42

We’re going to be doing a lot of this in real time today, kids, because this is a topic that I’m sure you all have thoughts on, or have at least thought about. So we’re going to talk about this, and if for some reason I can’t get to your question, we’ll put it into a written response and post that on the Senturus website.

4:19

The very first question that everybody is going to put in the panel:

4:33

How do I get a copy of this presentation? Well, thank you, thank you, Scott. Yes, you can get a copy of the recording and this presentation, either by going to the resources section of Senturus.com or by clicking the magic link that I am failing to copy and paste into that window.

5:13

OK, so yes, of course you can get a copy of this. There’s also all sorts of other wonderful, wonderful things on our website, so please go there. Don’t go there now. Wait until we’re done.

5:30

Here’s what we’re doing today.

5:33

First I’m going to tell you who the heck I am, for those of you who don’t know me.

5:46

Then we’re going to talk about the star schema.

5:49

Then we’re going to do some overview and some additional resources and then Q&A. But again, I’m going to do the Q&A live as we’re doing this because I know you’re going to have questions, comments, thoughts, opinions, etcetera.

6:04

Notice there’s no demo today.

6:08

I struggled and racked my brain to come up with a demo for this presentation. Consider this presentation more of a TED talk.

6:23

Less of a hands-on demo kind of thing and more of a “let’s talk about this,” and I’m hoping that you all walk out of here today with some things to think about. If you look at the chat window, Scott Felton, our wonderful sales director who handles that stuff, posted a link where you can get a 15-minute follow-up meeting with him to talk about this more.

6:53

That’s OK, just 15 minutes of your life, because I’m pretty confident a lot of you are going to walk out of here with thoughts and questions.

7:13

I’ve been with Senturus for 18 years this year.

7:17

All right, I started when I was eight, and if you believe that, you’re my bestest friend in the world, OK.

7:23

This is my 26th year of data science, data analytics, data warehousing, data modeling, data whatever, whatever term you wish to give it this week.

7:39

Almost 3 decades I’ve been looking at data.

7:51

I know it’s hard to believe, but sometimes I actually know what I’m talking about. I just successfully re-upped my certifications and badges for some of the products we work with. I am certified and knowledgeable in Cognos, Power BI and Tableau.

8:26

I also have certifications in Java, C++, databases, yadda, etcetera. This is me.

8:38

What I want you to do now is answer this poll.

9:03

OK. Where do your data sources come from for analytics? Where are you coming from for analytics? And I’m going to let people have a minute or so here. I’m going to watch that timer.

9:22

I want to get a good percentage of folks.

9:30

And I’m going to challenge those of you who are answering with star schema.

9:37

Because a lot of people think they have a star schema when in fact they have a hybrid: what they’ve got is a transactional system with a few materialized views pretending to be a star.

9:50

That is a hybrid, not a true star.

9:59

You know, and then there are a lot of people who don’t know. And that’s OK. It’s OK if you don’t know.

10:38

The majority of you are coming in from transactional systems, which doesn’t shock me in the least.

10:44

The 8% of you that don’t know, I would wager that you probably are transactional or a hybrid.

10:53

A very small percentage of you are on wide tables.

10:58

OBT, or one big table, is something we’re going to talk about today.

11:02

We got a larger percentage of flat files than I expected: 37% of you are using flat files, which is very interesting, because that tells me you’re probably using something like Power BI or Tableau, where you can easily upload these. A lot of folks don’t realize they can do that in things like Cognos too, and those who do are making their own data modules, which is great.

11:27

But it’s not a long term sustainable way to do things and I think you already know that.

11:35

The ones I want to talk to directly today

11:40

are the transactional, star and wide table folks.

11:44

We’re 11 minutes into this. The big question of the day: is the star schema dead?

11:55

Yes and no. Told you this was going to be a contentious meeting.

12:10

I promised you excitement and adventure.

12:35

Here’s the reality.

12:38

If you are among the 56% of respondents who are still on a transactional system,

12:47

a star schema for reporting is absolutely, 110% going to improve your analytics environment and your analytics capabilities.

13:11

Today’s modern technologies, today’s cloud-based systems, are bigger, better, broader options.

13:21

There are some new and exciting options out there.

13:26

They’re not killing the star.

13:29

But they’re certainly giving you something new to look at.

13:35

At the same time, those new and modern technologies come at a cost.

13:41

Whether that cost is time or whether that cost is money,

13:47

there’s a cost.

13:48

So if you’re among the 56% of people who answered “transactional” in that poll a minute ago, for you, it’s an iterative process.

14:02

We’re going to dig deeper into these three scenarios: a star

14:09

versus a transactional versus a wide table, or one big table (OBT).

14:18

We’re going to start talking about some of the pluses and minuses here.

14:23

This becomes very, very important for those of you who are moving into a FinOps world, or considering doing things of a FinOps nature: if you’re trying to figure out your AWS spend, if you’re trying to figure out all these other things,

14:42

how that data is stored is going to become very important. OK. And shameless plug: we have products that can help you, and our advisor team can help you with your FinOps needs. Scott would be happy to get you talking to the right people. So, you know, scroll up, click on that little 15-minute link with Scott and have a nice day. All right.

15:09

Let’s talk about this for a second.

15:11

30 years ago, before some of you were even born.

15:23

Ralph Kimball said, “Hey,

15:26

we need a better way of doing things.”

15:29

Because 30 years ago,

15:34

everything was pretty much transactional.

15:37

And no, I am not going to debate you on whether Kimball or Inmon is better. We’re not getting into that today.

15:50

Ralph said there needed to be a better way. For those of you who weren’t around or didn’t have to deal with these budgets 30 years ago: did you know that in 1996 a GB of storage space was, you know, about $12,000?

16:08

Think about that. A GB of storage space was $12,000.

16:14

So there needed to be something better. There needed to be something faster. There needed to be something optimized for analytics.

16:29

If you want to do things on-site today, well, yeah, the hardware is much cheaper. I mean, give me a break. What is this one right here? I’m just going to pick the first one that’s on my desk. This one is a 128 GB flash drive that I think cost me 6 bucks. OK.

16:47

At 1996 prices, that’s more than $1.5 million worth of storage space.
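Using only the figures quoted in the talk (about $12,000 per GB in 1996 and a 128 GB flash drive for about $6), the comparison is simply capacity times price per GB. A minimal Python sketch; the constants are the presenter’s numbers, not independently verified price data:

```python
# Figures quoted in the talk; treat them as the presenter's numbers, not verified price data.
PRICE_PER_GB_1996 = 12_000  # USD per GB in 1996, as stated
FLASH_DRIVE_GB = 128        # capacity of the flash drive on the desk
FLASH_DRIVE_PRICE = 6       # USD, approximate price today


def cost_at_1996_prices(capacity_gb: int, price_per_gb: int = PRICE_PER_GB_1996) -> int:
    """Return what `capacity_gb` of storage would have cost at the quoted 1996 price."""
    return capacity_gb * price_per_gb


then_cost = cost_at_1996_prices(FLASH_DRIVE_GB)
ratio = then_cost // FLASH_DRIVE_PRICE  # how many times more the same capacity cost then

print(f"{FLASH_DRIVE_GB} GB at 1996 prices: ${then_cost:,}")
print(f"Roughly {ratio:,}x today's price of about ${FLASH_DRIVE_PRICE}")
```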

16:55

So yeah, on-site hardware is much cheaper. But

17:00

what about the cost of maintaining things on-site? What about keeping an IT department? What about keeping a data center? What about the disaster recovery plan? What about, what about, what about?

17:11

So today we’ve got cloud-based solutions that can be cheaper if you’re analyzing your spend correctly, if you’re watching your instances correctly, if you’re reserving things properly.

17:26

They can be cheaper.

17:28

And depending on your schemas and depending on your design, they can potentially be faster.

17:38

At the same time,

17:40

and I’m going to stress it again:

17:44

what about all the on-premises data? What about all that data that you’ve been using? You know, I know of one particular group in

17:52

the utilities industry that has data, honest to gosh, going back to 1970.

17:59

And they’ve got that all stored in a data warehouse.

18:03

Are you going to move 50 years’ worth of data into the cloud? No, because then you’re killing yourself. There’s no point in it.

18:13

Hey, just because a buzzword says move it all to the cloud doesn’t mean you should.

18:19

You potentially still have a need for on-premises data.

18:24

And if you’re using that on-prem data for analytics…

18:47

So you still have to deal with on-prem data. The star is not dead.

19:06

Now let’s focus this. This is where this topic becomes very contentious, and I want to narrow this down.

19:17

First and foremost, I am not talking about the generic storage of data within an organization.

19:24

I am talking specifically about those of you that are here to understand how to do analytics.

19:35

You’re here because you have reports that have to be built. You have visualizations that have to happen. You’ve got things that have to happen on a regular basis.

19:49

That’s what we’re talking about. I’m not talking about generic storage in your environment. I’m talking about

19:56

a good model: a good foundation for report development, a good base for visualization creation, allowing (and here comes the big part)

20:08

self-service analytics.

20:11

Whether that self-service is giving somebody Power BI Desktop or opening up Cognos to allow people to do their own explorations.

20:23

Self-service: those of you who are using flat files

20:27

know how important that is if you’ve got people doing their analytics from an XLS.

20:35

You can’t rely on an XLS that’s got 73 formulas to get one number, that’s linked to four other spreadsheets, that’s sitting on Donna’s desk, when Donna takes a two-week vacation and takes her laptop with her.

20:51

You need a solution today.

20:54

You need a solid foundation today.

20:57

And for that,

20:59

the star is still very much an option, still very much alive and kicking.

21:08

Why am I focusing on just analytics?

21:12

Because we’re talking about getting data out of different systems. One of the biggest issues facing most IT folks and most organizations today is siloed data.

21:25

Every department has its own thing. Every department has its own database. Phil, in 1987, built a database in Access, and somehow, some way, that has become the de facto standard for everybody in that department to do their reporting off of.

21:45

Well, Phil’s retiring next week.

21:48

Now what are you going to do?

22:04

So we need a good way of getting data out of all of these different systems.

22:09

We need to see our key indicators. We need aggregation and summarization.

22:16

We need a way to deliver visuals, and I’m going to stress it again:

22:22

We need a self-service environment.

22:24

This is the future, whether you like it or not.

22:30

Anybody can go to Microsoft’s site and download Power BI right now.

22:39

They can start doing this stuff on their own.

22:42

So why don’t we make sure we’ve got data governance in place, and make sure we give them something that’s appropriate to do that work?

23:02

Come on, somebody throw me one question. Somebody tell me you’re alive out there. I’m talking to myself in the dark here.

23:55

That is one of the biggest challenges you’re going to face: how do we get Phil off of Excel? That is an excellent, outstanding question.

24:33

So when I’m talking about self-service, if I’m looking at Cognos, I’m talking about letting people use the dashboarding capability, the stories functionality and explorations, where you give them a package that is narrowed down to what they need. And that’s very important with both a star and a wide table: we give them very, very tailored

25:03

models and packages to work from, whether it’s a Power BI data set or a Power BI data mart, which is finally moving out of preview and which I will more than likely be covering next month. So, Velsko, that’s how we do it. We give them very tailored, tight models.

25:41

You cannot give somebody a transactional database and expect them

25:49

to be able to do self-service on their own when, heck, the most they might have is a data dictionary. They’re definitely not going to have an ERD to work off of.

26:03

And try to figure out what AR32$FX is.

26:09

This is what we’re talking about. So how do we get there?

26:14

Is the star schema dead? If you’re on a transactional system, heck no.

26:21

Because you cannot report off of this with any kind of good performance or with any kind of self-service.

26:31

I know that this is going to ring true for some of you.

26:35

Those of you out there who are writing reports today: I would wager very heavily you’ve got at least one set of data you work with where, “Well, first I have to pick the department, and then I have to pick the date range, and then I also have to filter down for a particular group. If I don’t do that, it takes 10 minutes to run the report, but if I filter those first three things first, then it’s great. But I also have to write my own custom SQL to put into the report, so that I can do these joins and make sure that it’s an

27:05

outer join, and then I’ll go ahead and union that together.”

27:11

And I know that for some of you that sounds familiar; that sounds like a typical day of report writing.

27:19

And Velsko, that’s what I’m saying. You cannot give that to somebody. You cannot give that to Donna in HR and say, “Donna, go do your own analytics. Oh, but by the way, you have to learn how to write SQL, and you have to figure out what a full outer join is. Oh, and by the way, there’s no referential integrity on that table.”

27:41

We give them a star.

27:43

This is how we go in steps. How do we manage those budgetary and time constraints? We go in steps. If you are here,

27:58

this is going to change your life.

28:08

Now I can start seeing my data. I can break it out. I can do an inventory data set

28:16

that I can give to the folks, that I can give to Phil to do the inventory reports. I can do a sales data set that’s a 90-day rolling window.
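A rolling data set like that 90-day sales window usually comes down to a date filter relative to an “as of” date. The sketch below assumes a hypothetical `sales` table with ISO-8601 date strings and uses an in-memory SQLite database only so it runs anywhere; in practice the filter would live in whatever layer builds the data set, using that platform’s own date functions.

```python
import sqlite3
from datetime import date, timedelta


def rolling_sales(conn: sqlite3.Connection, as_of: date, days: int = 90) -> list:
    """Return (sale_id, sale_date, amount) rows in the `days`-day window ending on `as_of`.

    Dates are stored as ISO-8601 text, so string comparison orders them correctly.
    """
    start = as_of - timedelta(days=days - 1)  # inclusive: as_of plus the days-1 days before it
    cur = conn.execute(
        "SELECT sale_id, sale_date, amount FROM sales "
        "WHERE sale_date BETWEEN ? AND ? ORDER BY sale_date",
        (start.isoformat(), as_of.isoformat()),
    )
    return cur.fetchall()


# Hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_id INTEGER, sale_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        (1, "2022-10-01", 100.0),
        (2, "2022-12-15", 250.0),
        (3, "2023-01-20", 75.0),
        (4, "2023-02-10", 40.0),
    ],
)

rows = rolling_sales(conn, as_of=date(2023, 2, 15))
for row in rows:
    print(row)
```

Rerunning the same function with a later `as_of` date rolls the window forward, so the data set handed to users never grows beyond 90 days.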

28:41

But what about the 55% of you that are already here? Where do you go? Where’s your next step?

28:49

We’re getting there. I promise you we’re getting there, OK?

29:05

One question you might ask is, “How did I get here? My God, what have I done?” No, wait. You might ask yourself:

29:15

Where do I do all this? Where do I put this? Where does this go?

29:22

That’s something that is going to depend on your environment.

29:27

It’s going to depend on your budget. It’s going to depend on what you’ve got available to you because you can model these things in different places.

29:36

Always remember our goal: to allow for analytics, reporting and visualization delivery. We’re going to put this where it needs to go.

29:46

We’re going to go from source or sources to ETL to a large repository to the tool.

29:53

We need to figure out where this goes best for our analytics, and for some of you that might mean having to build a star within the tool. You might have to build a data module in Cognos. You might have to use the Power Query Editor to transform the data yourself.

30:13

You may not have the option or the luxury

30:17

of doing this upstream.

30:20

It’s always best to push as far upstream as possible.

30:24

But if you don’t have that luxury,

30:27

you do what you can.

30:29

In every one of our report authoring classes,

30:37

we stress this time and time again. We have modeling classes that show you: OK, how do I build a model in Cognos? How do I build a model in Power BI? We do it where we can.

30:48

And that might mean (I’m going to go back a slide for a second) taking this transactional mess

30:57

and making it a star in the tool.

31:03

And for that the star schema is very healthy and alive.

31:09

So where do we go next?

31:13

Here’s that iterative process.

31:18

We start with the transactional.

31:22

If you’ve got a CRM or an ERP, if you’ve got anything where you’re inputting data, you’ve got a transactional system.

31:30

Whether you like it or not, you’ve got one.

31:35

So we move that to dimensional. Do we do it upstream? Do we do it in the tool?

31:52

For example, a customer sales package that can be given to just the customer sales associate team.
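To make the transactional-to-dimensional step concrete, here is a minimal sketch of turning order lines into a small star for a customer sales package: two dimension tables with surrogate keys, and a fact table holding only keys plus measures. The table and column names are hypothetical, a date dimension is omitted for brevity, and in-memory SQLite stands in for whatever database or tool you actually model in.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
PRAGMA foreign_keys = ON;

-- Hypothetical transactional source: one row per order line, attributes repeated.
CREATE TABLE order_lines (
    order_id INTEGER, order_date TEXT,
    customer_name TEXT, customer_region TEXT,
    product_name TEXT, product_category TEXT,
    quantity INTEGER, unit_price REAL
);
INSERT INTO order_lines VALUES
    (1, '2023-01-05', 'Acme',   'West', 'Widget', 'Hardware', 10,  2.50),
    (2, '2023-01-06', 'Acme',   'West', 'Gadget', 'Hardware',  1, 99.00),
    (3, '2023-01-06', 'Globex', 'East', 'Widget', 'Hardware',  4,  2.50);

-- Dimensions: one row per distinct member, keyed by a surrogate key.
CREATE TABLE dim_customer (
    customer_key INTEGER PRIMARY KEY, customer_name TEXT UNIQUE, region TEXT
);
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY, product_name TEXT UNIQUE, category TEXT
);
INSERT INTO dim_customer (customer_name, region)
    SELECT DISTINCT customer_name, customer_region FROM order_lines;
INSERT INTO dim_product (product_name, category)
    SELECT DISTINCT product_name, product_category FROM order_lines;

-- Fact: dimension keys plus additive measures; foreign keys enforce referential integrity.
CREATE TABLE fact_sales (
    order_id INTEGER,
    order_date TEXT,
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    quantity INTEGER,
    sales_amount REAL
);
INSERT INTO fact_sales
    SELECT o.order_id, o.order_date, c.customer_key, p.product_key,
           o.quantity, o.quantity * o.unit_price
    FROM order_lines o
    JOIN dim_customer c ON c.customer_name = o.customer_name
    JOIN dim_product  p ON p.product_name  = o.product_name;
""")

for row in conn.execute("SELECT * FROM fact_sales ORDER BY order_id"):
    print(row)
```

Because each customer and product now lives in exactly one dimension row, a report writer can filter and group on `region` or `category` without knowing how the source system encodes them.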

32:03

Here’s where the star starts to show its age and starts to show its shortcomings.

32:12

That’s when we want to start considering moving things to a wide table, an OBT, one big table. Here’s where we start moving that data into the cloud so that we can distribute it to everybody in the organization. Remember what I said earlier: one of the biggest challenges faced by the majority of IT people is dealing with siloed data.

32:38

If we can get a wide table and publish it to our Power BI service, it’s a very specific, tailored, narrowed-down data set, and it’s a rolling data set: 12 weeks, 12 months, whatever we need for our business users.

32:59

A wide table may be the right way to go.
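Here is a sketch of that wide-table step, assuming a small star with hypothetical tables `fact_sales`, `dim_customer` and `dim_product`: the joins run once at load time, only the columns the report needs are copied, and only the trailing 12 months are kept. SQLite’s `date(..., '-12 months')` modifier does the window arithmetic here; other engines spell this differently.

```python
import sqlite3

# Hypothetical star: one fact table and two dimensions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, customer_name TEXT, region TEXT);
CREATE TABLE dim_product  (product_key  INTEGER PRIMARY KEY, product_name  TEXT, category TEXT);
CREATE TABLE fact_sales   (order_date TEXT, customer_key INTEGER, product_key INTEGER, sales_amount REAL);
INSERT INTO dim_customer VALUES (1, 'Acme', 'West'), (2, 'Globex', 'East');
INSERT INTO dim_product  VALUES (1, 'Widget', 'Hardware'), (2, 'Gadget', 'Hardware');
INSERT INTO fact_sales VALUES
    ('2021-12-01', 1, 1, 25.0),
    ('2022-06-01', 1, 2, 99.0),
    ('2023-01-10', 2, 1, 10.0);
""")


def build_sales_obt(conn: sqlite3.Connection, as_of: str, months: int = 12) -> None:
    """Rebuild `sales_obt`: the star joined once, trimmed to the report's columns
    and to orders after (as_of minus `months`) up to and including as_of."""
    conn.execute("DROP TABLE IF EXISTS sales_obt")
    conn.execute(
        "CREATE TABLE sales_obt (order_date TEXT, customer_name TEXT, region TEXT, "
        "product_name TEXT, category TEXT, sales_amount REAL)"
    )
    conn.execute(
        """
        INSERT INTO sales_obt
        SELECT f.order_date, c.customer_name, c.region,
               p.product_name, p.category, f.sales_amount
        FROM fact_sales f
        JOIN dim_customer c ON c.customer_key = f.customer_key
        JOIN dim_product  p ON p.product_key  = f.product_key
        WHERE f.order_date > date(?, ?) AND f.order_date <= ?
        """,
        (as_of, f"-{months} months", as_of),
    )


build_sales_obt(conn, as_of="2023-02-15")

# Reports now read a single table, with no joins at query time.
by_region = conn.execute(
    "SELECT region, SUM(sales_amount) FROM sales_obt GROUP BY region ORDER BY region"
).fetchall()
print(by_region)
```

Rebuilding on a schedule keeps the window rolling; the December 2021 order falls outside the 12 months ending 2023-02-15 and is left out.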

33:03

Because a lot of times, now we’ve got better performance.

33:06

It’s a little bit weird to understand, because we’re essentially going backwards. You’re thinking to yourself, “Well, wait a minute, you just told me to break everything out into dimensions, but now you’re telling me to put everything back into a single table.” Yeah.

33:22

But the difference between doing it here and here is that a wide table is focused. A wide table is narrow. It’s narrow in its scope.

33:34

And it’s focused on building that one report you need to get out every single month to that one group of people.

33:48

Those of you who don’t have a good data governance plan in place and are moving here: you’d better get one, and you’d better get one now.

34:01

If you’re going to move all this data out to a cloud-based solution,

34:06

you’d better know who has access to what and what they should or shouldn’t see.

34:11

All right. The minute we move from on-prem we’ve got more things we have to worry about.

34:24

Again, I mentioned FinOps a minute ago.

34:27

A lot of groups are now finding out the importance of having a FinOps team: somebody who’s not solely IT, not solely business, but somebody who can have the control and the data governance and can take care of this. Taking that FinOps data and putting it into one big table, so that we can manage it and do analysis on just that,

34:51

is a great way to go.

34:54

So it’s iterative.

34:55

We’ve got to get off of this.

34:57

That’s not in question. But it’s going to be a real challenge to try to jump

35:04

straight from transactional to wide table. Why? Because we don’t know what we need.

35:09

This intermediate step of moving it to a star

35:14

lets us break things out into the dimensions we need and start identifying what we want to do for reporting. Because if you try to go from a transactional straight to a wide table, taking everything that’s in your transactional and moving it to a wide table,

35:35

you’re in just as bad a position as you were before. And that is coming from actual folks who tried to do that: I was just talking to one of our consultants who had somebody basically take their transactional data, drop it into a wide table and see worse performance.

35:52

Because they never took the time to identify: what dimensions do we actually need for reporting? What measures do we actually need for reporting? We’ve got 34 measures in there, but we only report on six of them.

36:08

What time frame do we need? Our transactional goes back to 1975.

36:13

Well, this is going to sales reports that only have to cover 24 months.
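Identifying what a wide table actually needs can start as simply as inventorying which measures existing reports use and deciding on a time horizon. The sketch below assumes a hypothetical inventory of report definitions and a hypothetical source with 34 measure columns, only six of which any report touches, then computes the columns to keep and a 24-month cutoff. It is illustrative bookkeeping, not a feature of any particular tool.

```python
from datetime import date

# Hypothetical inventory: which measures each existing report actually uses.
REPORTS = {
    "monthly_sales": {"net_sales", "units_sold", "discount_amt"},
    "margin_review": {"net_sales", "cost_of_goods", "gross_margin"},
    "returns_watch": {"units_returned"},
}

# Hypothetical transactional source with 34 measure columns;
# m01..m28 stand in for the ones no report touches.
SOURCE_MEASURES = [
    "net_sales", "units_sold", "discount_amt",
    "cost_of_goods", "gross_margin", "units_returned",
] + [f"m{i:02d}" for i in range(1, 29)]


def measures_in_use(reports: dict) -> set:
    """Union of every measure referenced by any report."""
    used = set()
    for columns in reports.values():
        used |= columns
    return used


def start_of_month_back(as_of: date, months: int) -> date:
    """First day of the month that is `months` calendar months before as_of's month."""
    total = as_of.year * 12 + (as_of.month - 1) - months
    return date(total // 12, total % 12 + 1, 1)


used = measures_in_use(REPORTS)
keep = [m for m in SOURCE_MEASURES if m in used]  # preserve source column order
cutoff = start_of_month_back(date(2023, 2, 15), 24)

print(f"Keep {len(keep)} of {len(SOURCE_MEASURES)} measures: {keep}")
print(f"Load rows dated on or after {cutoff}")
```

The output of this kind of inventory is what drives the wide table’s column list and load filter, instead of copying the transactional table wholesale.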

36:21

So trying to jump from here to here.

36:27

Please don’t.

36:29

And this is where the star maintains its need and its ability to still exist, because even if we’re just using it as a business tool to identify our dimensions and to identify

36:44

what we’re trying to work with,

36:47

it’s still worth it.

36:51

About halfway through, give or take.

37:14

And Velsko, you’re still here. Did I give you some things to think about there? Did I help

37:20

define self-service for you?

37:48

Mark asked whether a data mart and a wide table are similar solutions for security and specific reporting needs.

37:59

So Mark, if I’m looking at a true data mart, a true data mart by the Kimball definition would be a subset of your data warehouse, right?

38:11

It would be the same thing. So yes, to some degree a wide table and a properly sliced data mart could act as similar solutions. What’s going to differentiate them, in my opinion, is whether I’m doing it on-prem or in the cloud.

38:28

And whether I’m doing it for a larger group or if I’m doing it for more tailored self-service.

38:34

OK. Say I’m building a package or a data set that I want to give to the report development team: I’m more apt to use a data mart with on-prem data, because it may cover more business areas, whereas I would move to a wide table to slice that data mart down even further

38:58

and move it to the cloud.
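Mark’s point can be illustrated with a view: a data mart in the Kimball sense is a subject-area slice of the warehouse. The sketch below assumes a hypothetical warehouse fact table tagged by business area and exposes a sales-only slice as a view; real data marts are often physically separate tables or databases, so treat the view as the simplest stand-in.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical enterprise warehouse fact covering several business areas.
CREATE TABLE fact_finance_events (
    event_date TEXT, business_area TEXT, department TEXT, amount REAL
);
INSERT INTO fact_finance_events VALUES
    ('2023-01-03', 'Sales',     'Direct',     500.0),
    ('2023-01-04', 'Sales',     'Channel',    300.0),
    ('2023-01-04', 'Inventory', 'Warehouse',   75.0),
    ('2023-01-05', 'HR',        'Payroll',   1200.0);

-- A sales mart exposed as a view: same warehouse data, sliced to one
-- subject area and to the columns that audience needs.
CREATE VIEW mart_sales AS
    SELECT event_date, department, amount
    FROM fact_finance_events
    WHERE business_area = 'Sales';
""")

rows = conn.execute(
    "SELECT department, SUM(amount) FROM mart_sales GROUP BY department ORDER BY department"
).fetchall()
print(rows)
```

Users of `mart_sales` never see the HR or inventory rows, which is the security and focus benefit Mark raised; slicing further and copying the result out would be the wide-table move.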

39:03

You’re right. That is why next month we are going to talk about data marts in Power BI: after nine months, they’re coming out of preview very soon. So, yeah, we’re going to talk about data marts in Power BI for exactly that reason.

39:25

So, Carolyn asks whether OBT is something similar to a data lake. This is where the terms start getting messy.

39:33

This is where you start throwing marketing terms in there, where we’re saying data lakes versus data oceans versus data warehouses. Yes, OBT would be more synonymous in that respect, because it is a lake of data; it’s a wide table.

39:53

I’m trying to keep the terms less marketing oriented. That’s why we have people like Scott here. You know, if you want to talk marketing stuff, Scott, I’ll jump in and help you anytime.

40:16

As for what you say about business users and data marts, yeah, you’re absolutely right, and again,

40:25

for me, the distinction is whether I’m doing it on-prem or in the cloud, OK.

40:35

If I’m using the gateway in Power BI and I’m going to some legacy system that’s sitting in a warehouse somewhere,

40:44

I would do it as a data mart.

40:47

That’s how I would approach the situation.

41:13

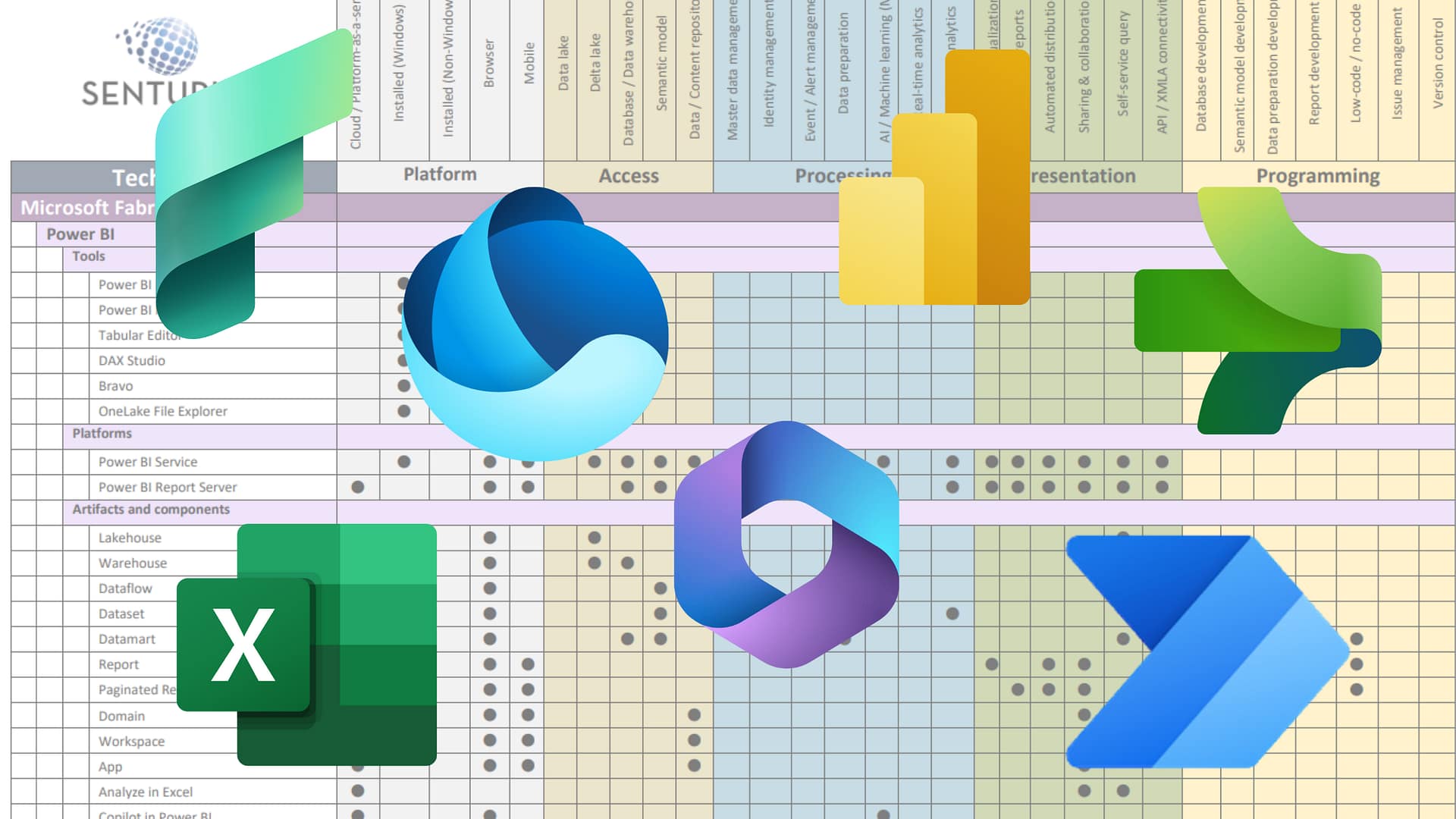

So here is a handy dandy chart.

41:20

So, Carolyn, in an AWS scenario, now we’re talking wide table for the most part.

41:30

And then again, if you’re talking about doing something like that with FinOps or anything along those lines, yeah.

41:45

This is a quick way to see everything I’ve been talking about today.

41:51

Look at which one has the fewest check marks.

41:55

Get off of a transactional system.

42:00

The 56% of you that are still in this column.

42:04

Get here.

42:07

If you’re already here, let’s talk about this. Let’s narrow it down. Let’s figure out which of your business areas would benefit from this.

42:18

Let’s figure it out, because notice that for “contains full depth and width of data,” the wide table gets no check mark.

42:25

And it really shouldn’t. Not if you’re trying to get the best performance out of this.

42:33

All right, Mark. I think you’re going to like the next slide.

42:38

This is from Microsoft’s site.

42:45

As of February 2023.

42:52

“Understand star schema and the importance for Power BI.”

42:58

So your tool vendors, IBM, Microsoft, Salesforce, are still going to tell you that you need to be on a star schema.

43:10

Here’s the article right here, if anybody is interested in reading it.

43:18

I’m going to copy and paste the link to that article in the chat window.

43:31

If you need any more proof, if you need any more validation that a star is important for analytics today,

43:43

here it is.

43:44

Because when it comes to reporting, you’re going to have better accuracy than with a transactional system. One of the biggest problems with transactional systems is that you’re not getting the same aggregation, you’re not able to summarize and you don’t have the same type of join situation.

44:05

So look: when we move from transactional to star, we’re adding referential integrity and we’re eliminating redundancy. No matter what the technology is, no matter how far it has advanced, whether it was 30 years ago or today, our biggest cost in any query is the join. It just is. OK, that is our biggest cost. So if we have successfully identified our business needs, if we have successfully identified the true dimensions and measures that are needed,

44:45

moving to a wide table can improve on that, because once again we’ve reduced the cost of joins. But we can’t get here.

44:53

We can’t get to here

44:56

until we’ve gotten away from here.

45:01

Because we need to identify those things first. We need to know what we’re looking at.
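The join cost described above is easy to see side by side. In this minimal sketch (hypothetical tables, in-memory SQLite), the star query joins the fact to a dimension at query time, while the wide table paid for that join once when it was loaded, so the report query reads a single table. It shows where the join goes; it does not measure performance, which depends on the engine, data volumes and design.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE fact_sales (
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    sales_amount REAL
);
INSERT INTO dim_customer VALUES (1, 'West'), (2, 'East');
INSERT INTO fact_sales VALUES (1, 25.0), (1, 99.0), (2, 10.0);

-- The wide table pays for the join once, at load time.
CREATE TABLE sales_obt AS
    SELECT c.region, f.sales_amount
    FROM fact_sales f JOIN dim_customer c ON c.customer_key = f.customer_key;
""")

# Star: the join happens every time the report runs.
STAR_QUERY = """
    SELECT c.region, SUM(f.sales_amount)
    FROM fact_sales f JOIN dim_customer c ON c.customer_key = f.customer_key
    GROUP BY c.region ORDER BY c.region
"""
# Wide table: same answer from one table, no join at query time.
OBT_QUERY = """
    SELECT region, SUM(sales_amount)
    FROM sales_obt
    GROUP BY region ORDER BY region
"""

star_result = conn.execute(STAR_QUERY).fetchall()
obt_result = conn.execute(OBT_QUERY).fetchall()
print(star_result)
print(obt_result)
```

The trade-off is that the wide table only answers the questions its columns were chosen for, which is why the dimensions and measures have to be identified first.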

45:09

One of the biggest problems a lot of companies encountered when they first started trying to move into these larger data warehouses is that

45:21

they felt they needed everything. I need to know everything about everything. No, you don’t.

45:28

You really don’t.

45:29

Stephen Few has a great book on that called Signal: Understanding What Matters in a World of Noise.

45:36

A lot of what you’re taking in truly is noise, so if you want to get to a wide table, you’ve got to get the noise out of there.

45:44

And going from a transactional to a star will help you get the noise out of there.

46:03

When we start moving into these other options, a star or a wide table, we definitely start getting more flexibility.

46:11

Transactional systems can be very locked in. You know, you might have to call up somebody who gets paid a lot more than most of us on here, somebody who’s going to get $300 to $500 an hour to come in and change one field for you, because they’re the only one who knows what AR32FX$NET is.

46:33

When you’re doing this yourself in a star or a wide table you’ve got more flexibility.

46:40

A star is typically going to take up less disk space than a wide table or a transactional system because, again, we’re reducing redundancy by putting things into dimensions. Now, you could in essence end up with less space on a wide table if you are narrowing it down as well as you should.
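A rough way to see the redundancy argument is to count the bytes of descriptive customer text stored under each design. The sketch assumes hypothetical customers, 1,000 fact rows and a 4-byte integer key, and ignores page overhead, indexes and compression (columnar warehouses compress repeated values, which narrows the gap in practice).

```python
# Hypothetical customers: (name, region, address).
CUSTOMERS = {
    1: ("Acme Corporation", "West", "123 Main Street, Springfield"),
    2: ("Globex Inc.", "East", "42 Industrial Way, Shelbyville"),
    3: ("Initech LLC", "Central", "9 Office Park Drive, Capital City"),
}
# 1,000 hypothetical fact rows spread evenly across the three customers.
FACT_CUSTOMER_KEYS = [1 + (i % 3) for i in range(1000)]
KEY_BYTES = 4  # assumed size of an integer surrogate key


def text_bytes(attrs: tuple) -> int:
    """UTF-8 size of a customer's descriptive attributes."""
    return sum(len(a.encode("utf-8")) for a in attrs)


def wide_table_bytes() -> int:
    # Every fact row carries its own copy of the customer's attributes.
    return sum(text_bytes(CUSTOMERS[k]) for k in FACT_CUSTOMER_KEYS)


def star_bytes() -> int:
    # Attributes stored once per customer (plus its key) in the dimension,
    # and only a key on each fact row.
    dimension = sum(text_bytes(attrs) + KEY_BYTES for attrs in CUSTOMERS.values())
    fact = KEY_BYTES * len(FACT_CUSTOMER_KEYS)
    return dimension + fact


print(f"wide table: {wide_table_bytes():,} bytes of customer data")
print(f"star:       {star_bytes():,} bytes of customer data")
```

Dropping columns a report never uses, or keeping only the rows inside its time window, shrinks the wide-table figure, which is the narrowing that can let a wide table come out smaller.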

47:05

And the last one, and the most contentious one: performance.

47:11

Those of you on a star:

47:13

there is potential for performance improvements when you move to a wide table, if that wide table is well designed, if that wide table is narrowed for the focus of reporting.

47:27

For those of you who are familiar, Fivetran did some testing.

47:30

They took a data set from a typical star schema to a wide table and ran analytics on it.

47:41

What they found in subsequent runs on that data set: Redshift showed a 25 to 30% performance improvement, Snowflake 25% and BigQuery 50%.

48:01

There is an opportunity for performance.

48:05

And again, this was done by Fivetran late last year.

48:18

And you can see that, because we’re getting rid of those joins,

48:22

we’re caching things better, we’re minimizing joins, we’re getting our improvement. But again, versus a transactional,

48:31

the star is still going to be better.

48:34

All right, we’re coming up on the end, so let’s wrap this up. So is it dead? No.

48:43

If you want to start moving into wide tables, they’re going to be best for things like prototyping, speed to market or, in my opinion, hybrid situations.

48:58

They’re going to allow us to have the best of both worlds: maintaining our on-prem data so we can maintain our data governance and keep doing what we’re doing, but starting to move these things out so that people can start doing more self-service and getting things focused.

49:16

Google BigQuery, Redshift and Snowflake: those are examples of platforms where wide tables come into play.

49:40

Especially for those of you still on transactional systems, those of you still primarily on-prem, a star schema is going to be the most comprehensive.

49:48

It’s going to give us that full breadth, depth and width.

49:52

It’s going to give us better performance.

49:59

And it’s going to give us a much, much better user experience than our transactional will.

50:08

Sharjeel, if I’m building one big table, that goes back to that earlier slide: where you put it is very dependent upon your organizational structure.

50:21

Your only choice may be to do it in the tool, because you may not have the ability to change your upstream databases. You may not have the time or the money to build a whole brand-new database somewhere. You may not have the rights. So it’s going to depend on that.

50:40

Peter, I am promoting having a hybrid. To be quite honest, I am.

50:45

At the end of the day, what I’m saying to all of you is, look: first off, plain and simple, get off of a transactional system. You want to move forward, you want to move things to the cloud? Fine, but make sure you’re coming from a star that’s been built on your on-prem data.

51:01

Then there are the things you don’t have good data governance over, or the things that require a broader audience.

51:08

Let’s get those into a star now, and then use that to start identifying the self-service modules we can narrow down that would be appropriate for a cloud-based big table.

51:29

That’s more what I’m saying. I want you to see this as an iterative process.

51:36

Identify your business needs. Identify what’s going on.

51:49

Your question is one of the biggest cruxes, and it’s why we had to talk about this topic.

51:55

For everybody, I want you to hear this question: how do I win the argument against all the other voices, including “we rely on transactional,” to get the best mix across the organization?

52:09

The most dangerous words at any corporation, any organization, I don’t care what your business is: “That’s the way we’ve always done it.”

52:22

Those are the most dangerous words. One of the best ways to do this, Vladimir, is to have somebody at the senior or executive level who’s able to drive this from the top down.

52:35

That is the hands down best way to do this.

52:39

But I know that’s not going to happen for a lot of people, and that’s where we can maybe start doing some of these wide-table prototypes.

52:47

If we can show performance improvements, then we’ll start winning them over. But it’s also about what you deliver to them. Hey, check out this dashboard I built. Hey, check out these visualizations. Hey, look at how fast I can get you to results. Look at this analysis I was able to do from this star schema.

53:15

In some cases, that might take a skunkworks project.

53:21

But that’s why we’ve got people like Scott, who you can set up a meeting with and who will be happy to talk to you about that and to come in and help make your argument.

53:33

That’s what we do. That is why we are here. If you want more of that, we can demo these products for you. We can show you on our website. We can have meetings with you.

54:12

This is how you win the argument, Vladimir: you let us help you put together a presentation and a demo.

54:30

OK, step one: move off a transactional system.

54:37

Start with that. Show them the KPIs. Show them.

55:06

We’ve also got some good resources. Honestly, there are some great resources on our website.

55:21

You want to know how Snowflake works? Here you go. We’ve got a white paper on it.

55:27

You want to talk about how to get rid of your traditional ETL to move these things? We’ve got white papers on this stuff. Go to Senturus.com/resources.

55:37

That’s your demo for today. So, in final summary: for analytical purposes, stars are still our best first step.

55:46

Transactional and even OBT (one big table) can be limited. So, Peter, that goes to your point that there is a need for a hybrid.

55:54

OK. The two things we can no longer treat as the main drivers are cost and, in some respects, performance. On cost, we’re definitely not looking at $12,000 for a GB of storage anymore, and on performance, that’s especially true if you’re already moving toward a better solution.

56:17

They definitely mean less today than they did 30 years ago, but you can’t disregard them.

56:23

Especially if you’re looking at the maintenance and everything else.

56:28

Hey, that’s Senturus. We provide hundreds of free resources in the Knowledge Center on our website, so go look at all this stuff. Give Scott a call.

56:45

We’ve been committed to sharing our BI expertise forever and ever.

56:51

Those of you who are Cognos people, join me March 22nd.

56:56

What is that? Oh, that’s next week. Join me next week; we’re going to be doing an open roundtable session where you can ask me everything you wanted to know about Cognos reporting.

57:07

March 30th, we’re going to talk about bringing Cognos data into Power BI or Tableau.

57:13

April 19th, I’m going to be doing Power Query Editor for data cleansing, which will help you with some of this modeling stuff. April 6th, I don’t know who’s doing that one, but we’re doing data integration options.

57:30

We’re here. We’ve got all this. Come talk to us.

57:34

We concentrate on BI modernization. We’ve got a full spectrum of services; we can train you in Power BI, Cognos, Tableau, Python, Azure, whatever you need to get to bimodal BI and migration, all right.

57:50

We really do shine in that hybrid space.

57:57

So if you’re looking to migrate, if you’re looking to move, please, that’s where we excel.

58:05

We’ve been doing this for over 20 years: 1,400 clients, 3,000 projects.

58:23

If you would like to be part of this wild and wacky team, we’re looking for a managing consultant and a senior Microsoft BI consultant. Send your resume to jobs@Senturus.com and tell them I sent you. I’ll split whatever money I get with you, half and half.

58:40

We’re also looking for a FinOps consultant, who will be part of our new cloud cost management product and practice. Woo, fancy.

58:57

One last question here: how can a star schema be wider and deeper than the transactional system? I’ve got one minute, so I’m going to answer that really quick.

59:11

Most transactional systems are specific to a particular business area. They are your ERP, your CRM. A proper data warehouse built as a star schema takes data from all areas. It’s your inventory system, it’s your budget system, it’s your customer management system. It typically draws on many, many systems, and it is a true warehouse of all of your data. Therefore it can be wider and deeper than any one transactional system it’s sourced from, because that warehouse is the culmination of multiple transactional systems.

1:00:01

There’s nothing that says you can’t have sales and inventory in the same schema.

1:00:08

It’s multiple stars, but it’s a single schema with multiple facts and conformed dimensions.
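Here is a minimal sketch of a single schema holding two stars, a sales fact and an inventory fact, that share a conformed product dimension. sqlite3 is a stand-in, and all names and numbers are hypothetical; in practice each fact might be loaded from a different source system, which is what makes the warehouse wider than any one of them. The sketch contrasts a naive query that joins the two facts directly with a drill-across query that first aggregates each fact to the shared product grain.

```python
import sqlite3


def build(conn: sqlite3.Connection) -> None:
    """Two hypothetical facts sharing one conformed product dimension."""
    conn.executescript(
        """
        -- Conformed dimension: same keys and meaning for both stars
        CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, product_name TEXT);
        -- Fact fed from a (hypothetical) sales system
        CREATE TABLE fact_sales (product_key INTEGER, units_sold INTEGER);
        -- Fact fed from a (hypothetical) inventory system
        CREATE TABLE fact_inventory (product_key INTEGER, units_on_hand INTEGER);

        INSERT INTO dim_product    VALUES (1, 'Road Bike'), (2, 'Helmet');
        INSERT INTO fact_sales     VALUES (1, 3), (1, 2), (2, 10);
        INSERT INTO fact_inventory VALUES (1, 7), (2, 4);
        """
    )


# Wrong: joining the two facts directly multiplies rows before summing
NAIVE_QUERY = """
SELECT d.product_name, SUM(s.units_sold), SUM(i.units_on_hand)
FROM dim_product d
JOIN fact_sales s     ON s.product_key = d.product_key
JOIN fact_inventory i ON i.product_key = d.product_key
GROUP BY d.product_name
ORDER BY d.product_name
"""

# Drill-across: aggregate each fact to the shared grain, then join via the dimension
DRILL_ACROSS_QUERY = """
WITH s AS (SELECT product_key, SUM(units_sold) AS sold
           FROM fact_sales GROUP BY product_key),
     i AS (SELECT product_key, SUM(units_on_hand) AS on_hand
           FROM fact_inventory GROUP BY product_key)
SELECT d.product_name, COALESCE(s.sold, 0), COALESCE(i.on_hand, 0)
FROM dim_product d
LEFT JOIN s ON s.product_key = d.product_key
LEFT JOIN i ON i.product_key = d.product_key
ORDER BY d.product_name
"""

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build(conn)
    print(conn.execute(NAIVE_QUERY).fetchall())
    print(conn.execute(DRILL_ACROSS_QUERY).fetchall())
```

The naive version double-counts Road Bike inventory because the bike has two sales rows, so its single inventory row is matched twice. Aggregating each fact separately and joining only through the conformed dimension avoids that fan-out.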

1:00:19

Thank you all for coming. Have a wonderful rest of your Thursday. You’re all beautiful. Get the heck out of here. Enjoy the rest of your life.