Business analysts: you are now required to have a strong understanding of key data modeling concepts.

Visual analytics tools have elevated this skill from “nice to have” to “necessity.” As self-service analytics and data experimentation have become more common, these tools have opened the data floodgates. Business users can introduce any type or quantity of data sources. IT departments, which used to manage that data in complex BI systems, have relinquished control, leaving the responsibility in the hands of line of business. The potential for flawed data is high.

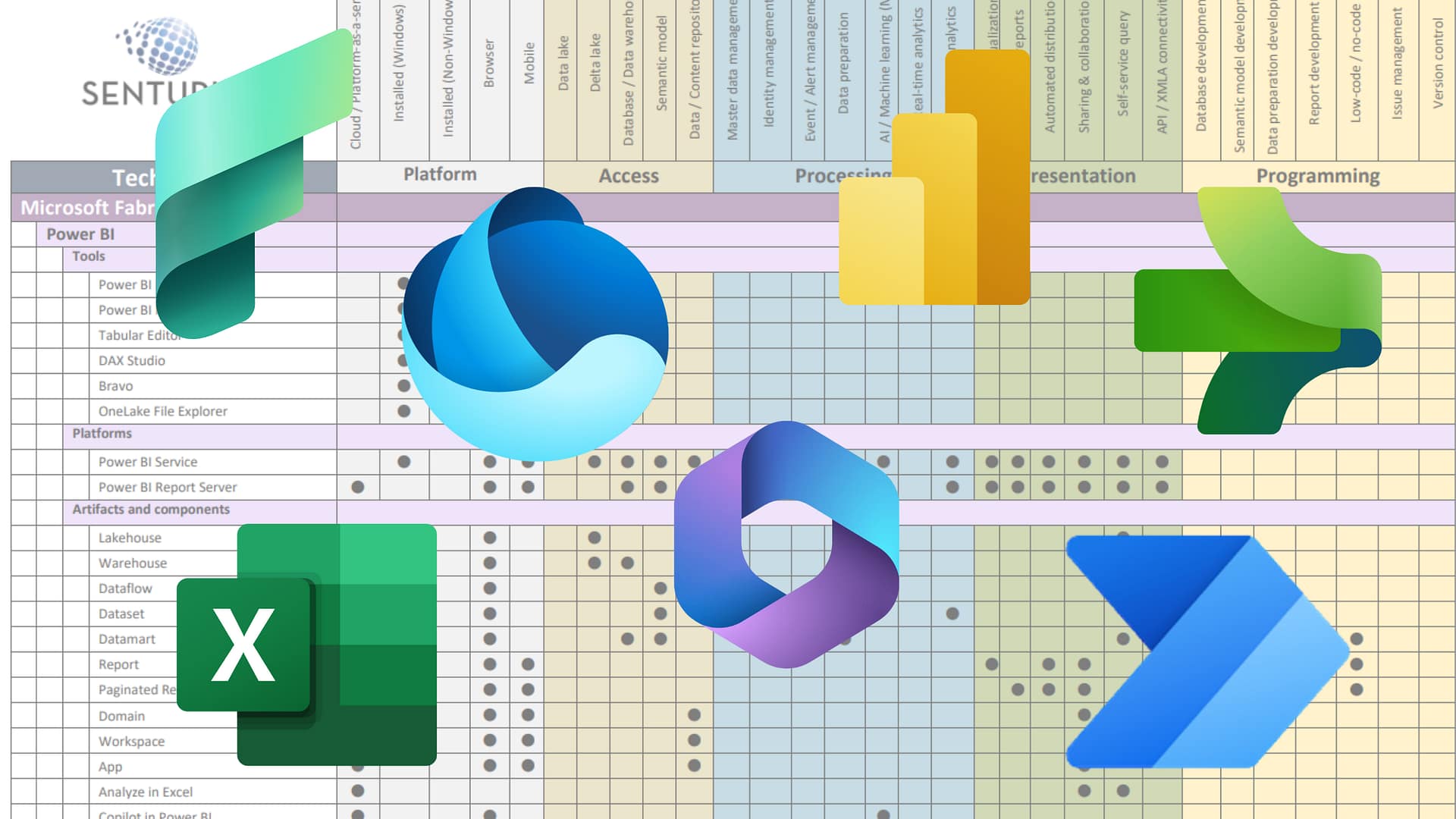

Whether you use Cognos Analytics, Tableau, Power BI or even simple spreadsheets, managing your data architecture is crucial. These tools give you power to either be a data hero…or to be the source for delivering potentially unsound data that can lead to disastrous decisions.

In this on-demand webinar we share the data modeling fundamentals you need to confidently charge forward. From the simple linking of a single spreadsheet to an existing data package, to a complete dimensional model of dozens of database objects, we show you how proven data modeling techniques and fundamental concepts will guide you toward building a reliable, stable and accurate analytical framework.

Presenter

Albert Valdez

Vice President of Learning Solutions

Senturus, Inc.

Albert has more than 18 years of experience in business intelligence education and technical training. In addition to founding and running the Senturus training division, Albert also serves in various roles in the company including senior consultant and solutions architect. Before joining Senturus, he was a Senior Education Specialist at Cognos. Albert is an IBM Cognos Certified Trainer and has his lifetime CTT and certification from CompTIA.

Read moreQuestions log

Q: Is a single fact structure good for reporting?

A: The simplest structure in data modeling is a single fact structure, meaning there is only one fact subject. The ideal structure of your data model will be determined by the scope of the reporting requirements for your project. For example, if you are only concerned about reporting on actual sales, you can design a single-fact schema. However, if you also need to look at returned items, inventory or forecasted sales, this schema would be insufficient, and you would need to edit your design to include those additional fact areas. This is an advanced modeling concept, so if you are interested in exploring more, please contact us at 888 601 6010 ext. 1.

Q: Is it possible to use unstructured data for modeling or reporting?

A: Any type of data can be modeled and reported on; however, most traditional BI tools do not handle unstructured data well. The query language these systems are based on is SQL–Structured Query Language–so to deliver unstructured data through these tools, a significant amount of transformation or manipulation of the underlying data is required. There are other analytical tools and approaches for this type of unstructured or semi-structured data that we recommend you use, such as NO-SQL, Hadoop, text mining and R.

Q: Our reporting is slow due to the volume of data. What can we use to speed up reporting? Will building multiple silos or cubes of data help? We already have a star schema.

A: We strongly believe in the star schema as a basis for design in relational environments. There are a number of other techniques and tool-specific features in the modern RDBMS world that can significantly improve query performance such as columnar data storage, in-memory processing and aggressive caching. MOLAP cubes such as PowerCubes are still a good approach in many applications as well. We can conduct an architecture review for you. This investment will pay off many times with improved performance and user experience. please contact us at 888 601 6010 ext. 1 for more information.

Q: Do you think there is a difference between analytic reporting data modeling and operational reporting data modeling?

A: Operational and analytical reporting have meaningful differences; however, the underlying data models do not change much from a logical perspective. It is true that a semantic approach (how the underlying metadata is exposed) may diverge based on end users and whether they are more focused on operational versus analytical outputs, but it is rare that one application would have distinct data models for these two audiences.

Q: Are we able to review one-to-one and one-to-many cardinality?

A: Cardinality refers to the number of potential matching rows on each side of logical relationship. It is important for the query engine to have this information to create the proper query plan, especially in multi-fact queries. Cardinality is not expressed in SQL; however, query tools that generate SQL need this information to correctly prepare aggregation plans and optimization strategies.

Machine transcript

Hello. Good morning. And good afternoon. Thanks for joining us. My name is Albert Valdes and I am going to be your emcee and your host and presenter got a couple folks on the panel that are going to be taking some questions. So wanted to get started right away. We’ve got very interesting topic here. It’s something that we don’t do as frequently as some other topics, but it’s really a concept that’s become much more important for business analysts. And there’s a number of reasons for that. So let’s get to some housekeeping items. First of all, if you’ve not use GoToWebinar there is a little control panel that pops up on your screen. You can move it around and docket wherever you need that, little green, or excuse me, orange arrow, there, that button will hide and show the panel. That way you can submit questions. We want to make this interactive.

3:53

There’s lots of folks on the event, so we don’t have everybody’s microphone open.

3:59

But, again, definitely want to make sure that we can have some, a live Q and A at the end of the session here. And, as I mentioned, we’ve got a great panel that’s going to be helping address some of those questions. So, use that.

4:12

Questions, panel, comments, feedback, back, and, certainly, you know, anything related to our topic or anything else, please use that, too, make sure that, you know, we get your feedback and input on, on the session here, so.

4:31

First question we always get, where can I download the deck? Can I view a recording? So, yeah, we do have a resource library, the deck should already be there, so you can go ahead and download that, and share that with whomever, And we’ll do some quick, clean up an editing of the recording, and click, paste that in the Resource Library, as well. That might take us a few days, and we’ll send out a note to everybody who registered when that is ready as well. So, that is kind of are typically most common questions when I had those off. And then, just ticket.

5:04

Quick peek at our agenda. This looked like a really packed agenda. But, till we’re going to do a couple of quick polls, I don’t like to spend a lot of time with that, but we want to survey the audience, especially relevant to this topic. Again, it’s not specific to any one particular platform, so we want to get a sense of what tools folks are using, as well as background and experience with the topic. And I’m not going to get too deep into the technical weeds here, but we do want to give everybody some exposure to what we are talking about here and when it comes to data modeling and one of the core concepts and why is it important? And is data modeling. The same thing is data preparation.

5:45

They kind of overlap, but we want to make sure, again, we kind of clarify what types of tasks and activities and concepts are related to those different areas. And of course, we want to make sure you understand who we are, and what it is that we can offer to help you with this process.

6:02

I mean, it’s definitely something, we find, a major challenge.

6:06

In fact, I’ll relay some stories as we go through the material here, regarding the audience that we tend to interact with AED.

6:15

You know, the difficulty that some of these topics can, can really bring. So, if you’ve not been a part of our webinar series the last several years, I, my name is Albert Valdes. I’m the Vice President of our Learning Solutions Practice Senturus. I’ve been in the business for about 20 years.

6:33

Focusing and analytics business intelligence, mostly in the Cognos and Tableau platforms, but, really, around solutions.

6:43

And, of course, my main area of focus, and my passion is in learning, and delivering knowledge, and working with clients to help enable them.

6:54

So that being the case, again, not really focusing today on any one particular tool, we wanted to see from the audience, what BI tools are you currently working with?

7:08

And so I use the word prevalent.

7:10

That’s not really that important here. I don’t want to say which you know any one. So, this is a multiple choice.

7:16

So go ahead and give us your feedback. We see a lot of folks are clicking in there, so, if you check out the poll, please click in there. You can pick as many of these as you want.

7:27

Like I said, we know the reality is that most organizations do work with multiple BI platforms.

7:35

So, don’t, again, this isn’t a one is the most popular or widely used, but which ones are in place? So, we know our audience pretty well.

7:43

So, you can see the majority there is really in that Cognos and Tableau family.

7:49

But a good proportion on up and coming platform, like Power BI.

7:54

And, you know, a definitely significant amount in the other category.

7:59

So, in the questions panel, if you’ve selected other, go ahead, and, if you want this type in which tool it is that you are using, that’s not covered, and we add a limit of five, and we wanted to use one of those buckets for others. So, go ahead and drop us a note.

8:18

If you’re interested in sharing that, I’m going to go ahead and share the results, so everyone can see where we’re at.

8:25

And, like I said, prevalence more of a prevalence on the IBM cognitive side than I anticipated.

8:31

Maybe it’s because of who the presenter is today, and my experience and background, but a good chunk. Also, using Tableau and Power BI.

8:42

Like I said, if you got a couple seconds, go on the questions panel, and let us know which BI tool that’s not mentioned here.

8:49

It’s always great info for us to have, and appreciate everyone participating there.

8:54

Great. Wow, Really, 86% participation there. And then, for my own edification, and it helps kind of guide, you know, where I want to focus on some of the slides? There’s not a lot of PowerPoint here.

9:07

But it is a conceptual topic with the idea of data modeling.

9:13

I wanted to stay on where people’s background, you know, how experienced folks might be in working with, uh, data modeling as a concept. So, go ahead and give us your response. This is a one response only, so, what category of these five do you kind of find yourself in? And, trust me, we get a lot of folks, like I said, it’s.

9:38

Sometimes we make assumptions about who our business analysts are and, you know, what is expected of them.

9:46

But, in reality, as this, the evolution of analytics and the whole landscape of business intelligence and IT role, and the things we’re going to talk about has been in flux the last several years.

10:02

We’re starting to realize there’s a big gap in what the comfort level and experience level is with our business community, and what the, you know, real requirements are for them to do this job effectively. So, as, again, is expected. Most folks kind of in the middle ground there, just about a few more seconds here.

10:27

And I’ll go ahead and close the poll and share with you so you can see who were working with actually a pretty, pretty good, uh, group that’s in the more experienced level. So that’s good.

10:41

That gives me some background in terms of, you know, where I want to work, Focus here, and in the depth of the topic, and still, you know, a decent amount that are, you know, somewhat familiar or really, Hey. This was brand new to me.

10:57

And, yeah, pretty even distribution, As you might expect, not that we wanted to tilt it that way, it was really more out of curiosity, because we do get a different, you know, different types of folks in our webinar attendance.

11:12

So, for everybody’s edification, we’re not going to get real deep into this, but the basics around data modeling really has to do with relationships between data entities.

11:24

And so, you may hear some of this more technical jargon, like …, entity, relationship diagram, whenever you see something that has a bunch of tables on a diagram that has little links between them, that’s really what we’re referring to as an entity relationship diagram. It’s what guides the design of databases, right?

11:45

And even though that’s typically something that’s already been completed, when we, as business analysts, are now looking to extract data, it’s something we should be familiar with, right? So that’s Kind of, you saw the poll results. And the vast majority of folks at least have some understanding that, yeah, there’s tables in a source, or multiple, what we call entities, or objects that we are querying when we build reports and do analysis. And there’s some sort of relationship between them.

12:14

So this describes what we call a logical model of our data. Quick screenshot here of a SQL Server, right?

12:23

So, to really simplify the database that we, you know, typical Oracle SQL Server, DB two, Informix, there’s, you know, hundreds of different flavors of databases out there.

12:33

It’s really a collection of tables and views.

12:39

Yeah, yeah, yeah. I think the poll mess up your sharing, and you share your screen.

12:45

Yeah, let me see what’s going on here. Thanks, Todd.

12:48

Yeah, sorry about that.

12:52

Perfect.

12:53

OK, yeah, so the … was the only other slide here, and I’ve ever happened before, so I’ll have to keep an eye on that. And then yeah, so again, databases are really collections that table’s views.

13:09

There’s other types of objects that live within tables, you can see, in SQL Server, you can have diagrams, and you can have synonyms. And you can have all sorts of other kind of more technical type of objects that are there.

13:21

But for the most part, when we’re talking about the perspective of business intelligence and analytics, tables and views are the important pieces of what’s in a database.

13:31

So what’s really, a table made of tables are columnar stores of data.

13:39

Table columns have important attributes.

13:43

And inside of a database, all of the actual or physical rows of data are present. So you can see a screenshot there of the query result.

13:51

And so databases can get big, right? Databases can contain millions or up to billions of rows of actual physical data.

14:01

So when we’re modeling, are typically referencing those physical objects through logical views, such as an entity, relationship diagrams, or metadata models.

14:14

A view is similar to a table, except that is actually just a logical description of data that exists in one or more tables or based off of expressions.

14:24

So when you think about a view, it’s basically a way for us to construct a result based off of a logical expression.

14:35

It’s not a physical set of rows of values, like a physical table is. So the relational database, right, so we use this term to describe the most commonly used databases out there.

14:50

There are other types of databases, like object databases, and multidimensional databases, and, you know, columnar data stores, which are really similar to relational in terms of their structure.

15:01

But the idea is that every object or entity is somehow related to the others within that database store, right. And that’s why that where the term relational database comes from. The relationships are also referred to as joins. Those are highlighted with the red arrows.

15:21

So, in this very simple schema, we have, you know, several tables that are typically, we look at this as almost like a star type of relationship, where there’s a central table that is acting as the kind of home base.

15:37

So, even though each of these external objects don’t relate directly to one another, they relate through this intermediate path or these tertiary relationships.

15:48

And so this is a very common way for us to describe an actual physical set of tables. This is another very simple example.

15:57

And so the idea is that, even in very basic systems, we always break those things down into there, smallest, logical piece. This is the most efficient way to store and retrieve data in almost every case, right? That’s not always true.

16:13

Again, we have different paradigms within databases now, in data storage, with Hadoop and file system storage. And, you know, so things have changed in the last several years.

16:27

But the vast majority of systems, in terms of databases, are made up of these separate entities without relationships. This is the core concept that I’m trying to get across. We have issues.

16:41

And so, for those of you that aren’t really familiar with how a reporting or a dash boarding or a BI tool works, basically, its main purpose is to allow us to drag and drop objects into a report. For instance, I’ve dropped these five columns into a list.

17:02

And the tool is generating this query for me behind the scenes.

17:07

And so whenever we need to get information from a database, a query must be designed and executed.

17:14

The language that’s used in relational systems is called SQL or structured query language.

17:21

So if you hear SQL or SQL, that’s what we’re referring to.

17:25

We’re not all out there writing SQL code. Again, the reason is that the BI tools are designed to do that for us.

17:32

That was really the big revolution in the late nineties, mid to late nineties, was now, hey, we’ve got great tools that allow us to graphically interface with them by dragging and dropping, and designing reports, and not having to worry about the proper select statement or the proper where clause.

17:49

And so I wanted to highlight the core piece of this, which relates back to these lines that connect these entities.

17:56

Those relationships or joint appear in the structured query, as what we call the where clause.

18:06

This tells the database, how the different objects are related.

18:12

If we did not have a join between the entities, we would get a result, all right.

18:20

So, if you have poorly formed, or poorly structured query, it doesn’t necessarily mean the database is going to come back and say, well, sorry, here’s an error, or you did something wrong.

18:31

The real risk is that it will return a result set, but it may be not 100% accurate.

18:39

It might be, without the join, something that we call a cross product, or a cross join, typically cross joins are not useful. They’re not meaningful and will probably bring down your query engine.

18:51

OK, the database administrator is going to come back and say, hey, what do you do? And you’re sending a bunch of queries out here. It’s running forever and ever. It’s intersecting every row on every back table.

19:01

And it’s just bringing the thing down.

19:03

So this is why we have to be aware of how relationships behave.

19:10

And so this is also important not just when you’re querying a database with many tables.

19:16

But as we expand our scope of analysis, and start introducing other sources, like spreadsheets, and CSV files and tools that may produce some sort of extract, that we then want to link to a source from a different tool. That’s when we really are now being introduced to this concept, when in the past, that we just let the BI tool generate the query for us.

19:43

Right, and that’s Kind of where I want to take, this is expanding beyond just the standard, how the BI tool generates queries.

19:51

So back to this same concept, right, even though all of the entities, the table’s outside here on the right-hand portion of this diagram are not directly linked to one another.

20:04

The question I always ask is, from this diagram, in this system, does it show me that I would be able to determine which customer bought a particular product in a particular year?

20:16

And the answer is yes.

20:18

Because even though products, customers, time, and the billing details don’t necessarily directly relate to each other, for instance, customer and product, don’t have a direct link.

20:31

There is a path between those objects within the Logical Model.

20:36

All right.

20:37

So as we get into the more deep topics of architecture and database design, that’s a concept that we’d like to get across. And then just again, just knock out some vocabulary, get everybody comfortable with this, and then you’re going to see them just a second.

20:56

This is important because the tools themselves kind of follow this, even though we, you know, make fun of Venn diagrams with the different types of joins, the Venn diagram is the best way to describe it, right. So, if you think of A and B as being two different tables are two different sources. So, A, might be a spreadsheet, and B might be a table from your database. And you want to create a report, or a dashboard, or some sort of query that pulls data from both of them. The most common type of join, in case, in most cases, the BI tools default to what’s called an inner join.

21:33

So, when new result comes back, the report, the list, the dashboard, whatever it is that’s presenting, that results set, is going to only include the rows that are common in both tables, OK, This is your most common type of join. It’s what, if you let the tools do, what they do by default, it’s what they’re going to default to in almost every case.

22:00

And so that’s the most efficient kind of joint because it’s the narrowest result set so it produces the smallest number of results.

22:08

It’s the most efficient way for the database to process.

22:12

Look at the next example, which is what we’ll call a left outer join, which table a, just because it happens to be in the left position in the way that we structured the query.

22:23

But don’t worry about left versus right.

22:26

It’s just a question of how the objects or tables are position in relative position to each other in a particular modeling exercise. So some people get caught up and say, is this a left outer join or right our dream? Well, it depends.

22:42

Which table are you referring to? That is in the left versus the right? Is it your orders table on the left side? Is that your returns table on the right side? That’s the difference.

22:50

So there, you’re saying, regardless of whether or not there’s a matching value in Table B, I want my report to show every row in Table A and all of the columns from Table B, where the linked column is equal to the value in table eight, right.

23:12

So we’re not getting everything in table B, but we’re always getting everything back from table A, only the matched rows from table B, based off of the relationship. Definition, will return, the left, outer join. The right is the exact opposite.

23:26

Again, just a question of what relative position or the tables in, the full outer joins says, give me all the rows back from both tables and match them up on the logical relationships. So there still is a logical relationship that drives the result set and a full outer join.

23:48

Full outer joins are rare, but they are important in more complex systems when you’re querying against multiple areas of what we call fax or transactions.

23:59

So for instance, if you’re querying orders and inventory.

24:05

What if your report needs to show all of the inventory values, regardless of whether or not an order was placed in that particular time period?

24:17

And also needs to show all of the orders that were placed at a time period, regardless of whether there’s a matching value in the inventory?

24:25

Because, without that, you would miss in that report, orders where there was a no inventory available and vice versa.

24:33

The inventory result wouldn’t show unless somebody had placed an order for that product in that particular time period, based off of the constraints of the query. So we do have use cases for pretty much. All of these, I put a big slash through the Cartesian cross product.

24:48

Again, it’s not something that is, has absolutely no potential value, but it’s unlikely to have meaningful value when you intersect or multiply the rows in table A with the rows and tables.

25:03

But if you produce a query and you don’t have a where clause, oftentimes, the Cartesian or the cross product or cross join result will come back. You’ll see results. It’ll take a really, really long time, and you’ll get way more rows than you expect. Some of those rows will be correct, and the vast majority will be useless.

25:21

And I love this example because this is how I learned how to do data modeling when I didn’t create proper join constraints when I design queries.

25:32

I get these massive results and I’d say, wait a second, something’s going on here and then.

25:36

Yes, you know, some of us have to learn the hard way. So, let’s take a look at what this looks like in a BI tool.

25:42

I love the Venn diagrams because they look so much like what you see in a tool like Tableau, for instance. So, Tableau Desktop.

25:50

Everybody’s done this exercise where we’ve gone into the sample data in Tableau and here is our good old sample superstore, right?

26:07

And we say, well, all right, let’s see what’s in the sample superstar. Let’s drag over the different sheets.

26:13

So there’s this worksheet and the Excel file that shows what was ordered, you know, all the things that they sold, and then the people, these are the customers.

26:22

And it creates a join. You can see are not fun, little Venn diagram. It’s an inner join.

26:26

And this sample also has those things that were returned, which I always thought was an interesting concept.

26:34

And so, this is where I surveyed folks that go through our Tableau Fundamentals and our intermediate training folks that are typically really focused on, How do I create cool looking dashboards with Tableau and Visualizations is really a strong tool for that and so, you know, Hey, let’s use the superstore sample and let’s do some examples.

26:55

And when you bring all these three sheets over, you can see that there is an enter join that the tool defines between orders and returns, and the same thing between orders and people.

27:09

And so, I asked the question, how many of our folks attending training are aware of what the impact is of this inner joy?

27:25

And we actually have an extra size.

27:26

I think it’s not even into our intermediate class, to where we say, let’s focus on this piece, because when I, um, use this default.

27:39

Setup, and I go to a worksheet here. Let’s take a look at what the results returned, because what I’d like to know is what percent of our order amounts, the different quantities here, are return, right? Or how much of our sales is actually return.

27:57

And based off of the default logic that Tableau produces.

28:04

Let’s see if I want to, for instance, look, again, at my measures here are, let’s look at Sales and the DoubleClick Sales.

28:11

And, it says that, I have a total of $785,000 in Tulsa, OK, Great.

28:20

Now, let’s see if how many of these were returned.

28:23

So what I want to look at here is under Returns, I want this item here and it looks like everything that we sold was return flag is equal to, yes, so that’s a real good indication that something’s wrong with my logic, right?

28:45

So every single sale, it’s also returned. It probably doesn’t pass, you know, the common sense test, right? So something’s going on here.

28:58

And so let’s take a look at our data source again and see what’s happening.

29:06

And again, if I look at the inner join, I can see based off of what we’ve learned, that every row in orders must match every row in returns on order ID. So that makes sense, right?

29:18

It’s, of course, saying that in the result, they both have to be true, meaning I’m not seeing any orders that were not also return.

29:28

What I really want in this relationship is called a left outer join. Give me all the order’s whether or not they were returned.

29:35

And so Tableau makes it really easy for us to define the join.

29:39

Correctly, go back to our sheet. And now we can see that the return flag does sometimes have a null value. And, of course, we know the 785 K is where’s turn on T S. So, very, very, different results.

29:54

Based off of that simple relationship that can be easily overlooked or assumed to be correct, because, again, the BI tools will typically default or something like an inner join for these relationships.

30:09

All right, so now, what I can do is, I can create my calculations. So here I can do a at a table calculation.

30:21

And what I want is a percent of Total.

30:29

And I want to show this Table across. And so this will show me which percent were returned and which ones weren’t.

30:41

And again, without my, join, being properly defined, I wouldn’t be able to see, first of all, the accurate results of just what I sold, but also, now I can calculate that 73% of my sales were not return. And 27% were, which is still a pretty high percentage, but certainly not the same as we saw.

31:04

Another fun little thing here, I can drag now, my sales measure, over to my label macron, drop some additional info, so, I, my Y axis, when I did my cow, turn to my percent of total sales.

31:21

And, so, I’m just dropping that, measure back over onto a different mark, gives me totals there in the display, so as this fun little thing here.

31:29

So, that’s one example of, a very basic change that, in a Tableau environment, you’re going to be wanting to be aware of and, let’s take a look at something here. So, in IBM Cognos, the latest version, actually, for a couple of years now, since we went to 11. We have this concept of what we call data modules.

31:50

And data modules are very powerful way for the end users to create their own design.

31:57

And they can be based off of packages, or external files, or datasets or modules, so you can use your frame or manager package and link it to another package. You can use a fair graduate package and link it to an Excel spreadsheet.

32:09

You can go in and browse tables in a database, so I’m going to look at a data …, what we call Data Server Connection.

32:18

That’s what these things are here.

32:20

And this is one that’s been established.

32:22

So this is a view to the entire set of underlying tables and the Go sales sample’s schema.

32:30

And so, I’m going to use this as my basis, so I don’t expect a lot of end users will be building models from, you know, expansive sets of, you know, tables and databases.

32:43

But, no, the possibility is there. And one of the concepts that I really wanted to bring home today is the idea that, you know, hey, now that we’ve been given some of this freedom, we now have potentially this responsibility. So I’m going to select tables from my schema.

33:02

From, this is just a SQL Server database, the good old, great outdoors sales, and I love going through this exercise because I look at what’s available.

33:13

Say, you know, when I know I want to build a report based off of some combination of these tables. I’m not sure which ones I want, so you know what I’m going to do?

I’m going to go ahead and just bring them all.

33:22

Right, why not?

33:26

And so, just like as we dragged the Sheetz from the Excel file into Tableau, when I select all the tables from the database in the Cognos Data module, it does its best to do create these relationships based off of what it sees in the underlying tables, what we call primary and foreign keys.

33:47

I’m not going to get into what those features mean. But, basically, it’s saying, hey, these, I’d see these as common elements amongst these tables. Some things may not have any common elements that it discovers.

34:00

And, I build some relationships, one of the things you’ll notice in the Cognos diagram is that it also represents the cardinality.

34:10

And cardinality is another concept that’s important for data modeling.

34:15

So let’s take a look at one of the key table’s in this diagram here.

34:22

I’m going to move things around, and it’s my order details table, and the order header, it’s going to be way down here.

34:35

And what’s cool about this degree of separation, this diagram has some cool features to it.

34:41

You can move things around, and you can pan through the diagram, and as you can see, the one degree of separation shows me all the tables that are exactly one relationship away. If I move this up, it shows that what we call tertiary relationships and that goes on and on and on.

34:58

Obviously, if I took it all the way to the end, it would just highlight everything.

35:01

Because at some point, um, in this particular database, schema, everything has a path to all of the other underlying tables.

35:10

So this is my first order relationships from my main area of sales where I track things like you can see over here.

35:19

These are the quantities and costs and prices of the things that we’ve sold and then those have some key relationships to the what was, you know, whether things we return, what’s on the order header, which is stuff like the date, and who was the customer, and who was the sales rep, and, and so on.

35:39

So I want to go from order header, I can see those pieces there.

35:44

So, this is just another tool that allows us to kind of understand how the BI system is, hopefully assisting us in defining relationships.

35:59

But at the end of the day, as you saw in Tableau, I have to be very careful that I am monitoring how this logic has been defined, and that the logic that is being implemented in my model meets the business requirements that we have, OK?

36:16

And so that’s ultimately, the goal is what business outcome, do we expect? Again, if you think about the Tableau example. Right. Before I change this to an inner to a left outer join, my result was that I would only be able to see orders that had been returned, right?

36:38

And until I make that fix, that’s the entire scope of my, my workbook.

36:46

Right? And so, these sources that we define become extremely important.

36:52

So, let’s go back to some of the other considerations. So, you saw in the IBM Cognos tool that it’s also expose something in the join definition called Cardinality.

37:05

Everything here is important and is all about how our business needs fit the, the model in terms of the outcomes right? Cognos also exposed to something called join filtering, Right? So, if you’re interested in this, we do have a depth training around how to implement these concepts within the different tools.

37:31

So, again, here’s another concept that has to do with, well, how are your measures treated? Right?

37:36

So, a measure that gets summarized or aggregated as a total, most measures do, but not all.

37:45

Sometimes we need to do a count or an average in order to have that measure be meaningful in the aggregate, and so, we can control that as well.

37:57

All right, so, when we do modeling, not only is the relationship and how entities are joined important, but also how the features of that model behave. And the most important of those features is, are the measures, the things that gets summarized or aggregated. What we’re doing is creating what can be considered as somewhat of a rigid or singular logical design.

38:22

You can’t change this design in each individual report, once you publish that data source or that model.

38:28

Reports that are based off of it rely upon that underlying definition.

38:33

However, what we’re really doing here, when we’re defining measure behavior and measure properties, is we’re setting what we call the default behavior.

38:42

And so, those types of things, for instance, the number format or aggregation of a particular number.

38:50

Each report author or each dashboard author could go into their particular representation of that, and change and say, you know what, for this particular report, I want to see the average revenue, for whatever reason.

39:04

So setting the default behaviors is important.

39:07

But it also is something that can typically be overwritten by the entries. So, what risks does this represent, right? So, again, this kind of new world of self-service, more modern business intelligence.

39:21

Where’s the control, Right, if a user A, defines a model a certain way, and user B takes the same set of data, but models it’s slightly different, which one is correct?

39:32

Overall, right, the overarching concept here, you know, is it being done, right? So, we give you some best practices.

39:41

Don’t plan to model forever report, meaning each and every time you have a requirement, don’t think that that requires you to build a model a 1 to 1 type of environment.

39:53

Four models and reports, right?

39:57

You want to do something that’s more universal, holistic. It doesn’t mean that you’re now stuck with, going back to the same paradigm of, you have one model that everybody uses, but you want to centralize and minimize the redundancies of models as much as you possibly can.

40:18

OK, self-service, and doing, you know, one-off modeling is great from an experimental standpoint, right?

40:25

But, look at your use cases, right?

40:29

At what point do we need to have a governed model, right?

40:36

And the Dilbert. No representation of this is great raises.

40:42

A very common type of thing that we get into said, well, you know, we don’t really trust the source, all right, well, you know, at what point in time do we get back to this concept of governance?

40:54

All right.

40:54

So take a look at what’s possible, um, again, in the tools that we saw, right?

41:03

So there’s a great amount of potential places where we can make some mistakes, right, think about that.

41:12

Massive tables I brought in here from just this one databases.

41:15

Is it even very complex schema here?

41:20

But it gets overwhelming pretty quickly, and we need to now step back and say, well, wait a second.

41:27

If I’m relying on this model to produce meaningful results, who’s in control, right? What rules are we following?

41:37

What governance systems do our processes? Do we have in place?

41:44

The next concept goes into Data prep versus data modeling, right? So data preparation is, you know, they do intersect. Right.

41:52

So we all know Data prep, which encompasses data modeling in our context, can take up to 80% of the modern business analysts time every day. Right.

42:02

That’s the metric that we keep hearing that Data prep is taken up all this time. And part of that is making sure you have the right joins and relationships and all that.

42:12

But at all, part of it is the fact that the data that we typically get to work from is ugly, right?

42:20

And full featured tools, like all tricks, are designed to reduce the time required to perform Data prep, and also to add value to the task, Right?

42:30

So you can see that this is a good screenshot of an all tricks workflow, where you’re doing a lot of cleansing, right. You’re adding, enhancing by adding calculations. You’re, you know, defining aggregate behaviors, renaming, right. I mean, this can take a lot of time.

42:46

And so part of that is data cleansing data transformation, doing things like bidding and grouping, adding Couse, adding filters, and yes, MOOC, blending, and integrating multiple sources.

42:58

So Data prep is, I guess, you might want to think of as kind of the parent type of activity and within their data modeling in terms of what we’re focusing on here, and the concepts of joins, and all that really falls within that as a subset of the Data prep overall Data prep task.

43:17

OK, so why is this more important now, right? We didn’t really talk a lot about this 10, 15 years ago, because we really relied on BI tools to do it for us, right?

43:28

Now that we have, in almost any organization, the ability to work across many, different BI tools and I’ve just got a sample of them up there.

43:39

I may now need to take something from Cognos and bring it into Tableau.

43:44

And I may have existing sets of data in either of those target environments.

43:49

So, now that I’m merging data from multiple tools, I didn’t know how to do it correctly, Right?

43:59

So, as IT has now evolved, to become more of the infrastructure and enablement role, as opposed to the ones responsible for delivering us data models and delivering us standardized reports, right?

44:12

That those days are not completely behind us, but for the most part, IT is going to say, hey, you want a BI tool. You want self-service. Great. I’ll set up the infrastructure. I’ll stand up a database and you’re on your own.

44:24

Build, whatever models you want and, you know, at your own risk, right?

44:29

And so, that’s enabled us. It’s empowered us as analysts and as lines of business. But, again, it, it adds this additional responsibility.

44:38

So think about these overarching changes in the ecosystem, and realized that this has really now come into impact the need of the business analysts to become really familiar and comfortable with the concept of how to blend and merge and integrate data sources. And, of course, number three, more data, more data, more data, right? Stuff has come in from all over the place. We have more systems out there, collecting data. We have unstructured sources. We have feeds from other systems, not just BI tools, right?

45:15

And so as we get inundated with this, and we want to make sense of multiple different systems, and be able to present a holistic view of our performance, data modeling becomes a tasks that business analysts, that the person developing the dashboard, and building the report is going to have to be a little bit more familiar with.

45:38

And, you know, this is this, is it right? It’s all about freedom, right?

45:44

The freedom to do what we want on our own IT is about standardization and centralization in those paradigms of enterprise.

45:56

Traditional BI are, are still around for certain use cases, but for a lot of others, it’s really now about hour, taking ownership of that.

46:08

To reduce time to decision.

46:11

To increase the scope of what’s possible, but also the responsibility that comes with that, which is making sure we create accurate, reliable, govern data models, right?

46:26

So, what we can offer for you, this?

46:30

There’s a lot of different kind of ways that we can engage. But, really, what we found the most effective when clients are struggling with this issue is small group mentoring.

46:41

You know, this isn’t necessarily, let’s build out a massive six month project. It’s probably have some ongoing tasks.

46:47

And you could use an expert’s advice in, you know, how you’re rolling things out with Tableau or Cognos or Power BI or maybe some combination of those tools and what the right tool to use is. And, you know, those aren’t things that you’re going to go to a classroom and get. Get all the answers from. Right.

47:04

And, again, it’s, it’s not something typically that comes into a, you know, really well defined project. So, engage with us and let us know, you know, where we can kind of help you. We can be, do work with you in increments as small as, you know, 2 or 3 hours at a time.

47:22

So, we have a lot of folks that have the right skill set and we can assign them and prescribe, you know, solutions that are much more micro targeted at exactly what you’re trying to do.

47:34

So that’s really where, you know, we find, we’ve added value to folks that are, Hey, I’m great with the BI tool. I’m great with, you know, building reports, but I’m just, you know, has struggled with some of the data integration pieces.

47:49

So send tourists as an organization, you know, are vision is to really bring decision making, you know, to the forefront of, of what every organization does and to have that be driven by, by data, right?

48:06

We really believe in this philosophy that the right decisions are made when they’re based on facts based on reliable information and that piece that takes all of the craziness on the far left-hand side here and makes it meaningful, and produces a useful and timely and accurate.

48:29

System of support for decision making is really what we’re, we’re grayed out and understanding, how to translate, what the business needs, and what the underlying architecture needs to look like to provide that to provide that solution.

48:44

And, so, it really is, you know, about architecture, but it’s also about expertise within different tools.

48:50

And so, we really have, um, focused down on the kind of three key suites.

48:57

Here are platforms, Power BI on the Microsoft, a stack and Tableau, and then the IBM Cognos Analytics Stack.

49:03

It’s really, where strength of experience are is, but, again, it’s not just about the tool.

49:11

We know that there’s a lot of factors that go into the success or failure of analytics and business intelligence projects, and we have a lot of, not only great resources, but also software solutions that allow us to really provide the right fit to your particular need.

49:32

And regardless of the size or location or industry that you operate in, we’ve got a team of experts over the last 18 plus years, delivering solutions across just a significant broad swath of the, of every enterprise and every size, and type of organization that you can imagine. I think we have our Q and A slide at the very end here. So, I’ll just Kind of crank through this.

50:00

We do have the next event coming up in a couple of weeks and pros and cons of moving BI to the cloud, right?

50:07

This is a hot topic, and it’s actually been something, I’ve working with a lot of clients right now that are still in this, you know, hybrid, doing things on prem, maybe moving slowly to the cloud.

50:21

That’s up good approach, but why, right?

50:25

There’s a lot of considerations on both sides, right?

50:28

So, definitely join us for that register, even if you can’t make the live event.

50:33

We always do recordings, and send out resources, and check out our library, existing resources, the things that we’ve done in the past, our blog.

50:42

We try and keep that current with new content on a regular basis.

50:47

And there’s a great search tool. I didn’t really use it for a while.

50:51

And I was surfing through a bunch of content recently, and went and found something really quickly by just searching one of the keywords in, in the deck.

51:01

And I found some, some really valuable resources there. So take a look at our library.

51:09

And, again, let us know if there’s other topics that you’re interested in, and of course, we have a full breadth of learning solutions or from, you know, your traditional classroom training, customized group training, and self-paced e-learning is another offering that’s relatively new for us.

51:26

So if you’ve got, you know, a topic that you’re interested in, where e-learning might be the right fit.

51:32

Or if you have a large group, and you want to bring an e-learning, or computer based training resource in house, let us know. We can offer that as well. It’s a really cost effective way to, to meet those needs.

51:45

That’s a real compliment to the other, types of treat it more traditional classroom lead, instructor led type of learning. All of these things fit together. Again, think about also bringing resources that can work with groups of 4 or 5 to solve specific problems, and do it in a very short time period.

52:07

Those are, you know, oftentimes that not a traditional engagement, but something that can be really valuable to address immediate needs and deliver value in a very short period of time.

52:23

So, lots of flexible solutions definitely let us know if you’re interested in engaging with, with any of our experts and that will lead us to Q and A So I’m going to kind of take a look at some of the questions that I know Todd’s been managing that while I’ve been speaking.

52:41

So I’ll let him drop me any of the.

52:46

Yeah, so this is actually a first for me. I don’t think we’ve got a single question so you must have explained everything perfectly and very clearly.

52:56

All right. I’m looking through this view.

53:00

You get that last one. Is a single structure good for reporting?

53:04

Yeah, so Syria yeah, Thank you for that question. This is kind of, you know, I was debating about how deep into some of the concepts we wanted to go.

53:15

And one of the things that I mentioned was apologize my, looking through the slides here, but basically, when we start talking about, you know, some of these, you know, schemas and different designs.

53:31

The question is related to, you know, whether or not it makes sense to create a model that is related to just, one, what we call fact, area.

53:46

And this is a good example, typically, when you hear the term, like star schema or dimensional model, it usually means that we’re designing a structure that takes the, our core area where our transactions are, our measures reside.

54:05

And then positions the, the met will be called dimensional tables, the things that describe what was transacted. So, for instance, when an order is placed, we get an order number, and we the product that was ordered and the number of units and the price.

54:24

So a lot of things that happen in that transaction are valuable, because they tell us, what measure values are associated with that transaction.

54:34

The supporting information, like the customer name, and their address, and their phone number, and their e-mail on the product.

54:44

Description and product color, and product packaging, and what order channel they use. And all of that is typically described in one of these other tables. And the reason is that if we describe all of those things on every transaction, the transaction records would get very wide.

55:00

And it would be very inefficient for our databases and our systems, to process thousands of attributes that we might want to see every time a transaction or an order is placed.

55:11

And so we store that information, which doesn’t change as frequently in these other outlying tables and then we link them through.

55:20

So for instance, the time dimension is based off of a day key, so there’s just a very small, maybe, you know, eight byte type of field here that describes the date. And over here I have all of my other richness around my date. What was the month and what was the quarter in one day of the week was it? And all of those things.

55:41

And so the question relates to, hey, if I have just this one fact or transaction area.

55:51

I can build some reports, but, but not everything, right. I won’t like, for instance, this training tells me, and you know, all the different training sessions that employee may have gone to over a period of time and all that kind of stuff and whatnot.

56:03

Part of my organization they belonged to and what the class was, but I don’t know anything about that. Employee’s performance, As, Let’s say, these are my salespeople, and so there’s some other area that shows all of the sales, like the orders I was describing, and links it to the same employees.

56:25

So that would be a model that has more than one fact, or more than one transaction area to it.

56:32

So the reality is, most of our reporting is going to require us to view, uh, history across multiple fact areas, or multiple transaction areas.

56:46

And that just basically is an extension of our design, right.

56:51

So if we, again, getting a little bit deeper into the architecture side of things, then using the what we call conformed dimension design.

57:01

So if the sales is related to the employees, I can then link over to my sales transactions and create another star, right, Or there are other schemas like Snowflake, schemas and hybrid schemas that provide the same capability to create reports off of multiple transaction areas when that happens, and if that becomes something that we now have to deal with.

57:31

As business analysts and reward mom managing these models, that adds another layer of complexity.

57:38

Because what can potentially happen is that we may have some transactions that happen at a particular, what we call grain or level of detail.

57:46

So, for instance, orders happen every minute, every second.

57:51

So, in my view of time, I need to be able to summarize my orders all the way to a very low level of detail on my time dimension.

58:04

However, I may also have measures in an inventory table, and I only track my inventory for each of my products one time a month.

58:12

So, that happens at a different grain, or a different level of transactional detail.

58:17

And when I report off of those two areas, queries can, unless we give them, through our model, the appropriate amount of information, then, it is possible that a query tool will inaccurately aggregate or summarize the measures.

58:39

Yeah, the incorrect grain or incorrect level of detail.

58:43

So, I know I’m going a little deep into some of the technical aspects of this, but the idea is that if we are now doing all the modeling within the BI tool.

58:52

If I go to Tableau and I bring in orders and inventories and everything from a bunch of different spreadsheets or different database tables, I’ve got to be aware of those potential pitfalls of my design and whether or not I’m managing that through the joins appropriately based off of how I’ve defined joins.

59:14

Remember that in Cognos? We even have the ability to define things like cardinality.

59:21

And so, if I go into the definition here, I can scroll down here and I can see that this is, I showed you a screenshot of this. So we talked about the inner versus outer joins and is it a 1 to 1 or 1 to many? We’re providing information to the query tool.

59:38

So that, hopefully, generates the right underlying query when we grab items from these different tables and put them into a report.

59:48

And so, all of it is based off of our design.

59:51

And so, you know, Kind of to the question of when you work with a model that has multiple, you know, here, I have my orders, but there’s nothing stopping me from introducing new data.

1:00:06

That, you know, comes in from a different schema. That’s why I only have one schema loaded.

1:00:11

But, again, I can bring in my inventory data, and now I have to make sure that my model reflects the appropriate logic in terms of how inventory versus orders are going to be aggregated.

1:00:25

So, it gets complex quickly.

1:00:27

It was a simple question.

1:00:29

I know, but, again, this is a topic that hey, if we’re now responsible for this, it’s, it’s very critical that we understand those concepts.

1:00:40

Great. Thanks ever. We’ve got about just a minute left here. We had a couple other questions.

1:00:44

One of them was a, about using unstructured data, for modeling or reporting.

1:00:52

Um, yeah, and the unstructured data, right? So, there’s different schools of thought that, you know, data always has some structured, so, but, yeah, the reality is, is that we’re really working from a, you know, most BI tools.

1:01:07

When we look at modeling, expect the data to be in some sort of relational structure.

1:01:15

Some tabular structure, right.

1:01:17

Unstructured data can refer to things, you know, that would still be potentially living in a table.

1:01:22

But, for instance, one of the fields might be a comments field that doesn’t have any real structure to it, is just a bunch of formatted text, right? So, how do I relate that text to other elements of my data?

1:01:37

So, certain tools, like an all tricks and other Data prep tools, have features built into them to mine that text to provide you some, you know, kind of create structure where there isn’t any.

1:01:55

So, I don’t want to say, you know, it’s not possible but when you’re working with, you know, like data modules in Cognos or with the typical data sources in Tableau, they support any number of types of sources like database types, for instance.

1:02:16

But, they expect the data that you’re joining to have some no way of creating that relationship.

1:02:25

So, it, depending on the tool you’re using.

1:02:29

Unstructured data might be more in Tune, like SPSS Modeler or all tricks is a really good place for that.

1:02:40

But, yeah, when you’re really working with something. Let’s just say, Hey, I want to create a really simple, what we call SQL join.

1:02:47

Um.

1:02:48

Joining on Unstructured Elements is probably not going to work right?

1:02:52

That’s a great question.

1:02:54

I see the other questions here. Let me go through them Kind of quickly, because we’re out of time here.

1:03:00

Recording is slow, do, due to the volume of data. So, if the volume of data is causing the reporting to slow down, you know, it sounds like you’ve isolated the cause of the performance, right? So, BI tool can only return data as fast database, can bring it back.

1:03:17

There are a lot of ways to tune databases.

1:03:20

Um, I don’t, we don’t believe in, you know, creating silos of data because that limits the scope of what you can.

1:03:28

Um, no answers that you can provide.

1:03:32

Cubes are a really good technique that have been around for a very long time, but again, depending on the volume and the technology you’re using, building a cube may or may not be an option.

1:03:44

I like to pursue that, typically, as, as one of my primary avenue’s.

1:03:49

If I have a lot of data, that’s, you know, because it’s got Kube allows you to pre aggregate and consolidate.

1:03:57

But there’s other technologies, like columnar based storage and tuning on the database.

1:04:03

We’ve found that, with modern database systems, if you really take it to that, as far as the optimization can go, you can really squeeze a lot of performance out of the underlying system. That was not possible, you know, 10, 15 years ago. So I would look at that, first, I would look at cubes, maybe second.

1:04:29

Stripping the data out into, you know, smaller subsets is not my avenue of choice, just because, again, you’re, you’re limiting the scope of analysis based off as something that could probably be solved in, a better way.

1:04:49

And that, is there a difference between analytical reporting and operational reporting in terms of how you design your models?

1:04:57

There can be, we typically promote a, you know, approach that provides flexibility, so depending on, it shouldn’t be dependent upon what type of analysis you’re doing. Your underlying architecture should be designed in a way that supports everything.

1:05:18

But that again goes a little bit deeper beyond really modeling within the BI tool.

1:05:23

So I would say, Tiffani that the no individual use case may determine that I have a model in my BI environment that supports a set of reporting requirements and a slightly different model of the same underlying data that is supporting a different set of use cases.

1:05:49

That’s possible.

1:05:51

But again, you really want to look all the way upstream to your underlying data architecture.

1:05:58

And usually there, we want to go with the singular design.

1:06:03

But on a reporting use case, by use case, you may end up with something again, within the front end tools, that that does have, read some level of redundancy in terms of what the potential output is, versus, you know, analytical versus operational.

1:06:24

Those are very specific.

1:06:25

So, again, I would say, underlying architecture, no, I would really think I’d have one, but BI representation of, of, uh, a particular data model.

1:06:40

Yeah, that, I could see that being something where you have A multiple views. And then, the last, Brad, I’m not going to get any deeper into the cardinality. I don’t want to make this a teaser too much, but, you know, the intent was to really say, hey, the, there’s more than just cardinality that we have to consider.

1:06:59

But, one too many versus, you know, one to, one, we talked about inner versus outer join, right? So that’s part of what the cardinality defines.

1:07:12

But the Singularity versus the Minas, notice it doesn’t let me do a many too many join BI tools do not like many to many relationships. This has to do with, again, how the measures get aggregated.

1:07:29

And it really has an important impact on how the query engine plans that that SQL query that it’s going to generate for us based off of the cardinality neurological design. So I’ll leave it at that. If you have any last-minute questions, feel free to drop them into the questions panel.

1:07:47

We’ll leave the session open for a couple more minutes, appreciate everyone’s attention, and hopefully we’ll see you at another event. So if you have any last-minute questions, drop them in.

1:08:00

We’ll make sure we get them saved, and if there’s anything that we didn’t address, we’ll send out a follow-up to everybody that registered to see what, you know, so you everyone will have some insight into questions. So if we don’t have time, we’re out of time, right now, drop them into the Questions panel, and we will do our best to follow up with them. OK? Again, thanks, everybody. Thanks, Todd, for help in the queue up the questions there, even though they all came in to last-minute, appreciate everyone’s sticking around a few minutes late here. And hopefully, we’ll see you at a future session.

1:08:38

Have a great rest of your day.