Organizations have many disparate data sources that can yield up meaningful insights when using visualization tools like Power BI. But first, that data needs to be combined and organized into a format that matches the decision-making needs of the business. And to achieve the scalability required of modern BI and a truly data-driven culture, the data needs to be delivered automatically, reliably and in a timely fashion.

The good news is that the Microsoft BI platform offers a broad array of technologies for data integration and preparation. The challenge, however, is determining which one of them is the right tool for the job at hand.

The answer depends. Multiple considerations come into play including Microsoft’s product roadmap, your organization’s existing analytics platform(s) and cloud strategy, the source data being integrated, performance, scope and scalability, security, data refresh frequency and more.

In this on-demand webinar, we explore five data integration options for Power BI. Learn about the important features, similarities and differences, pros and cons, pricing and use scenarios of

- Microsoft SQL Server Integration Services (SSIS)

- Azure Data Factory

- Dataflows

- Azure Stream Analytics

- Power Query

In addition, see demos of

- Mapping data flows in Azure Data Factory

- Real-time Power BI dashboards using Azure Stream Analytics

Presenter

Andy Kinnier

BI Solution Architect

Senturus, Inc.

A certified Microsoft business intelligence architect and developer with over 20 years of experience in software development, Andy has made regular appearances at the Power Platform World Tour events over the years. He also serves as assistant organizer of the NJ/NY branch of the Power BI User Groups.

Read moreMachine transcript

Greetings, everyone, and welcome to the latest installation of the Senturus Knowledge Series.

9:19

Today, we’ll be covering the topic, five Data Integration Options for Microsoft Power BI.

9:26

Before we get into the content, I have a few housekeeping items to cover, and if you could please advance your slide, Please feel free to go to the webinar control panel to make this session interactive, while we have the microphones muted out of courtesy to our speakers. We encourage you to enter questions in the GoToWebinar control panel, and we will try to answer questions as we are able to at the end of the webinar, so be sure you stick around for that. To the extent we’re unable to do that, owing to time constraints or whatever reason, we will cover it via written response document that we’ll post on

10:02

Senturus.com, which always brings us to the next slide.

10:06

People always ask us, can I get a copy of the presentation? And the answer is an unqualified absolutely. There’s already a link in the chat, or it’s available on senturus.com if you want to just head over there via the link you can see on the screen.

10:21

Make sure you bookmark that while you’re there, as there’s a ton of great, free content on a variety of business analytics content.

10:30

Our agenda today, we’ll go through a few quick introductions, and we’ll do a brief, high level BI analytics architecture overview, and we’ll get into the heart of the presentation, discussing data integration options for Power BI afterwards, and we’ll go through a brief Senturus, overview for those of you who may not be familiar with our organization and our expertise, some great additional free resources.

10:54

And then we’ll get to the always informative, aforementioned Q and A so, by way of introduction, I’m pleased to be joined today by my colleague, Andy, and John Petersen. John is our company’s founder and CEO, as well as a thought leader and visionary. He directs the delivery of all projects here at Senturus. Providing the bridge between technical and business understanding, we are pleased to have him here today on a cameo appearance.

11:22

And I’m also joined by Andy, who is a certified Microsoft BI architect and a developer with over 20 years of experience in software development.

11:30

He’s made regular appearances at the Power Platform World Tour events over the years. And also serves as Assistant Organizer of the New Jersey/New York branch of the Power BI Users Group. He’s a wealth of knowledge on the Microsoft stack. As you will, soon see for yourself. My name is Mike Weinhauer.

11:48

I’m a director here at Senturus, and among my many responsibilities, I’m pleased to be the host of these webinars.

11:56

So, with that, I’m going to hand the microphone over to Mister Peterson for a quick overview of BI System architecture. John, go ahead.

12:06

Oh, thanks, Mike. And thank you very much for allowing me to shoehorn myself into what will be a nice, rich presentation by Andy. I wanted to take the opportunity, first of all, thanks, all of the folks attending today, and any folks that are listening to this at a later date.

12:27

The exciting thing for me about this, Mike is a series of webinars, where we talk about the different elements of the architecture, typical architectures of BI and analytics systems. As most of the folks know, I noticed a number of very familiar names on our attendee panel. We’ve been doing webinars on all different types of topics, at all different types of levels. And one of the areas that is always a very popular one is what are the different pieces of enterprise level BI architecture, what are the architectural options to put those together? What are the tools that could be put toward them? And if anything, over the 20 years we’ve been doing this type of work. It has just become more and more complex in terms of the number of different ways and tools you can put this together. But it all really comes down to a basic flow. And that’s what’s on the screen right now.

13:33

Which is at the universal rule. If you want more data to make better decisions and take actions in your business, the data typically there is hard to get and impossible to really make sense of the massive volumes, plus there’s all sorts of issues with it.

13:53

So universally, you need to grab it and you need to do something to it in order to get it into these tools. The nice thing is that these modern, self-service tools, like Power BI, like Tableau, can allow you to actually fulfill this entire chain within the tool. However, there’s a big caveat. That’s typically, your mere mortals can usually do that at the desktop level and maybe at the department level. But by the time you actually try and roll that out to the enterprise level, you face an extraordinarily large number of additional issues. And, those issues come down to a basic set of things such as performance.

14:36

large data volumes and data validity. How can I trust that? What’s a single source of truth if everybody has their own data model? What, how do I apply security to this so I it’s not getting in the wrong hands? How do I automate a process? So when Joe is out on vacation, the dashboards refresh the next day? How do I create reliable and robust processes in this in this chain of events maintainability?

15:09

What if people leave? How do I actually scale it to add new additional information? Now, things like source control, monitoring, and you end up quickly getting back to some of the enterprise level systems. Like the next slide, Mike, where we’ve been doing work for 20 years. So if you could advance the slide.

15:30

I’m sorry, this is a little bit busy, but it captures everything, this presentation is available for download. I’m not going to talk to the gory details. But, the essence is, you’ve got a bunch of source systems. The more data you can pull in, the richer your dataset is for making decisions.

15:48

You typically use some form of tool to integrate that data into usually some form of repository. Modern systems allow you to do direct connections, and Andy is going to speak to an aspect of the Power BI platform that allows you to do that. But more typically, you’re staging the data in intermediate repositories for a number of reasons that we talk about in other webinars. And then you apply tools on top of it to make sense of it and deliver it to the various usage points. So that’s the broader piece. What we’re going to want to, what we’re going to focus on today are the data integration tools and if we go to the next slide.

16:31

We’re going to break it down to basically connecting to various types of data, preparing that data, combining that data. We have other webinars that we’ve delivered. In fact Mike delivered one on data modeling with Power BI. Relatively recently we just delivered a Snowflake webinar. We’ll visit last week talking about how you how you might options for storing the data, and we’re going to continue to enrich the Senturus offerings for both webinars training and delivery services to include the entire chain of events required here. And so I wanted to just set the stage for where this sits, the last thing I’ll say is that Andy will show you a series of tools, each one is aimed at solving a different part of this problem.

17:20

And the key is to understand what you’re trying to do, what are the available options, how they might fit together. We are going to focus solely on the Microsoft platform in this webinar, there are a myriad of other options from other vendors, and oftentimes, we work in hybrid environments.

17:38

But today’s discussion will be specific to the Microsoft platform, which is gaining significant traction. The benefits are, they’ve got a lot of different options that challenges is determining which one to use, and hopefully by the end of today’s webinar, and it will give you some tips on which might be the most appropriate tool or set of tools for your, for your job. So, that’s, that’s the overall context. Thanks, Mike, for letting me to plugin here. Thanks it’s always good to hear you on these things. As usual, we try to get a finger on the pulse of our audience here. So, we have a poll for you that I’m going to launch right now. The first one is, what is your role in using Power BI?

18:21

Are you an ELT, ETL developer, a power user, a consumer, or a manager/executive? So go ahead and get your votes in there.

18:36

See how quick people are on their toes today. Like John said, this is a pretty meaty webinar. So if you haven’t eaten lunch, you’ll probably be full after the hour.

18:44

And we got about two thirds of the votes in here.

18:49

We’re still getting their votes in. Go ahead and make your choices.

18:53

It’s like we got everybody.

18:54

Most everybody, and the results are in about 30 you are ELT developers little more power users.

19:03

Only a small portion are consumers. And the rest of all are managers or executives. So, yeah, that looks like about the kind of the pyramid there.

19:12

Great. Thank you for that information.

19:14

And then the second poll that we have is, how are you currently ingesting data into Power BI? If you’re using it or are you pulling it from an enterprise data warehouse?

19:24

Or, I guess, that would include if you have an ODS, or anything akin to a data warehouse data mart. Are you leveraging Power Query and pulling in disparate data sources or are not are you using analysis services cubes?

19:40

Are you pulling it from the cloud, like a data lake data bricks Snowflake, something like that streaming data or do you have another way of doing that?

19:50

So go ahead and get those votes in.

19:55

Talley these things up here.

20:02

Certain a slowdown in about two thirds, OK, I’m going to close it. Share the results. So, yeah, most of you all are close to two thirds, pulling it from a data warehouse of some sort. And then, kind of the next bigger one is, is Power Query. So not super surprising there. I thought we’d see more analysis services in there.

20:20

And cloud looks like a little piece and then the rest is other.

20:23

So, that’s interesting, I thought there’d be more of a uniform spread across those. Great. Well, thank you for providing your insights there and making this session interactive. So, with that, we’re going to jump into the heart of the, of the presentation. I’m going to hand the floor over to Andy, who’s going to go through this topic. Andy floor is yours.

20:52

All right. There you go. Thanks. Thanks, Michael, appreciate it, and let’s get right to it. Ingesting data into Power BI, what are the choices? And first choice is, obviously, when you bring data into Power BI Desktop. I’m going to start with Power Query. And that’s the personal Power BI right away. And then we’re going to move on up into the more complex and more enterprise level, as we go. I will explain acronyms that you’ll see often terms of bringing data into anything. And we’re going to talk about ETL an L T, which is going to be transforming data before it even gets into Power BI. So this ETL is Extract Transform, and Load, So this transformations in between the extract and loading portion.

21:35

And then just kind of switching around to extract, load and transform, which means take the data, move it into an environment, and then do your transformations on that. But similar in what it accomplishes, you’re moving data. You’re transforming data. You’re preparing and combining data, and getting ready to pull it into Power BI.

21:55

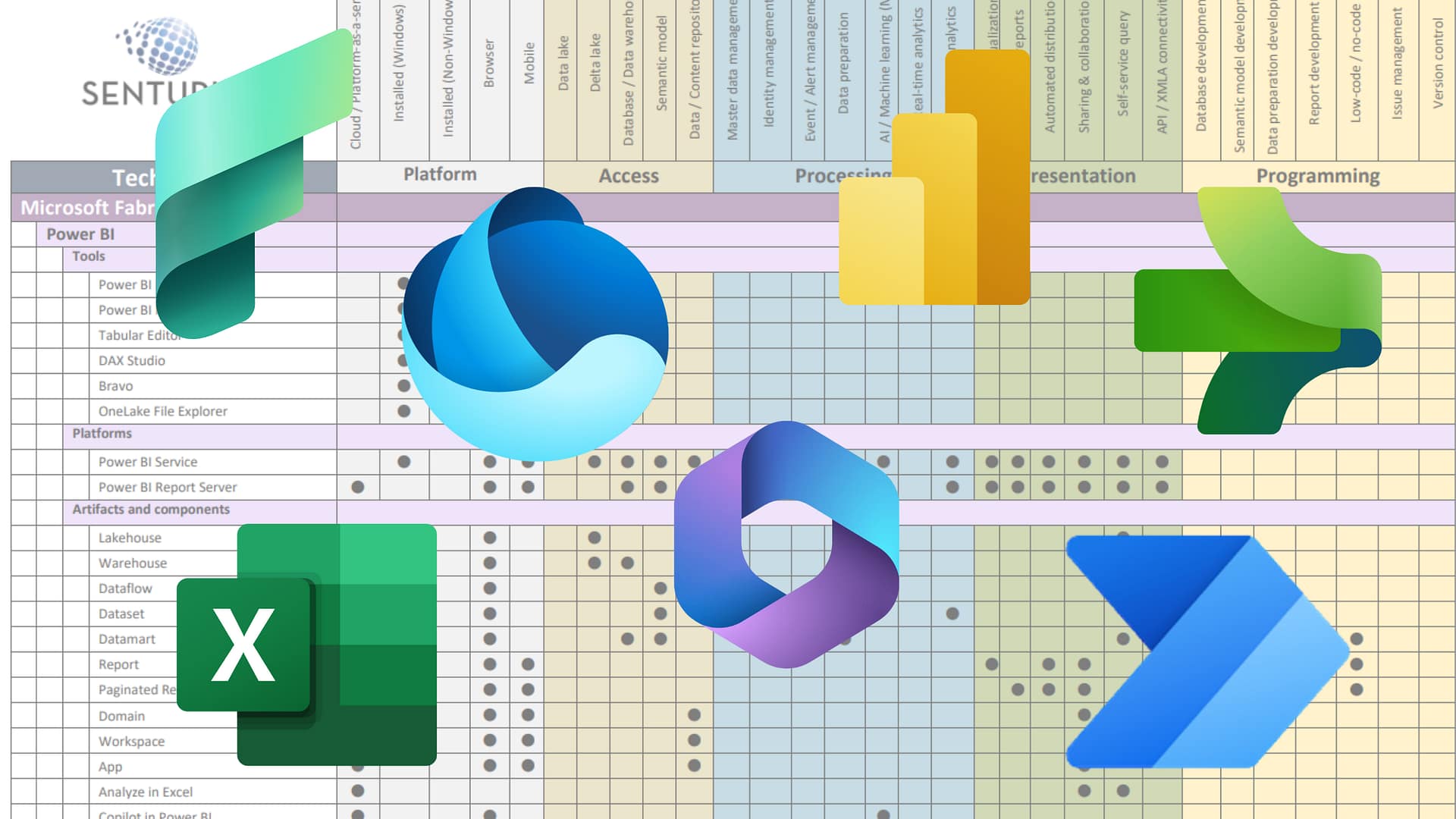

We’ve talked about the Microsoft platform today, but what is the right tool for the job?

22:00

There’s a lot of different things to weigh when you’re choosing the right way to, you know, creating an ETL or ELT platform, what’s, what’s right for budget? What’s right for the people that you have working for your organization? So, the complexity that’s involved, what’s the scalability? Well, what’s my data look like this year? What’s it look like two years from now?

22:21

And at the end, we do have a decision matrix that that way, some of these different options for each one of the tools.

22:29

All right, and this.

22:32

This presentation is going to be very technical.

22:37

We’re going to show a bit of each of these technologies, and we’ll start with Power Query as you bring data into Power BI directly, Power BI Data Flows, which is a one-off from Power Query and in desktop, it’s Power Query in the Cloud.

22:55

And it allows you to save data. Integration services are better known as SSIS.

23:02

Calling it a legacy tool at this point came out 2005.

23:05

It has comes with SQL Server and as a pretty hefty ETL tool.

23:10

Built-in free into SQL Server and there’s a lot of work that’s been done out there in SSIS, what’s its future, and how can it be brought into the, the newer platforms? Let’s talk about Stream Analytics. This is going to be a different form of working with data.

23:27

It’s working with streaming data, two options of bringing data into Power BI directly, so you can see it happening at the time. And then also, bringing it into your data lake. So one stream that splits and populates both of those, and then Azure Data Factory. I wouldn’t call it new anymore, but it’s the platform going forward. It’s Cloud Platform and we’re going to demo a fairly new technology called Mapping Data Flows, which is GUI based tool. That is sort of replacing SSIS.

24:02

All right, so let’s get into Power Query. And this is your personal BI. You’ve got Power BI Desktop. It’s free download for Microsoft. You open it up, and you want to bring data in, and that’s what you’re using right now. It’s Power Query Editor. So you bring data into Power BI Desktop. And then, from there, you bring it into the model. All right, so there’s many built-in connectors that Microsoft has put in there. We’re going to touch upon that. Shows some transformations and then merge tables. You can create new columns.

24:33

It doesn’t persist the data. This is the first important piece that I think we should talk about with Power Query Editor.

24:39

It brings data into Power BI. But then it goes straight into the model. It doesn’t save that data at the end of its transformations. It just moves it into the model.

24:50

And from there, you can save the model which has other things involved with it. DACs expressions, which are real-time querying, that goes on when you’re using the reports and dashboards.

25:03

So it doesn’t actually save the data after you get done with it, in Power BI itself. And that’s an important piece that we’ll talk about in the next tool after this.

25:14

Does use the language, it is a sequential data transformation language, was, uh, invented for this specific purpose of this tool.

25:25

It brings it each step. You create that transformation, it, it does it sequentially so that you’ll have a way to go back and change it and undo it and see how it’s working within the editor. So let’s get right to Power Query.

25:42

We’ll start to talk. We have

25:45

database project here. So for Power BI desktop, we created reports dashboards on the Johns Hopkins coronavirus data. And we’re using that to show what we did with query editor. And right now, I have the get data. If you click on Get Data, this is what opens up, and it gives you an idea of all the different connections connectors that are available to the Power Query database, Oracle, IBM, post gray, all the popular databases here, and the list goes down.

26:21

You can see a lot of different connectors that can bring in different data.

26:28

You have the Power Platform, bringing in Power BI Datasets, Datasets, published up the Power BI service, you can have data flows, which is our next technology. After that show that the Azure platform itself, so on Azure SQL database, Azure Analysis Services in here.

26:46

I just Synopsys there data warehouse appliance and many other Forum’s HD insight, Blob Storage and Data Lake Storage, all available as connectors to bring data into Power BI.

27:07

Now, when you go to, when you’re in Power BI Desktop, we want to go to Power Query Power Query Editor. Then you click on that Transform Data button here, and we’ve got it already.

27:22

And it brings you into this tool. So, this is a desktop tool. It’s on your desktop right now. It’s part of Power BI Desktop. And it just opens up a separate window.

27:32

And you can bring in new data here with the window that I just showed you, with new sources. And inside this environment, you can transform data, you can bring data in, and then start doing stuff with it. So, I’m going to show one of the tables here, fact country group.

27:46

We had as source is a CSV file that came in. And, you can see with the CSV file, you’ve got the headers in the first row.

27:56

This next step here promotes the headers, all of which gets done for you automatically when you bring in a CSV file.

28:02

So, the first few steps are handled by the by the application itself. And then I can do certain things as I go along. You can add custom columns and so, on this particular one.

28:17

If I double click this now you can see what the gooey part of this is about, right?

28:23

So I wanted to create a geography key, which would be county, state, and country, which would be unique for a specific date in the Johns Hopkins data. So, it’s a simple piece here that puts these together.

28:40

Other parts to this, you can look at every time you do something, if you add a custom column.

28:47

And then at the end, you can see here we grouped the rows, so.

28:52

Alright, so this here is just cleansing in particular field. So, one of the fields to distinguish six New York, as opposed to Essex, New Jersey.

29:02

A few things that you could do in here, and then grouping rose at the end, make sure that we are unique on the geography Quay, and date, which the Johns Hopkins data is.

29:15

Once that, once this happens, it’s in the background, it’s creating script in the M Language, so if I go to Advanced Editor brings up, and this is what it looks like behind the scenes.

29:29

So, if you look at the geography key, that’s this one right here, so if I look, it’s got its own expression like language that creates that.

29:39

Table out column is replaced value function, and then, in each of these is the parameters inside the function.

29:48

But for the most part, you don’t need to know EME language.

29:51

You can use the GUI editor to do a lot of these transformations, and, but there are some people have really decided to really learn M to the best of their ability, so that they can go above what the editor can do with the data.

30:08

Then, when you’re ready, you hit Close and Apply, and that brings it back, brings the data back into the dashboards back in here.

30:17

That’s, that’s essentially the ideas of personal BI, your subject matter expert, power, user and accompany.

30:27

You are bringing data in, and you’re creating your own reports, you can publish them up to your Power BI service within the company, share it with other people, but for the most part, you are a single person doing a single job in bringing all that data in.

30:46

Yeah.

30:47

OK, so, next up is Power Query is, is going to segue right into Power BI, Dataflow. So one of the things about A.

30:59

A subject matter expert, who gets very good at Power Query Editor.

31:03

The organizations, we’re seeing that a lot of their own people are very good at creating these models in Power BI and using Power Query editor to do a lot of transformations, Combining conforming data, and then bringing it into these models and dashboards. But it’s not re-usable. Power Query Editor doesn’t allow it to persist data in a particular form. So, Microsoft wanted to utilize that.

31:33

Microsoft wanted to give away for companies to utilize their expertise within it. So, a lot of different power use. It’s not really that the ETL developer, someone who’s gone to school for database knows that different platforms. And then those, a lot of the high-end ETL tools.

31:49

These are people that are hired to do analysis within the company, and got very good at Power BI, because that was part of their job. So how do you leverage this expertise with the rest of your company? And in Power BI Service, it allows you to now create Power Query excuse me, Power BI data flows. And it uses the same interface that Power Query, it uses Power Query as the interface to do so.

32:19

So if we look at this next slide here, the storage in the cloud that you get with the Power BI service, you can create these dataflow. She isn’t Power Query Editor, and store these entities, Nikolai …, entities, but their tables, that table is stored in the cloud, and they’re stored, combined into what’s called a Dataflow.

32:42

And then, if someone wanted to use your, your work, they could create a Dataflow that brings in, say, 3 or 4 entities from, from your Dataflow. And then, they can add another 3 or 4 entities to build their own dataflow combined with your work.

32:57

So it allows a platform of utilizing the good work people have done from a personal perspective, but now bringing it a step further. This is what you would call almost a poor man’s ETL or I wouldn’t call it poor man. But there are a couple of use cases for doing so here.

33:16

And just to note, take this one step further once the data flows are created can then be ingested into Power BI much. In the same way, it would is there for a database table.

33:28

Hmm.

33:30

So, its Power BI data flows.

33:31

One piece I do want to just touch upon here is the use cases for having’ a system like Power BI Dataflow is and why would you want to go that direction?

33:42

You could have your whole ETL and your whole reports and dashboards all in one environment, power BI premium, or Power BI service. And within that environment, you can do all your transformations, all your entity creation, and have that ready to be ingested into Power BI. And then, you have the reports developed, and you don’t need to hire out full department worth of developers. And the use case, usually with this, is for smaller companies that don’t have that type of budget yet, at this point.

34:16

And also, for large companies that have certain departments within the organization that want to be able to handle their own analytics without having to go to the national level, or the corporate level. They want to be able to have a little more flexibility to do some, some data work. Before.

34:34

it gets to the point of, know, if you’ve been there before, you put taking in, And three weeks later, it goes by, and they finally get to it and then it’s in test and QA. And six weeks later, I finally get my field. That was, was fairly easy to add. Now, you have a much more agile approach, just saying, I need to add a field to this Dataflow, and I can go in and just do that. And then, than it needs to be some form of governance behind that and mild good touch upon that, as well. So, let’s demo, Power BI Data flows. All right, I’ll go into.

35:12

Come in, too, Service.

35:25

I’m in my Power BI Service. And.

35:31

Let’s see.

35:34

Start on. Give me a second.

35:40

OK, and we have here.

35:45

Let me go back two quick. OK, so when you get into Power BI Service, you have your workflow, workspaces, and this particular workspace. You go to the all content here, and see that you also have this option here, datasets and data flows.

35:59

And this particular one, Lunch, and Learn 100, developed for. And looking at, looking at what a Dataflow it looks like, it just looks like tables here, All right. That’s what they’re.

36:12

And we’ve brought these tables in from Adventure Works, and also from a source, an Excel spreadsheet in SharePoint. And we brought them in as one Dataflow.

36:23

If I go here to Edit, it’s going to open up the Power Query Editor in the cloud. So this is a cloud based application, or now in the cloud. It is in Power BI Service, and it’s not part of my desktop. I don’t be Desktop in order to create a Dataflow.

36:42

But it looks familiar. Right, the interface here is Power Query Editor. And it has all of the same options that you can do in Power Query Desktop.

36:55

And it does use the language, the same thing, and it should look familiar. Alright, so I have different tables here.

37:04

And then, you can see the applied steps over here.

37:06

And then we can go into the Advanced Editor, as well.

37:09

And just to show a little more of the functionality of Power Query, I’m going to click on this Facts, in fact, budget table.

37:16

When I first brought this in, you have a table that’s in a, OK, promote the headers.

37:27

Got a site, you have a table, it’s in a matrix form. You will see this a lot with budgeting, right? We’ve got this is going to be on the Adventure Works, product category. So you’ve got three product categories here for the budgeting, the year 20 13, and for, each one of these months, you’ll see across the top is, the is the month. So there’s a budget set out for each one of these cross a month. Now that it’s an, it’s a very flexible way of putting data in Sonya and Accounting And you’re saying, I’m going to create a budget.

37:56

You get into Excel, You have a matrix with the three are months across the top categories down the side, and then you put the numbers in for each bucket.

38:07

That’s all well and good, but it doesn’t help us in a dimensional model. So we have to do certain things here to try and make this a better dimensional model. And one of the things that are easy to do in Power Query is UN pivot, which is if you ever had to wrangle with this with SQL Server and create a query.

38:25

It’s always, what do I have to put in this this area here, and there was this, Corey. But for power, Power Query, it’s the simplest, as, say, a UN pivot, and base it on these rows.

38:37

And what it did was it put the, the months down here, now on each in each route and for each category. And so now, I can create a key. I need a key on the date value here. I need to create a date value, essentially. And this key here is going to be my key to my category dimension.

39:00

So again, if I move down to the bottom over here.

39:05

You can see.

39:18

So now I’ve created a day key with the conditional column. Based off the month, I don’t see it, 2013. I know it’s the first of the month, so that I can create a conditional that drives that column. And so now, I can connect to the date dimension and the product category dimension where they bring the data and go. Back to my workspace here.

39:43

Yeah, close out of query editor. And this has already been developed as part here. And then you can see the effectiveness of bringing that in when I look at.

40:24

This is the resulting report based on that. I was able to now use a date to mention. So I have the months down here and a chart.

40:32

I have both my values from the fact table, which came from the database, which is the sales amount, and then the budget amount, which came from my table.

40:40

So now if you are able to merge the data together, and then it’s done in the in the Power BI model, or you set the relationships there.

40:50

But now I can look at accessories, and you can see it’s changing both the budget numbers and the sales amounts here at the same time. So I can see that my sales for a little bit below the budgets to start, but apparently, this company did better as the year went on.

41:05

And if I go to Bikes, you can see its all different number here.

41:08

I think we’re over a million dollars are looking at bikes.

41:12

So not only is the sales amount being changed by this, uh, hmm by the slicer here.

41:20

But also the budget as well.

41:24

So it’s meaningful chart when you are able to integrate that data together.

41:29

So Power BI, Power Query Editor allows you that integration of the data.

41:36

And then now with, with Power BI, Dataflow, is it allows you to store that data into Dataflow in there.

41:44

So if I was going to go now to Power BI Desktop, and I want it to get data, I can open it up and see one of my choices here as data flows.

41:55

If I use the more here, this is going to bring up the original.

42:02

Just want to be able to show everyone how to get from the original one. Need to give that one a second.

42:10

So it has a category of Power BI platform, and you click on that. That gets you to the option of Data Flows. So Power Platform.

42:22

And then Power BI, Data Flows.

42:24

And I can connect here.

42:26

Then it’s going to bring up the data flows that I have signed in as my user.

42:30

It knows right now, it’s going up to my Power BI service, and it’s looking for any data flows that I’ve had managed.

42:43

So that’s an important step.

42:45

Andy that, which we’re not covering in this webinar, is that you pull those data flows in, and then there’s a modeling aspect to it, right? Where you’re creating the relationships, so that you can get to the, the presentation layer here at the point is just that.

42:57

You have these options via desktop and or the web to do a lot of powerful ingestion and transformation and then store it either locally, or on the web, in the case of, in Azure Data Lake, in the, in the case of data flows, and then leverage them in your visualizations.

43:13

Yes, absolutely, Michael. Good point, and that’s where this demonstration stops. We’re not going to go into creating the model at this point, but I just wanted to show that this is how it looks coming from here. It looks like tables, and when you bring it in it, so it’s very similar to bring it in from a database.

43:28

All right?

43:29

And the other point to mention here is the governance that comes with Power BI Service now. So, you can, when you were part of the problem now, with having people, subject matter experts, bringing their data into a platform that gets shared, is, you want to make sure that it is.

43:54

There is some sort of governance on it. So the datasets that get created and published will allow the organization to control that. Say, you’re in a big organization, but your department is control of the data that gets put into your power, Power BI, Premium Service. And when you have datasets, as we all do, get a dataset, and they publish it up, and I create some reports. But it’s not finished yet. I’m still modeling. I might have a certain, what Workspace set aside, but it could be up in an area. Where it gets there, was a datasets are shared, and someone else would look at it and use it.

44:29

So I want to use this technology say, well, hold on a second. It’s not ready yet. You’re still in the midst of working and testing on it.

44:36

And so, how do you have something that can at least govern this type of data? So as a developer, you can publish up your datasets, and you can set, set it to be promoted. So, if you feel really strong about it being a finished product, you can click on Promoted, and it now tells everyone in the company that it is, that you feel good about that, the developer feels good about it. And the other one is certified.

45:04

So, if your Power BI admin and you have the admin privileges, the certified datasets, and it should be coming up any second, now, that’s a really important aspect.

45:22

With the old BI tools you modeled it and then publish it. You didn’t have to think about that stuff, because it was vetted and curated.

45:33

But, exactly, it was also slow. And now, the Power BI in the Tableau’s of the world came along, and you had chaos with all these data model.

45:40

So, this is where Microsoft really moved the needle along. Tableau has done something very similar to, to introduce governance into these modes. Two type of tools by having the certified and promoted exactly. So now, if you’re in that company, or in that department, you know that if it’s certified, that somebody in your organization has said yes, we have looked at it, we have certified it.

46:04

And if you want to use that, while Andrew … made this, he’s promoted. He feels very strong about it. Certification admin hasn’t got to it yet. But you may want to use it, depending

46:15

based on that, can pull those up to the top and, and, you know, it gives you trust in the data, which is really important in terms of driving adoption and minimizing versions of the truth and making better decisions. Exactly. And that’s sort of the poor man’s ETL solution, as we have to add some stuff to it, we have to add governance. As, you can see here, I showed some of the good features, CM language, not going to get into the refresh, but you can schedule, a refresh on your data flows, and the governance that we just looked at it. That was added. So that, if you wanted to use Power BI Premium of Power BI Service, you can do everything within it, and still have a fairly controlled environment.

46:58

OK, let’s keep moving integration services, and I loved integration services, I got very good at this particular tool, and it is, it is free with a SQL Server license uses Visual Studio for the development environment.

47:13

And it’s part of that that whole toolset.

47:16

So the source control and everything involved with an ETL system comes with it.

47:22

Now looking at and you’re into the area. Now, what you are, an ETL developer, if you use SSIS no longer the person knows who’s hired to do Analytics.

47:36

And I advise on analysis, but you’re really the person in the ETL department or a database department, data warehouse department and your job is ETL developer.

47:51

And this is in the on perm Windows app. Now you can have a VM, a virtual machine, in Azure, and install SQL Server.

48:00

So, you would have a Windows App, essentially in the cloud that way, but it is, it was created for on perm purposes, and also for Data Warehouse purposes. You know, it was, at the time when Data Warehousing was in 2007.

48:18

SSIS came out about 2005, and a very good pipeline architecture.

48:25

Data into memory, while it was in memory, at the transformations, on the data. And then it loaded out, and it was a pretty good tool for what it, especially for free. Given that its competitors were Informatics, Talen, it was a pretty nice tool to include and SQL Server for free.

48:47

Let’s do a quick showcase on this more of a showcase, then.

48:52

Done a demo.

48:54

But this is integration services, to that Senturus Corona virus project that we just showed. So we do some transformations before it even gets into Power Query.

49:07

We had to do some stuff to make the data coming in was cumulative numbers. And we had to find a way to, to say, well, if it was this on this day, and it was this on our next day, what was the actual amount?

49:19

So you’re taking the difference there for each one of those county, state, and country, date, by date, and finding the differences. So I use this instead of Power Query Editor, because it had more of that in the tool set to do so, including SQL, which is used in the, in the store procedures.

49:39

This control flow allows you to do two different streams here at the same time. So these don’t have any connection in between, so when it runs, they both run at the same time.

49:50

And you could do this is executing a stored procedure, so stoked procedure. I created an SQL Server. And it also has this tool called the Dataflow so Dataflow task. I open it up. It looks like this. And you get to see data streaming through here, as it comes through.

50:07

And I haven’t, but here a data viewer. So as the data comes through this part and I wanted to see adorned overrun that gives me some insight to the data from, for some good debugging something not working out. I can take a look at a certain step in the data.

50:23

So I’m going to take this. I was just going to execute this task now.

50:27

And we’ll take a look the Dataflow.

50:37

OK, and you can see the window opens up, that’s this window right here, that’s the data viewer that I created. And it’s waiting, for me, the pipeline’s not moving at this point that allows me to see the data at this point. So if I had some derived columns which I do, then I can check to see if that. I was also doing a geography key, deriving that here.

50:57

So I let you the bug right in here. It’s a very good, robust tool for that. And if I detach it, it allows it to go on. And now, you’ll see that the numbers of the road’s gone through here.

51:16

OK, it’s taking its time, it is running on my local machine, which is working against the GoToWebinar tool.

51:27

So, I will cancel out of here, now, but you can see how the Dataflow works in that regard.

51:35

Reading, driving, and then populating a destination.

51:40

And integration services sort of being sunset. It. I don’t know if I call it that, at this point, but Microsoft is moved all its energy into the cloud based tools. This is a fairly robust tool, as it is. They do keep adding a few things to it. Especially Cloud Connectors and different things that might pop up.

52:00

But it is something that there did, not emphasizing any more in terms of tool to look for, for ETL. It’ll probably be around for some time, but their thoughts are into the Cloud.

52:15

Definitely moved from.

52:16

You’ve definitely moved up at that point from sort of your desktop power user type person, too.

52:21

Much more of a, an actual ETL person because you’re working in visual studio, you’re able to do much more complex things, but there’s a steep learning curve for that or reasonably steep learning curve.

52:31

Sure. Yep.

52:35

Now, even steeper as we get into the more the cloud and the more enterprise level ETL functions that come about. And we’re going to talk about Azure, Azure. It’s the Microsoft Platform Cloud Platform, and some of the tools that are in it in order to bring data in an Azure Stream Analytics is the streaming technology that works in Tandem with Azure Event Hub. And you can bring data into your Data Lake and directly into Power BI Streaming data as well. It is for big data processing, some of the examples you would see for this.

53:09

Police departments will stream license plate readers, so, as cars go past this license plate readers that are taking pictures, and then they’re sending that information right In social media, which we’re going to see a demo next.

53:25

And other things that manufacturing in particular now has really become machines that do manufacturing, worked for a gold mining company and had these big machines, 300 feet under the surface.

53:40

They were streaming up data on how efficiently they are running. And, the temperatures within the machines that, if they felt one of the temperature was rising, it could cause a part to break or whatever. And it was a 24/7 operation. So they needed to know.

53:56

The maintenance portion of it. They needed to know that their operators will be inefficient.

54:00

Because each time those machines went down, but didn’t perform well, then they lost money, so they were streaming this data up into a Power BI dashboard, and watching it real-time, as it happened. So it is real-time data analysis, but there is the cold path to be considered, as well, in this.

54:23

This graphic here will show what we’re going to do.

54:26

So we’re streaming data into a piece called the Event Hub here, which is really just an entryway into the cloud, it’s going to use it. Go into a tool called Aisha streaming analytics, and it’s going to have two different paths that one stream is going to come in

54:40

one, is going to go to Azure Data, Lake Storage, and the other is going to go directly into Power BI and Stream Live. So, the data that goes into the data lake, can then be processed by big data tools. In this particular graphic, we have Azure Data Lake Analytics, which is parallel processing engine that takes large amounts of data, and then can, can do analytics over the large amount of files that are associated with it. So if we look at here, going into.

55:21

Yeah.

55:24

OK, this is a data lake that we have set up, so Azure Data Lake, we’re now in the Cloud.

55:31

And as we come into this, it’s a folder structure, a lake, and it gets, it’s done, so that it puts data in.

55:41

Or it handled streaming data in such a way that started streaming last night.

55:47

So just one day in the 11th month, the 19th.

55:51

But, I can go back here, and let’s, let’s pick, and all the year, show you what I’m trying to talk about.

55:58

2018, third must 2018, let’s say, the eighth day.

56:03

You can see that we were streaming data in here, throughout.

56:07

So, every hour a file gets created from the streaming data.

56:11

And then it’s just stored in your Azure data lake.

56:14

But now, how are you going to look at this data, How are you going to do transformations on?

56:19

And if I wanted to look at all of 2018, 10 different folders, and it would be tough. But something like integration, surface sister to take all that information from, from various folders, in order to get down to the level or the chat. And so, these tools that were developed to handle that.

56:38

Sure, to today, but Azure Data, Lake Analytics as one data bricks, HD Insights, Spark. These are all tools that can handle files in that type of structure, handle them all at once, and do a single query against all of them. So, they’ve built for that type of, of analytics.

57:01

The other piece.

57:04

Find my way back.

57:14

OK, so the other piece has gone into Power BI. Let’s take a look how, how to set this up.

57:26

So there’s an event hub that gets set up. I don’t want to go into that too much. It’s really just configuration.

57:31

We have a C-sharp console project, which takes in the key is it takes in my Twitter. We’re going to show Twitter data. So I need my Twitter account.

57:43

I had to become a Twitter developer on their site, and they gave me a bunch of keys that I put into the configure file here.

57:50

And then I also had to put in the keys from the event hub into the, into this, into this program. And then, when it started, it, started streaming data.

58:01

And right here, it basically writes to the console every time, many different tweets are happening here. So, every time, we see another road, get put in 44. 646, and hopefully, there, we go 44, 660, 115 messages came in in that 22nd street. So, every time, it’s pumping upstream data. Now, we need to connect that to the

58:27

configure file, which goes to the event hub, and the event hub gets configured in Azure Stream Analytics.

58:35

And this is what Azure Stream Analytics looks like, again, or in the Cloud, to Cloud application, and it tells you, I’d ask for you to set up what you, your inputs are.

58:47

Our inputs are coming from the, from the event hub.

58:54

Back, you have to define the outputs in here. I define one for the data lake and one for the Power BI stream. So it goes directly into Power BI.

59:03

You have a query mechanism, and this looks like SQL. Is it SQL? Well, it has a couple of other functions added to it.

59:11

But I have two different statements here that take the streaming data, you can see I’m looking at.

59:22

Cosmetic companies, cosmetic brands. That’s what I put into the App configure file for the c-sharp program. So, it’s grabbing all tweets that have these keywords in it.

59:33

And I’m taking these key words from the text.

59:37

And if it’s fitting includes Dior, then it puts Dior, and that’s the brands, are just a case statement. Here, that creates a field called Brand.

59:47

And it has a number of different cosmetic companies here. And then, one is the tweak cap, which will summarize in Power BI.

59:57

We added a time-stamp here, going into the data lake. So it would have a time-stamp and the data.

1:00:02

Now, if we go back to data Explorer, and I’ll go back up to 2020.

1:00:12

This is the data that’s streaming now.

1:00:15

You can see that that query is going to create a file like this.

1:00:19

It’s a CSV file.

1:00:21

It is.

1:00:24

It brings, and these are the tweets, you can see, they’re going to have D, or something involved with Chanel that’s involved with the keywords that were, what grab data, that program. And it gets stored in your data lake in this format.

1:00:42

Mindful at the time, we have about nine minutes left to cover the rest of the material, so you might want to move along.

1:00:48

Good point. So, let’s go straight into the one with Power BI, then, at this point. So I have.

1:01:00

Yeah.

1:01:05

I’m back, here, launched, it, gets to Power BI.

1:01:10

It created this Twitter DS dataset and from that dataset, you can create a report, which I created a report, because it’s looking at that data and then you have to pin it up to a dashboard.

1:01:22

So, if we’re looking at the dashboard in Power BI.

1:01:27

This dashboard is now live, and it’s going as, as the data goes up. So, for looking here, now, you see, we just saw that the, the amount here is for 43,000, and it started after this program started, so it’s not quite in sync with it.

1:01:42

But you’ll see as this does its next up, tick here. The differences will be exact. So 741, it was eight added to 131, which gave us 139.

1:01:54

It is.

1:01:55

So, as you saw there, it updated that console. And a split second later. It updated the chart itself and each time that goes up, this, this chart here is, is also moving with it. So, when the data changes TO said, 983 now, let’s take a look.

1:02:13

Now, they’re at 9 89, so you can see that the chart is updating, as well.

1:02:17

That’s your real-time streaming analytics, as also, it’s populating the data lake to do that, OK. I’ve got one less technology to go.

1:02:27

And this is Azure Data Factory and Azure Data Factory is, is the Premier technology for, for Microsoft at this point.

1:02:38

Bring data in, do your transformations, and then prepare it for ingestion into Power BI and other types of data analytics tools as well.

1:02:49

So as a browser based Azure application, all right. So we’re talking Cloud, just like data flow’s Cloud application.

1:02:57

And it’s for ETL and LT.

1:02:59

Cost is use Space It, so the more data you pulled through, the more you’re going to pay. So, it allows you to scale without having to add service analytics, ritually this a lift and shift technology. Which means moving large data files into the cloud.

1:03:15

Version two has recently added a number of GUI components to it. So, you can run an SSIS package now as part of Azure Data Factory Pipeline. The data flows this Dataflow is a different technology that what we saw before with Power BI Service. But it has, now, tool called Dataflow that mimics SSIS. So its GUI based data flows. And even wrangling data flows to mention this ranking. Dataflow is just now that Power Query Editor, now in Azure Data Factory.

1:03:43

So those that know, Power Query Editor can use it in to create Dataflow in a pipeline.

1:03:52

So it’s continuously evolving and it is enterprise level, so it’s got all the bells and whistles. DevOps, source control. Moving stuff from test, kea, to prod, alerting, scheduling, all of that involved with Azure Data Factory.

1:04:11

I can manage multiple technologies, just mentioned here.

1:04:14

You can have an SSIS package of your re utilize in something that, you know works well and it’s very efficient. You can put that into the pipeline, and then use its other Copy Activities, or Execute SQL. Stored procedures, things like that all cobbled together into an Azure Data Pipeline.

1:04:35

OK, so let me go and just demo this font quickly.

1:04:40

That’s in the other window.

1:04:43

So in Azure Data Factory itself, you’ll see here that we have the development environment and the development environment now.

1:04:51

And these are my pipelines, I can add a pipeline here and create, create a new pipeline to have this pipeline created.

1:05:01

And from here, you can add different things to it. So right now I have this folder open but a number of things that you can add to it. In the general folder, you’ll have your SSIS package, stored procedures.

1:05:17

All the different things that you can add to it. In a data lake analytics, that we just discussed, The SQL programs that get created, and data Lake analytics can be done here, data bricks Azure functions.

1:05:31

A lot of people come into here with the copy data. So that was the lift and shift. Part of it is to copy data. And then you can also add a Dataflow and a Dataflow here. If I go to Create Dataflow and allows me to choose either a Dataflow, which is sort of a replacement for SSIS in the cloud, whether strangling dataflow, which is still in Preview and it’s the Power Query Editor.

1:05:54

What I’ve done here is created a Dataflow, just to give you an idea what that looks like.

1:06:01

All right, the data comes in. here.

1:06:03

We, what we did, is we were looking at the, uh, the natural hierarchy and the adventure works, products, data warehouse, so for product they have category, And then subcategory, and then product, and that’s a natural hierarchy.

1:06:19

So instead of having three tables in my data warehouse, I want to have one, which is the product, and I call it in Senturus deem products new.

1:06:36

That allows you to well, let me just show you how this this gets set. You can add in a source here, which is the product category table and now as product subcategory, that’s also a source.

1:06:48

But now, I want to use product, subcategory to look up the, uh, and product category.

1:06:55

So subcategory has the product category key as inequality here, based on that. And once you have that set up, then you now have a data stream.

1:07:06

That includes this lookup cat here. Now, this data stream includes both the sub cat and the category. So it’s, it’s like a merchant, in this case, a left join lookup.

1:07:19

And then, this particular, with product now, I’m going to have this lookup merged dataset here.

1:07:28

With this particular lookup, we have sort of the same thing. But now, we have the subcategory key and the product dimension, and it’s going to match to this sub product category key in the lookup cats column.

1:07:41

Alright, so now we have that and what we’ve added is, essentially, we’re adding two fields to a table and, and down here, OK, that’s a mapping, takes place.

1:07:58

I have added these two fields to my output table, subcategory, and category, and you can see a mapping the English product, subcategory Nance, subcategory, an English product category name to category.

1:08:12

OK, and, with that, I’ll, running out of time, at this point. I think we should jump back to the slides here and get to the summary?

1:08:19

Sure, OK, so in summary, though, these tools all share the same fundamentals, right?

1:08:24

They’re looking at your transforming data to the power of AI can, can produce useful analytics. So before you even gets the Power BI, with the exception of Power Query Editor in desktop, you’re doing. You’re loading data. You’re transforming data. You’re preparing it. So the Power BI can use it. You’ve seen that the number of different forms. We do have a decision matrix here that be part of the, if you go to the site.

1:08:50

When the recording is, you’ll see this Decision Matrix.

1:08:53

It looks at the tools here across the top, a number of different options that should look at when you’re deciding to choose a tool to ingest into Power BI. And it is a continuously evolving stack of technologies, is Power Query Editor is now being added to Azure Data Factory, it’s still in preview. Just shut those to show how they’re constantly adding functionality and features as they see the industry going.

1:09:21

And, so, it’s, it’s a type of thing that will be around for a while, you’ll be able to scale with it.

1:09:27

And, you’ll be able to use it with your Power BI stack of technologies.

1:09:35

And that’s excellent. Thank you. Yeah, that’s, it’s complex and ever evolving. So, that matrix that we’re pointing into, we’re going to probably enhance that some more.

1:09:42

It’s really just meant to be, kind of a, a basic guide for giving you an overview.

1:09:48

But, as you can see, it’s complex and so, we’d love to have that conversation with you around how to, how to choose the best technology and the right tool for the job, if you will.

1:09:58

Now, if you stick with us for just few minutes, please, put any questions that you have in the question pane.

1:10:02

And as I said in the beginning, we’ll post those via response document on the, along with the recording, since we’re at the top of the hour here already. Or will, we’re more than happy to if you want to ping us, have a conversation with you offline? If you need help in your Power BI environment, we do offer Power BI assistance in many different ways, whether it’s training. We have a great getting in transforming data with Power BI that dives into Power Query.

1:10:27

We’ve got several offerings there from desktop to Enterprise, which, where there’s a, to help you get on the path to efficient, accurate, sharable dashboard reporting or a bucket of hours.

1:10:38

So, if you need help, and you’re not quite sure how that might play out, of course, we can give you this support, however, you need it at whatever point in your BI journey, you are at.

1:10:48

If, you want to go to the next slide, please, just quickly about us, and if you could advance to the next slide, Senturus, as we know, sorry, the one prior to that.

1:10:59

There you go. We are, the authority in business intelligence.

1:11:03

We’ve been focused solely on VI, bringing expertise across the entire BI stack, so, that diagram that John was talking about at the beginning from the backend, all the way to the front end and across multiple different BI platforms. So, we focused on Microsoft today but that also incorporates things like Cognos or Tableau and other expertise. We will bring pragmatic experience to help you determine, design and deliver the right architectural options and the right tool for the job based on your analytics needs. No matter how complex or diverse your environment. It say you’re running multiples of these tools on the next slide.

1:11:39

Our clients know us for providing clarity from the chaos of complex business requirements, disparate data sources, constantly moving targets, changing regulatory environments, And I made a name for ourselves because of our strengths, bridging the gap between IT and business users.

1:11:53

We deliver solutions that give you access to reliable analysis, ready data across your organization, enabling you to quickly and easily get answers, at the point of impact, in the form of the decisions you make, and the actions you take.

1:12:05

As sort of mentioned earlier, in the next slide, Andy, we offer a full spectrum of BI services.

1:12:12

Our consultants are leading experts in the field of analytics with years of real-world pragmatic experience, and experience advancing, the state-of-the-art.

1:12:21

We’re so confident in our team, and our methodology that we back our projects with an industry, unique 100% money back guarantee.

1:12:28

And we’ve been doing this for a while. And if you go to the next slide, Andy, we’ve been focused exclusively on BI for 20 plus years at this point, or 1500 clients, ranging from the Fortune 500 down to the mid-market, as you will likely recognize a lot of our logos on, our next slide there. And many functional areas, lines of business, including the Office of Finance, Sales, and Marketing, Manufacturing, Operations, HR, and IT.

1:12:51

What’s great about Senturus is that our team is both large enough to meet all of your business analytics needs, yet, small enough to provide personalized attention.

1:12:59

We invite you to, if you go to the next slide, there, go to

1:13:04

senturus.com, to our resources page, and that’s again, where you’ll find all of our webinars are upcoming events and easily consumable blogs.

1:13:15

Then, I’d be remiss if you go to the next slide.

1:13:17

If we didn’t talk about our complete BI training offerings across Cognos, Power BI and Tableau, we offer multiple different modes ranging from instructor led online courses to tailor group sessions mentoring and self-paced e-learning.

1:13:29

And we can combine those and customize those in a way that will best benefit your organization.

1:13:37

Last slide here, Great additional resources from Senturus. We’ve been committed to sharing our knowledge and expertise for well over a decade.

1:13:46

And with that, we would normally get to the Q and A Again, if you have questions, we do have some in the question pane. We will respond to those and post those online, along with the deck, which is already up there, and a recording, which we should have it up before too long. And with that, we’ll go to the last slide here, Andy, and, of course, first, I want to thank you, Andy, for, of a very meaty presentation, a lot, a lot of high wire demos there. You did. Thanks for a great job. And I want to thank all of you for taking time out of your day to join us on this latest installment of the Senturus Knowledge Series. Sorry, we ran a little bit late today, but hopefully you found the information valuable and helpful, and again, if we can help you with Power BI or anything, business analytics related, help you reach out to us, you can find us on our website.

1:14:31

If you still actually use your phone for making phone calls, we have an an 800 number, and you can always reach us at info@senturus.com. Thank you very much, and enjoy the rest of your day.