More than ever, companies are using Tableau to push beyond the limits of business analytics and into the realm of advanced analytics. Advanced analytics can typically find insights more quickly and, when used in the right manner, they can also reveal the “why” behind past performance or behavior.

Tableau continues to beef up its capabilities for advanced analytics, providing built-in functions as well as integrations with third-party data science packages. In this blog, I review the various methods of performing advanced analytics (Tableau’s built-in functions, Tableau’s integrations with R and Python, stand-alone analytics programming languages and enterprise software platforms) and their pros and cons.

Defining advanced analytics

Generally speaking, advanced analytics is the autonomous or semi-autonomous examination of data or content using sophisticated techniques or tools. Autonomous and semi-autonomous refer to how much the tools are “learning” without any guidance or preconceived ideas about what the answers should be.

Advanced analytics allow users to discover even deeper insights, make predictions, generate recommendations or find associations and patterns that reside within the data. The analyses can sometimes be as simple as a statistical forecasting method but are more often algorithms designed to solve a particular problem.

Tool choices for performing advanced analytics in Tableau

Tableau built-in functions. Tableau includes some built-in functions for performing advanced analytics. Found on the Analytics pane, these are simple, lightweight techniques that allow users to run trend lines, create forecasts and use a clustering algorithm to identify distinct groups within the data.
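To give a feel for the kind of calculation behind a trend line, here is a minimal sketch of an ordinary least-squares linear fit in plain Python. It is not Tableau's implementation, and the monthly sales figures are hypothetical values made up for illustration.

```python
from statistics import mean


def linear_trend(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical monthly sales figures, purely for illustration.
months = [1, 2, 3, 4, 5]
sales = [10.0, 12.0, 14.0, 16.0, 18.0]

slope, intercept = linear_trend(months, sales)

# Naive one-step extrapolation along the fitted line.
next_month = slope * 6 + intercept
```

In Tableau the same idea is a drag-and-drop action, which is exactly why the built-in functions rate so well on ease of use.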

Tableau integration. For more robust advanced analytics, Tableau provides the ability to integrate with R and Python, popular open-source environments. Using this integration, users can create a calculated field that passes values out to the R or Python engine and returns the output of that analysis to Tableau.

To receive and process these calls from Tableau, R and Python both need a go-between server. R uses the Rserve application and Python uses TabPy. These can run on your local machine or on an actual server for multiple users and on various operating system platforms. See our blog Installing & Configuring R for Use with Tableau if you’d like to try it yourself.

It should be noted that Rserve running on Windows can only handle one user connection at a time, and Tableau can be set to use only one external service connection at a time. So, while it’s not difficult to change the setting, it’s easiest to focus on one or the other platform.

Stand-alone programming platforms. Both Python and R can be used as stand-alone platforms independent of Tableau. When used alone, data analysis is done directly in the tools themselves. The results are output to data files or to a database, which can then be easily used within Tableau just like any other data source.

For both tools, users need to know how to code in those programming languages. For users with no programming background, this can present a steep learning curve. Because both tools are open source, a wide variety of advanced analytic techniques and methods are developed in the form of packages, or modules, that can be added to the tools for use.
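The stand-alone workflow described above (analyze outside Tableau, then hand over a file) can be sketched in a few lines of Python. The customer records, the `segment` flag and the file name in the comment are all hypothetical; a real process would read from your own data source and use a proper model in place of the simple average comparison.

```python
import csv
import io
from statistics import mean


def score_customers(rows):
    """Attach a simple above/below-average flag to each row."""
    avg = mean(r["spend"] for r in rows)
    return [
        {**r, "segment": "above_avg" if r["spend"] > avg else "at_or_below_avg"}
        for r in rows
    ]


def write_csv(rows, handle):
    """Write scored rows as CSV, which Tableau can read as a text file source."""
    writer = csv.DictWriter(handle, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)


# Hypothetical input; in practice this would come from a file or database.
customers = [
    {"customer_id": 1, "spend": 120.0},
    {"customer_id": 2, "spend": 80.0},
    {"customer_id": 3, "spend": 100.0},
]
scored = score_customers(customers)

# An in-memory buffer keeps the sketch self-contained; swap it for
# open("scored_customers.csv", "w", newline="") to write a real file.
buffer = io.StringIO()
write_csv(scored, buffer)
csv_text = buffer.getvalue()
```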

Enterprise data science platforms. Enterprise software platforms such as Alteryx Designer and IBM SPSS Modeler also function independently, without full integration with Tableau. They are designed to provide advanced analytics to the enterprise and bring with them all the things you’d expect an enterprise to be concerned with, like ease of use, scalability, security and automation. These also are stand-alone in their functionality: users access the data sources directly, perform any necessary data manipulation, use the advanced analytics functions and then output the results either as a new data set for Tableau or a table of data that can be joined with existing information. Although it is not a full integration, Alteryx has made a significant effort: it can output data as a native Tableau Data Extract (.tde) file, publish work to Tableau Server or Tableau Online, and even serve as a web data source to Tableau.

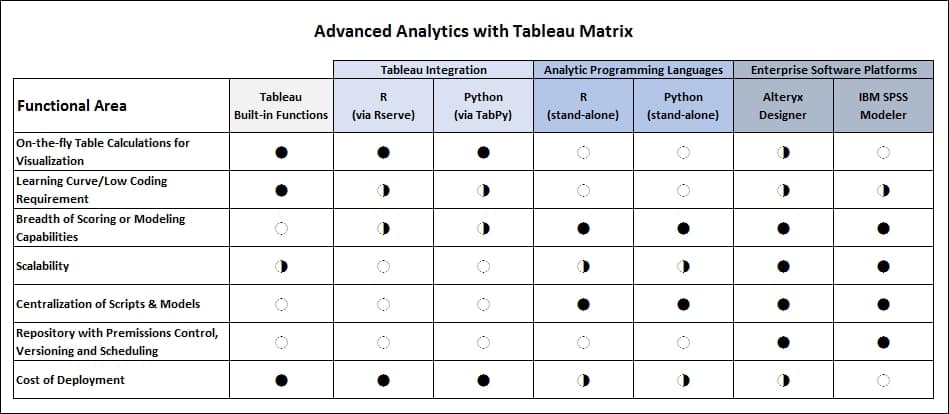

Welcome to the (functionality) matrix

The following matrix outlines some of the major types of functionality that an enterprise would be concerned about in the deployment of advanced analytics, along with the common tools that meet those needs. This tool list is by no means exhaustive. For example, the enterprise data science software class is quite large, so I’ve given just a couple of good examples that cover the broader functionality points. And, while I have done my best to rate each function in general terms, I know there are many exceptions that can be found. With the caveats out of the way, let’s dive in.

On-the-fly table calculations and visualization

Tableau users love the ability to drag and drop elements into the workspace and immediately see the calculation results or changes to the visualization. The matrix ratings for these functionalities reflect how much advance preparation is required to add them and whether the work can be done solely from Tableau. Naturally, the built-in functions are there when you need them, and with a little more work on setup and some extra knowledge, you can create a calculated field that uses the integration to R or Python to get the job done. Rather than requiring a full-fledged program or a formal process, the R and Python integrations let you quickly apply advanced analytic concepts, and even handle simple data manipulations or conversions that may be missing in Tableau, all without having to do development elsewhere.

Stand-alone methods and enterprise software produce data sets that Tableau will adeptly take in as dimensions or measures just like any other data source, and once that data is loaded, worksheets respond even faster than they do with the integrations. The trade-off is that these stand-alone methods are slower on speed-to-utilization on the fly, because the work has to be built and run outside of Tableau first. Alteryx Designer receives a good rating on speed-to-utilization because it is able to push work to Tableau Server and appear as a web data source within Tableau.

Coding requirements and learning curve

The built-in functions of Tableau are easy to use, requiring little more than dragging and dropping or menu selections to work. On the other hand, the Tableau integrations to third-party utilities can be a bit more challenging. Search the internet for walk-throughs and examples. Most are relatively easy either to use directly or to adapt for your desired application.

Because the R integration has been around longer, there are more examples of R integrations than of Python, but that may be changing. Since these do require, at a minimum, some amount of coding (and sometimes troubleshooting) to get them working, the integration packages are ranked with a moderate learning curve. It should be noted that some extremely complex actions can be implemented via the integration, but as we’ll see later, this may not be the best way to go.

The steepest learning curve may be in creating R or Python code without any integration. The users of these platforms must learn the programming language itself, covering everything from accessing and cleaning up data to building and evaluating models and handling the output. There is a lot of power and flexibility in these tools, but the effort of learning to use them is substantial for the average BI analyst.

The two enterprise data science platform examples, Alteryx Designer and IBM SPSS Modeler, earn a moderate score for learning curve. Both have tremendously powerful capabilities, yet each has been architected to require little or no coding. Each provides visual drag and drop interfaces that allow users to control sophisticated routines and algorithms by selecting the appropriate actions and settings for the process. With this simplicity, it is possible for power users/analysts to pick up and begin creating viable advanced analytics models with a moderate amount of training and experience. What’s nice about these tools is their breadth of service from the needs of a novice analyst to a full-fledged data science practitioner.

Breadth of scoring or modeling capabilities

Under consideration for this functionality is the variety of methods that can be realistically employed as part of the analytic process. The integrations from Tableau to R and Python allow you to go much deeper than the built-in functions, but can be unwieldy with larger datasets and complex actions, such as analyzing the sentiments conveyed in survey responses. Using the stand-alone analytic packages or enterprise software gives users the full power of those tools.

Scalability

Tableau’s built-in functions scale moderately well because they are limited by their simplicity. Conversely, here is where the third-party integrations start to show a bit of downside.

Keep in mind the nature of the integration. Tableau is trying to create a calculated field by sending all the data in the data frame out to either R or Python, where the information is processed line by line. The resulting data is read back into Tableau and placed in the workspace. Because of the bottlenecks in this round trip, raw computing horsepower only goes so far and cannot really make it more efficient. The process can be very slow and time consuming if Tableau analysts have to wait for a lengthy table update from external processing every time they make a change to the worksheet. If there’s a lot of data to process and/or complex actions taking place, it makes more sense to move the work outside of Tableau using the stand-alone analytic packages or enterprise software.
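One way to keep iterations quick while an external calculation is still being worked out is to develop against a small, reproducible sample and only run the full data once the logic is settled. The sketch below is one hedged way to do that in Python; `sample_rows`, the 5% fraction and the stand-in data are assumptions chosen for illustration.

```python
import random


def sample_rows(rows, fraction, seed=0):
    """Return a reproducible random subset of rows for quick validation."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    if not rows:
        return []
    k = max(1, round(len(rows) * fraction))
    # A seeded generator makes the same subset come back on every run,
    # so results can be compared between iterations.
    return random.Random(seed).sample(rows, k)


full_data = list(range(1000))  # stand-in for the full set of rows
subset = sample_rows(full_data, 0.05)
```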

The two analytic programming languages earn a moderate ranking in this area. The ratings reflect their native capabilities although these capabilities are improving rapidly. Currently, these tools typically must be combined with other technologies to scale for very large datasets.

Centralization of scripts and models

The ratings for this aspect have to do with managing the advanced analytics processes. Two Tableau analysts working independently may create a process attempting to achieve the same thing, but due to different methodologies they could come up with slightly different results. It becomes difficult to standardize the work, and to manage changes and improvements to it, when team members must share and implement the underlying scripts with each other. Once an enterprise deems the production of the metrics to be valuable, it makes sense to standardize how statistical analysis and advanced analytics are used to ensure they are uniformly applied. This means moving the work into stand-alone programs controlled in a centralized area or onto the enterprise software platforms.
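A small, made-up example shows how easily two reasonable methodologies drift apart. Below, one analyst standardizes a value with the population standard deviation and another uses the sample standard deviation. The shared `standard_score` function is a hypothetical stand-in for whatever single definition a team agrees to maintain centrally.

```python
from statistics import mean, pstdev, stdev

# Hypothetical measurements used by both analysts.
values = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]

# Analyst A standardizes with the population standard deviation.
z_a = (9.0 - mean(values)) / pstdev(values)

# Analyst B uses the sample standard deviation for the same metric.
z_b = (9.0 - mean(values)) / stdev(values)


def standard_score(value, population):
    """Single agreed definition for the team (sample std dev, by assumption)."""
    return (value - mean(population)) / stdev(population)


# Everyone calling the shared function gets the same answer.
z_shared = standard_score(9.0, values)
```

Neither analyst is wrong, but the two numbers differ, which is the kind of discrepancy that centralizing scripts and models is meant to remove.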

Repository with permission control, versioning and scheduling

This area is closely related to the reasoning behind centralization of the work. Once advanced analytics work is federated within an enterprise, managing and protecting the assets becomes a priority. Want to make sure someone only has access to certain results based on sensitive data? Need to revert to a model or analysis method that was used three revisions ago? Want to easily schedule deployment of the processes? The answer to all of these questions is to use the enterprise software platforms.

Cost of deployment

Using built-in functionality or the integrations to open-source R and Python is very low cost. Alternatively, building a process around R or Python outside of Tableau requires the skills of an in-house or contract programmer and advanced analyst. Enterprise platforms have a higher cost initially, but can give a much quicker return on investment when you consider the value of other features that may be important to your business.

Final thoughts and best practices

- Give the R or Python integrations a try. Find some examples and work through them to get ideas on how they can be applied in your work.

- Work with the scalability issues of integrations:

  - Use a smaller set of data to validate the effort and results.

  - Once you have the worksheet how you want it, allow the process to run on the full data.

  - Decide whether the result is worth the processing time, or move the work upstream to an external process.

- At this time, Tableau Online and Tableau Public do not support R or Python capabilities.

- Visualizations created in R or Python via the integration cannot be imported directly into Tableau; however, the image files of the visualizations or URLs pointing to them can be used in the Tableau dashboards.

- You may be interested in our intensive, half-day instructor-led, online course Using R in Tableau. This expert-level class is not available anywhere else.


I would love to hear about your experiences using advanced analytics in Tableau and know your thoughts on my ratings. You can reach me, Arik Killion, at akillion@senturus.com.

Listen to the on-demand webinar I hosted, Advanced Analytics in Tableau: Use the Force!, in which I reviewed the material in this blog, real-world case studies and more.


This post was contributed by our own advanced analytics architect Arik Killion. A bit of a pioneer in advanced analytics, Arik has 19 years of experience in the subject and in that time has developed deep experience using a variety of methodologies from simple quantitative statistics to predictive modeling techniques and text/sentiment analysis.