What makes the Snowflake data cloud so special? Purpose-built as an analytics data cloud for reporting rather than as a transactional database, Snowflake takes the best of traditional database technology and adds fresh, unique technology that makes it particularly well-suited to the agility, sharing and large data volumes that modern BI demands. This blog reviews 10 advantages Snowflake offers analytics platforms like Power BI and Tableau.

Snowflake overview

The Snowflake data cloud is provided as Software-as-a-Service (SaaS). The service sits on top of one of the three major cloud providers: AWS, Azure and Google Cloud.

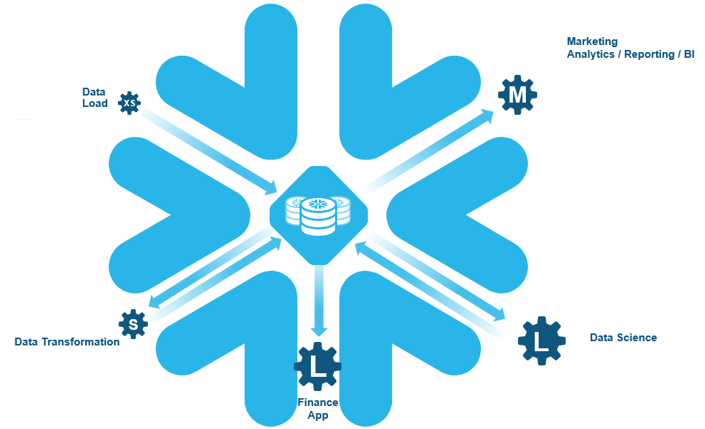

Uniquely, Snowflake has truly decoupled storage and compute. Multiple, independent compute resources can access the same database. This means you can run a virtually unlimited number of concurrent workloads against the same single copy of data without interfering with the performance of other users.


The Snowflake pricing model is consumption based, with separate charges for compute and storage. Different departments can run differently sized compute resources, and the appropriate charge can easily be billed back to each group.

Watch our on-demand webinar for more details about the 10 reasons.

10 reasons Snowflake is great for analytics

Large data volumes

Because storage is backed by AWS, Azure and Google, Snowflake is highly scalable and reliable. Data that is already stored in Amazon S3, Azure Blob Storage or Google Cloud Storage can be hooked directly into your Snowflake tenant. What’s more, loading large datasets is very fast.

Data loading flexibility

Snowflake provides numerous options for loading data into the system depending on your data source:

- Web applications

- Cloud storage

- On-prem databases and files

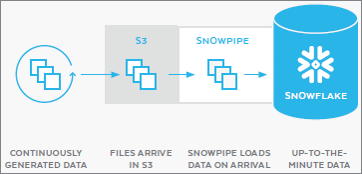

Two particular standouts in data loading flexibility are Snowpipe and third-party tool support.

Snowpipe is Snowflake’s continuous data ingestion service. As new data is generated, Snowpipe is triggered. It pulls the new data into Snowflake, providing up-to-the-minute current data.
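To make the Snowpipe idea a little more concrete, here is a minimal sketch in Python that builds the kind of `CREATE PIPE` statement Snowpipe relies on. The pipe, table and stage names are hypothetical, and we assume an external stage with cloud event notifications has already been set up, which auto-ingest needs. The code only builds the SQL text; it does not connect to Snowflake.

```python
def snowpipe_ddl(pipe: str, table: str, stage: str, file_type: str = "JSON") -> str:
    """Build a CREATE PIPE statement that auto-ingests new files from a stage.

    All object names passed in are illustrative, not from a real environment.
    """
    return (
        f"CREATE PIPE {pipe} AUTO_INGEST = TRUE AS\n"
        f"  COPY INTO {table}\n"
        f"  FROM @{stage}\n"
        f"  FILE_FORMAT = (TYPE = '{file_type}');"
    )


# Hypothetical names: sales_pipe, raw_sales, sales_stage
print(snowpipe_ddl("sales_pipe", "raw_sales", "sales_stage"))
```

Once a pipe like this exists, each new file that lands in the stage is copied into the target table without a scheduled batch job.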

Snowflake’s support for many data integration partners enables rapid lift and shift from an on-prem SQL-based enterprise data warehouse. You can easily repoint your current ODBC or JDBC driver tool to a Snowflake database and start loading data into Snowflake.

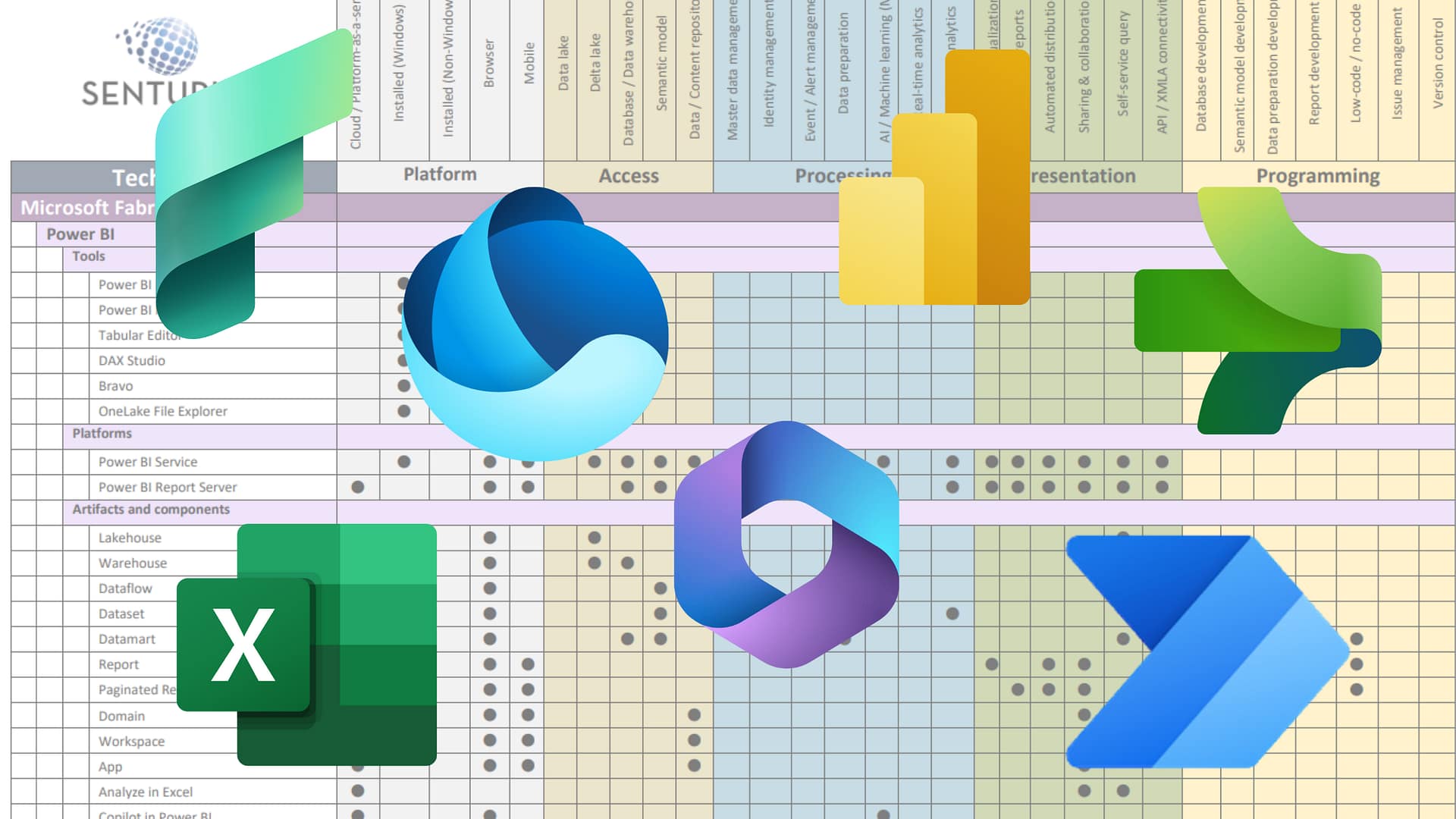


Broad support of BI tools

Snowflake connects to many BI tools. It also gives you plenty of flexibility in how you connect (ODBC, JDBC, command line).


Supports multiple analysis-ready data models

Despite what you might think given the platform’s name, Snowflake supports multiple data modeling approaches. (The company founders like to ski, and snowflakes are made in the cloud.) Modern BI tools such as Tableau, Power BI and Cognos work really well with star and snowflake data models. You can create star- and snowflake-modeled, analysis-ready views on top of Snowflake that you can then secure with users and roles.

Minimized administration

Snowflake lets you simply load, share and query data. Unlike traditional databases, with the Snowflake service there is no need to manage upgrades, partitioning, indexes, backups and a myriad of other tasks.

Performance scalability

In Snowflake you can have many different warehouses (Snowflake’s term for compute clusters) of many different sizes. Cost correlates to compute performance level and use. To control costs, you can start and stop the warehouses as needed. There’s also a built-in auto-suspend feature that limits cost out of the gate. Here’s an example of why this is so powerful: if your morning data load is taking too long, you can scale up to a larger warehouse. Because the data will load faster, you can stop the warehouse sooner and see improved data load performance for little or no additional cost. Improving peak data load performance in a legacy system would be more expensive and likely take much longer.
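The arithmetic behind that scale-up example can be sketched in a few lines of Python. The credit rates below follow Snowflake's published pattern of doubling with each standard warehouse size. The 4-hour baseline load and the perfectly linear speed-up are our own simplifying assumptions, and real loads rarely scale that cleanly.

```python
from typing import Tuple

# Credits per hour for standard warehouses; the rate doubles with each size step.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}


def load_cost(size: str, hours_on_xsmall: float) -> Tuple[float, float]:
    """Return (runtime_hours, credits_used) for a load on the given warehouse size.

    Assumption (ours, for illustration): runtime shrinks in direct proportion
    to the size of the warehouse.
    """
    rate = CREDITS_PER_HOUR[size]
    runtime = hours_on_xsmall / rate
    return runtime, runtime * rate


# Hypothetical morning load that takes 4 hours on an X-Small warehouse
for size in CREDITS_PER_HOUR:
    runtime, credits = load_cost(size, hours_on_xsmall=4.0)
    print(f"{size:<7} {runtime:5.2f} h  {credits:4.1f} credits")
```

Under these assumptions every size consumes the same number of credits, and only the elapsed time changes. That is why scaling up can cost little or nothing extra.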

Additionally, if all your users run their analytics reports and dashboards on Monday morning, for example, the warehouse devoted to that use case can be set to auto-scale. This scales out additional compute capacity when the peak load occurs, then removes those additional resources as the load subsides.
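As a rough illustration of the auto-scale setup described above, the following Python sketch builds a `CREATE WAREHOUSE` statement for a multi-cluster warehouse. The warehouse name, size and limits are hypothetical values chosen for the example. Multi-cluster warehouses are, to our knowledge, available only on Enterprise edition and above.

```python
def reporting_warehouse_ddl(
    name: str,
    size: str = "MEDIUM",
    max_clusters: int = 3,
    suspend_seconds: int = 300,
) -> str:
    """Build DDL for a warehouse that scales out under load and suspends when idle.

    Every argument value used below is an illustrative assumption.
    """
    if max_clusters < 1:
        raise ValueError("max_clusters must be at least 1")
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name}\n"
        f"  WAREHOUSE_SIZE = '{size}'\n"
        f"  MIN_CLUSTER_COUNT = 1\n"
        f"  MAX_CLUSTER_COUNT = {max_clusters}\n"
        f"  SCALING_POLICY = 'STANDARD'\n"
        f"  AUTO_SUSPEND = {suspend_seconds}\n"
        f"  AUTO_RESUME = TRUE;"
    )


# Hypothetical warehouse dedicated to Monday-morning dashboards
print(reporting_warehouse_ddl("bi_reporting_wh"))
```

With a minimum of one cluster and a maximum of three, extra clusters start only while queries are queuing, and `AUTO_SUSPEND` stops billing once the warehouse has been idle for the given number of seconds.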

This idea of dedicated warehouses and scaling up/down and in/out can help your organization optimize its spend while seeing impressive performance across the system.

Semi-structured data

Like many modern data lakes, Snowflake lets you load semi-structured data into database tables. This capability is especially useful for data with constantly changing or inconsistent schemas. Snowflake lets you pull this data into a VARIANT column, then provides SQL-level extensions to cleanly and efficiently query that data into structured views. Unstructured data support is also on the horizon.
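Here is a small sketch of what querying a VARIANT column into a structured view can look like. It uses Snowflake's path notation (`column:field`), casts (`::TYPE`) and `LATERAL FLATTEN` to unnest an array. The table, view, column and JSON field names are all hypothetical, and the Python code only assembles the SQL text.

```python
def order_view_ddl(view: str, table: str, column: str = "payload") -> str:
    """Build a view that turns JSON orders in a VARIANT column into flat rows.

    Assumes (for illustration only) JSON documents shaped like:
    {"order_id": 1, "customer": {"name": "..."}, "items": [{"sku": "...", "qty": 2}]}
    """
    return (
        f"CREATE OR REPLACE VIEW {view} AS\n"
        f"SELECT\n"
        f"  {column}:order_id::NUMBER AS order_id,\n"
        f"  {column}:customer.name::STRING AS customer_name,\n"
        f"  item.value:sku::STRING AS sku,\n"
        f"  item.value:qty::NUMBER AS qty\n"
        f"FROM {table},\n"
        f"  LATERAL FLATTEN(input => {column}:items) item;"
    )


# Hypothetical names: orders_flat (view), raw_orders (table with a VARIANT column)
print(order_view_ddl("orders_flat", "raw_orders"))
```

BI tools can then query the view like any other relational table, even if the underlying JSON gains new fields later.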

Cloning

Snowflake’s unique “zero-copy” cloning technology allows it to copy massive databases quickly. When a copy is made, Snowflake creates a pointer back to the source data and stores only the changes made in the newly copied database, rather than copying the database in its entirety. This method of cloning is fast, cost effective and offers massive benefits for DevOps scenarios.

For example, problems that are seen in production can quickly be reproduced in a dev or test environment by simply copying the production database into a new database. Because of zero copy, the process is nearly instant and incurs almost no additional cost for the copy of that database, no matter how large. Testing then occurs on the copied database, removing any risk of impacting production systems.

Snowflake’s cloning feature is also useful when promoting changes from dev to test to production.
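The production-to-dev scenario above might look something like the following sketch. It builds the statements for cloning a database and for cleaning up afterwards. The database names are hypothetical, and nothing is executed against Snowflake.

```python
from typing import List


def clone_for_debugging(source_db: str, target_db: str) -> List[str]:
    """Return the statements for a clone, test, clean-up cycle.

    Database names are illustrative assumptions, not real objects.
    """
    if source_db == target_db:
        raise ValueError("target must differ from source")
    return [
        # Zero-copy clone: the new database initially shares the source's storage
        f"CREATE DATABASE {target_db} CLONE {source_db};",
        # ... reproduce and fix the issue against the clone ...
        f"DROP DATABASE IF EXISTS {target_db};",
    ]


# Hypothetical names: prod_db is production, prod_debug is the throwaway copy
for statement in clone_for_debugging("prod_db", "prod_debug"):
    print(statement)
```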

Time travel

You can query back in time, which is useful for troubleshooting data loading and for recovering accidentally dropped tables or data. It is like having a back button for your database! You can store up to 90 days of historical data changes (the maximum depends on your Snowflake edition). The length of time you store impacts your storage costs, so if you have tables that change constantly you may not want to retain data quite so long.
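As a hedged sketch of what this looks like in practice, the snippet below builds three common time travel statements: querying a table as it was some minutes ago, restoring a dropped table and adjusting the retention period. The table name and the values are hypothetical.

```python
from typing import Dict


def time_travel_statements(table: str, minutes_ago: int, retention_days: int) -> Dict[str, str]:
    """Build example time travel statements for one table.

    The 0-90 day bound mirrors the maximum retention mentioned above.
    """
    if not 0 <= retention_days <= 90:
        raise ValueError("retention_days must be between 0 and 90")
    offset_seconds = -60 * minutes_ago  # AT(OFFSET => ...) takes seconds, negative = past
    return {
        "query_past": f"SELECT * FROM {table} AT(OFFSET => {offset_seconds});",
        "undrop": f"UNDROP TABLE {table};",
        "set_retention": (
            f"ALTER TABLE {table} SET DATA_RETENTION_TIME_IN_DAYS = {retention_days};"
        ),
    }


# Hypothetical table "orders": look back 30 minutes, keep 7 days of history
for label, sql in time_travel_statements("orders", 30, 7).items():
    print(f"{label}: {sql}")
```

Lowering the retention period on fast-changing tables is the lever for the storage cost trade-off described above.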

Data sharing and the Snowflake Data Marketplace

Snowflake’s data sharing does not consume significant additional storage space or cost. And it opens up two compelling use cases:

First is the ability to pull in curated data sets from the Snowflake Data Marketplace. Many organizations, including non-profits and governments, publish rich databases of information to the Data Marketplace that you can link to your Snowflake environment. With a quick search and a few clicks, you can combine your data with datasets like weather, census and similar. As those databases of information are updated, you also see those updates reflected in your linked copy of that data.

Second is the ability to securely share data with your partners. Similar to databases published on the Snowflake Data Marketplace, you can share a database with a partner or pull a partner’s shared database into your environment. As updates to those databases occur, your linked copy of the data stays current. A great application of this capability can be seen in the supply chain realm, where buyers and sellers can understand inventory levels throughout the entire supply chain.
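For the partner-sharing case, the provider side could be sketched roughly as follows. The share, database, schema, table and account names are hypothetical placeholders, and the code only generates the SQL statements.

```python
from typing import List


def share_statements(
    share: str, database: str, schema: str, table: str, consumer_account: str
) -> List[str]:
    """Return provider-side statements that expose one table through a share.

    All identifiers are illustrative assumptions.
    """
    qualified_schema = f"{database}.{schema}"
    return [
        f"CREATE SHARE {share};",
        f"GRANT USAGE ON DATABASE {database} TO SHARE {share};",
        f"GRANT USAGE ON SCHEMA {qualified_schema} TO SHARE {share};",
        f"GRANT SELECT ON TABLE {qualified_schema}.{table} TO SHARE {share};",
        f"ALTER SHARE {share} ADD ACCOUNTS = {consumer_account};",
    ]


# Hypothetical supply chain example: share inventory levels with one partner account
for statement in share_statements(
    "inventory_share", "supply_db", "public", "inventory_levels", "partner_acct"
):
    print(statement)
```

On the consumer side, the partner would then create a database from the share. No data is copied, which is why the linked data stays current.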

Conclusion

If you are reviewing options for an analytics database, Snowflake is worth a look. Its pricing structure, scalability and secure data sharing capabilities are among the noteworthy features that make it unique in the marketplace. Snowflake could be your answer to the solid data architecture foundation required for successful analytics.

To go deeper on Snowflake, see our on-demand webinar where we go into detail on each of the 10 reasons. Contact Senturus for help with Snowflake.